Deep Learning Algorithm and Model Design

Understanding the Convolution Layer in CNNs

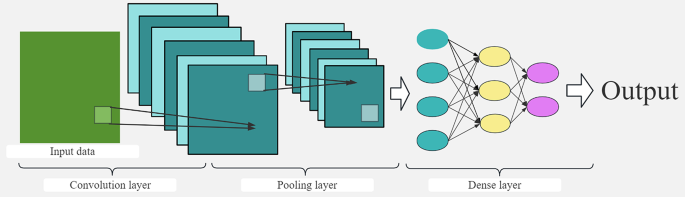

At the heart of a convolutional neural network (CNN) lies the convolution layer, which applies multiple convolution kernels of varying sizes to its input. When the input is a sequence of text vectors, kernels of different widths capture local features at different granularities. In an Intelligent Political Education (IPE) context, for example, both the analysis of students’ micro-expressions and the processing of text content rely on extracting such local features.

In this setting, local text characteristics such as word choice and sentence structure can help reveal a student’s cognitive state or attitude toward a specific topic during discussions. CNNs extract these features efficiently, which helps educators interpret students’ feedback more accurately.
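To make the idea of kernels with different widths concrete, here is a minimal, framework-free sketch. The embeddings, kernel weights, and function names are all invented for illustration and are not taken from the system described here. Each kernel slides over the token vectors, and max-over-time pooling keeps its strongest response.

```python
from typing import List

Vector = List[float]


def conv1d_text(embeddings: List[Vector], kernel: List[Vector]) -> List[float]:
    """Slide one kernel (width x dim) over a sequence of token vectors."""
    width = len(kernel)
    outputs = []
    for start in range(len(embeddings) - width + 1):
        window = embeddings[start:start + width]
        # Sum of element-wise products between the window and the kernel.
        activation = sum(
            x * w
            for token_vec, kernel_row in zip(window, kernel)
            for x, w in zip(token_vec, kernel_row)
        )
        outputs.append(max(0.0, activation))  # ReLU non-linearity
    return outputs


def multi_width_features(embeddings: List[Vector],
                         kernels: List[List[Vector]]) -> List[float]:
    """Max-over-time pooling: keep one feature per kernel.

    Assumes the sequence is at least as long as the widest kernel.
    """
    return [max(conv1d_text(embeddings, k)) for k in kernels]


# Toy input: 5 tokens with 2-dimensional embeddings (made-up values).
sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0], [1.0, 0.0]]
kernels = [
    [[1.0, 1.0], [1.0, 1.0]],              # width 2: looks at token pairs
    [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],  # width 3: looks at token triples
]
features = multi_width_features(sentence, kernels)
print(features)  # [3.0, 4.0]
```

The narrow kernel responds to short phrases and the wider one to longer spans, which is what "different granularities" amounts to in practice.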

Modeling Student Behavior and Spatial Data

When the task involves spatial information, such as recognizing students’ engagement levels in class, the CNN’s convolutional layers can process that information directly. This allows the model to distinguish states of classroom participation such as concentration, confusion, and enthusiasm, which forms a basis for tailored teaching strategies. Aggregating such data informs teaching methods and supports a more engaging educational experience for students.

The Role of LSTM in Time-Dynamic Learning Environments

Moving from local feature extraction to sequences in time, Long Short-Term Memory (LSTM) networks are designed to model sequential data. Their gated memory cells let them track how a student’s knowledge acquisition and cognitive development evolve over time. For instance, an LSTM can analyze time-ordered data such as academic performance and homework completion sequences in order to predict students’ future learning needs.

The LSTM’s strength lies in its ability to retain critical information from past interactions, which makes it well suited to modeling long-term learning trends and student ideologies. By combining insights from early learning milestones with ongoing educational progress, teachers can offer more nuanced guidance and intervention.
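The memory mechanism can be illustrated with a single-unit LSTM cell written in plain Python. This is a sketch of the standard LSTM update equations, not the configuration used in the system described here. The weights are untrained placeholder values and the score sequence is hypothetical.

```python
import math
from typing import Dict, List, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x: float, h_prev: float, c_prev: float,
              p: Dict[str, float]) -> Tuple[float, float]:
    """One time step of a single-unit LSTM cell."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate value
    c = f * c_prev + i * g   # keep part of the old memory, add new information
    h = o * math.tanh(c)     # expose part of the memory as the hidden state
    return h, c


def run_lstm(sequence: List[float], p: Dict[str, float]) -> List[float]:
    """Feed a sequence through the cell and collect the hidden states."""
    h, c = 0.0, 0.0
    hidden_states = []
    for x in sequence:
        h, c = lstm_step(x, h, c, p)
        hidden_states.append(h)
    return hidden_states


# Placeholder (untrained) weights; a real model would learn these.
params = {
    "wf": 0.5, "uf": 0.1, "bf": 1.0,
    "wi": 0.6, "ui": 0.2, "bi": 0.0,
    "wo": 0.4, "uo": 0.3, "bo": 0.0,
    "wg": 0.9, "ug": 0.1, "bg": 0.0,
}
# Hypothetical weekly quiz scores for one student, scaled to [0, 1].
scores = [0.55, 0.60, 0.40, 0.75, 0.80]
hidden_states = run_lstm(scores, params)
```

The cell state `c` is what carries information across time steps: the forget gate decides how much of it survives, so an early milestone can still influence the hidden state many steps later.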

CNN-LSTM Hybrid Model: A Comprehensive Approach

Integrating the two architectures into a hybrid model combines the spatial feature extraction of CNNs with the time-series processing of LSTMs. Applied to IPE data, the combination supports spatio-temporal feature fusion, which gives a richer understanding of learners’ needs.

As an example, the hybrid model can dynamically assess a student’s real-time classroom expressions alongside their performance trends during different learning phases. This integration supports a shift from a "one-size-fits-all" educational model to "precise drip irrigation" that caters to individual student needs.

Limitations of Other Models

Compared with simpler architectures such as feedforward neural networks (FNNs), the hybrid CNN-LSTM model is better suited to complex data that combines sequential structure with local features. Basic recurrent neural networks (RNNs) can process sequences, but they struggle to capture the long-term dependencies that LSTMs are designed to retain. Transformer architectures, though adept at handling long sequences, often carry high computational costs, which makes them less efficient in some educational contexts.

In light of these considerations, a hybrid of CNNs and LSTMs is a practical choice for educational data applications.

Designing the IPE System with Deep Learning

Data Collection and Preprocessing

Enhancing university IPE systems through deep learning starts with collecting diverse data from channels such as lecture notes, homework submissions, discussion forum posts, and online assessments. Together, these sources form the dataset used to train the deep learning models.

The data then undergoes preprocessing: text cleaning, word segmentation, stop-word removal, and word embedding with a technique such as Word2Vec. This process transforms raw text into vectors suitable for deep learning models.
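The preprocessing steps above can be sketched as a small pipeline. This is an illustration under simplifying assumptions: whitespace splitting stands in for word segmentation (languages without spaces would need a dedicated segmenter), the stop-word list is a tiny made-up sample, and a hand-written lookup table stands in for trained Word2Vec vectors.

```python
import re
from typing import Dict, List

# Illustrative stop-word list; a real pipeline would use a fuller one.
STOP_WORDS = {"the", "a", "an", "is", "of", "and", "to", "in", "on"}


def clean_text(raw: str) -> str:
    """Strip HTML tags and punctuation, lowercase, and collapse whitespace."""
    text = re.sub(r"<[^>]+>", " ", raw)
    text = re.sub(r"[^a-z0-9\s]", " ", text.lower())
    return re.sub(r"\s+", " ", text).strip()


def tokenize(text: str) -> List[str]:
    """Whitespace segmentation (an assumption; see the note above)."""
    return text.split()


def remove_stop_words(tokens: List[str]) -> List[str]:
    return [t for t in tokens if t not in STOP_WORDS]


def embed(tokens: List[str], table: Dict[str, List[float]],
          dim: int) -> List[List[float]]:
    """Map tokens to vectors; unknown tokens get a zero vector."""
    unknown = [0.0] * dim
    return [table.get(t, unknown) for t in tokens]


# Toy embedding table standing in for trained Word2Vec vectors.
toy_embeddings = {
    "lecture": [0.1, 0.3],
    "ethics": [0.7, 0.2],
    "great": [0.9, 0.8],
}

raw_post = "<p>The lecture on Ethics is GREAT, and engaging!</p>"
tokens = remove_stop_words(tokenize(clean_text(raw_post)))
vectors = embed(tokens, toy_embeddings, dim=2)
print(tokens)  # ['lecture', 'ethics', 'great', 'engaging']
```

The resulting list of vectors, one per remaining token, is the kind of input a convolution layer can slide its kernels over.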

Constructing the CNN-LSTM Hybrid Model

Once the data is prepared, the next step is to construct the CNN-LSTM hybrid model. The CNN layer extracts local features, while the LSTM layer models the sequential dependencies in the text. Together, they let the model capture both the local and the sequential structure of educational content.
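The data flow through such a hybrid can be sketched without any framework. The key design point shown here is that the CNN stage outputs one feature vector per window rather than pooling over the whole text, so the LSTM stage still sees an ordered sequence. All weights, sizes, and names below are assumptions made for illustration. A real model would be built and trained with a deep learning library.

```python
import math
from typing import Dict, List

Vector = List[float]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def conv_features(embeddings: List[Vector], width: int,
                  kernels: List[Vector]) -> List[Vector]:
    """CNN stage: one feature vector per window, so token order is preserved.

    Each kernel is a flat list of width * embedding_dim weights.
    """
    sequence = []
    for start in range(len(embeddings) - width + 1):
        # Flatten the window of `width` token vectors into one list.
        window = [x for vec in embeddings[start:start + width] for x in vec]
        sequence.append(
            [max(0.0, sum(x * w for x, w in zip(window, k))) for k in kernels]
        )
    return sequence


def lstm_last_hidden(sequence: List[Vector], w_in: Dict[str, Vector],
                     w_rec: Dict[str, float], bias: Dict[str, float]) -> float:
    """LSTM stage: single-unit cell; returns the final hidden state."""
    h, c = 0.0, 0.0
    for x in sequence:
        # Pre-activations for forget (f), input (i), output (o), candidate (g).
        pre = {
            gate: sum(w * xi for w, xi in zip(w_in[gate], x))
            + w_rec[gate] * h + bias[gate]
            for gate in "fiog"
        }
        f, i, o = sigmoid(pre["f"]), sigmoid(pre["i"]), sigmoid(pre["o"])
        c = f * c + i * math.tanh(pre["g"])
        h = o * math.tanh(c)
    return h


def cnn_lstm_score(embeddings, width, kernels, w_in, w_rec, bias,
                   w_out: float, b_out: float) -> float:
    """Embeddings -> convolution features -> LSTM -> sigmoid output."""
    features = conv_features(embeddings, width, kernels)
    return sigmoid(w_out * lstm_last_hidden(features, w_in, w_rec, bias) + b_out)


# Made-up inputs and untrained weights, for illustration only.
tokens = [[0.2, 0.1], [0.9, 0.4], [0.3, 0.8], [0.5, 0.5]]
kernels = [[1.0, 0.0, -1.0, 0.0], [0.5, 0.5, 0.5, 0.5]]
w_in = {"f": [0.1, 0.2], "i": [0.3, -0.1], "o": [0.2, 0.2], "g": [0.5, 0.4]}
w_rec = {"f": 0.1, "i": 0.1, "o": 0.1, "g": 0.1}
bias = {"f": 1.0, "i": 0.0, "o": 0.0, "g": 0.0}
score = cnn_lstm_score(tokens, 2, kernels, w_in, w_rec, bias, w_out=1.5, b_out=0.0)
```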

The model is trained with backpropagation and gradient-descent optimization. Early stopping and regularization are applied to mitigate overfitting and improve generalization.
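Early stopping is usually implemented with a patience counter: training halts once the validation loss has failed to improve for a set number of epochs. The sketch below assumes a patience-based rule and uses an invented list of validation losses. The source does not state the exact stopping criterion that was used.

```python
from typing import Iterable, Tuple


def early_stopping(val_losses: Iterable[float], patience: int = 3,
                   min_delta: float = 0.0) -> Tuple[int, int]:
    """Return (best_epoch, stop_epoch) for a stream of validation losses.

    Training stops once `patience` consecutive epochs fail to improve on
    the best loss seen so far by more than `min_delta`.
    """
    best_loss = float("inf")
    best_epoch = -1
    stop_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        stop_epoch = epoch
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # stop training; restore the weights from best_epoch
    return best_epoch, stop_epoch


# Made-up validation losses: improvement stalls after epoch 2.
losses = [0.90, 0.70, 0.60, 0.62, 0.61, 0.65, 0.50]
best_epoch, stop_epoch = early_stopping(losses, patience=3)
print(best_epoch, stop_epoch)  # 2 5
```

Note that the rule never sees the lower loss at epoch 6. That is the trade-off of a small patience value: less wasted computation, at the risk of stopping before a late improvement.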

Experimental Setup and Hyperparameter Optimization

Data Set Overview

The IPE dataset comprises approximately 5 GB of data, covering millions of records of learning behaviors and interactions from thousands of students. Its varied data sources and types of student engagement make it suitable for realistic model training.

Hyperparameter Tuning Techniques

To maximize the CNN-LSTM hybrid model’s performance, hyperparameters are tuned by combining grid search and random search. The tuned hyperparameters include learning rate, batch size, convolutional kernel size, and the number of layers, and their values are selected through systematic experimentation.
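One plausible way to combine the two methods is a coarse grid search followed by random sampling around the grid winner. The source does not say how the two were combined, so the two-stage scheme, the candidate values, and the scoring function below are all assumptions. `toy_validation_score` is a hypothetical stand-in for training the model and measuring validation performance.

```python
import itertools
import math
import random
from typing import Callable, Dict, List, Tuple

Config = Dict[str, float]


def toy_validation_score(cfg: Config) -> float:
    """Hypothetical stand-in for 'train the model, return validation score'."""
    lr_term = -(math.log10(cfg["learning_rate"]) + 2.7) ** 2
    batch_term = -abs(cfg["batch_size"] - 64) / 640
    kernel_term = -abs(cfg["kernel_size"] - 3) * 0.1
    return lr_term + batch_term + kernel_term


def grid_search(space: Dict[str, List[float]],
                evaluate: Callable[[Config], float]) -> Tuple[Config, float]:
    """Stage 1: evaluate every combination of the listed values."""
    keys = list(space)
    best_cfg: Config = {}
    best_score = float("-inf")
    for values in itertools.product(*(space[k] for k in keys)):
        cfg = dict(zip(keys, values))
        score = evaluate(cfg)
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score


def random_refine(center: Config, evaluate: Callable[[Config], float],
                  trials: int, rng: random.Random) -> Tuple[Config, float]:
    """Stage 2: sample learning rates log-uniformly around the grid winner."""
    best_cfg, best_score = dict(center), evaluate(center)
    for _ in range(trials):
        cfg = dict(center)
        # Within a factor of 3 of the grid winner, uniform in log space.
        cfg["learning_rate"] = center["learning_rate"] * 3.0 ** rng.uniform(-1.0, 1.0)
        score = evaluate(cfg)
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score


search_space = {
    "learning_rate": [1e-4, 1e-3, 1e-2],
    "batch_size": [32, 64, 128],
    "kernel_size": [2, 3, 5],
}
grid_cfg, grid_score = grid_search(search_space, toy_validation_score)
refined_cfg, refined_score = random_refine(
    grid_cfg, toy_validation_score, trials=20, rng=random.Random(0)
)
```

The grid covers the discrete choices exhaustively, while the random stage explores continuous values such as the learning rate that a fixed grid can only sample coarsely.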

Ethical Considerations and Data Privacy

In all phases of research, and especially when handling sensitive student data, strict adherence to ethical guidelines is paramount. Anonymizing student data, encrypting it during handling, and securing informed consent from participants together make for a responsible data collection process. This framework promotes transparency, builds trust, and safeguards individuals’ privacy throughout the research.
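As one possible step toward anonymization, direct identifiers can be dropped and student IDs replaced with keyed hashes before any analysis. The sketch below uses HMAC-SHA256 from the Python standard library. The record fields and key are hypothetical, and the source does not describe the actual scheme used. Strictly speaking this is pseudonymization, because anyone holding the key can re-link records, so the key must be stored separately and protected.

```python
import hashlib
import hmac
from typing import Dict

# Hypothetical field names treated as direct identifiers.
DIRECT_IDENTIFIERS = {"name", "email"}


def pseudonymize_id(student_id: str, secret_key: bytes) -> str:
    """Replace an ID with a keyed hash so records stay linkable but opaque."""
    digest = hmac.new(secret_key, student_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def anonymize_record(record: Dict[str, str], secret_key: bytes) -> Dict[str, str]:
    """Drop direct identifiers and pseudonymize the student ID."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["student_id"] = pseudonymize_id(record["student_id"], secret_key)
    return cleaned


# Placeholder key for illustration; a real key would come from a secrets store.
key = b"example-key-do-not-use-in-production"
record = {
    "student_id": "S2023001",
    "name": "Example Student",
    "email": "student@example.edu",
    "forum_post": "I found the discussion on civic duty thought-provoking.",
}
safe_record = anonymize_record(record, key)
```

Because the same ID always maps to the same pseudonym under a given key, a student's records can still be grouped into sequences for modeling without exposing who the student is.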

By integrating advanced modeling techniques and ethical considerations, the IPE system aims not only to enhance learning experiences but also to maintain a respectful and secure educational environment.