The Development and Evaluation of the LLM Rating Scale

The emergence of large language models (LLMs) has opened new avenues for improving psychological therapies. This article describes how the LLM rating scale was developed: a structured, multi-stage process aimed at improving the evaluation of patient engagement during therapy.

Structured Data Preparation

The initial phase of this project involved the automated transcription of psychological therapy sessions. The sessions were diarized and segmented using a local pipeline, so that patient data never left the local environment. This privacy-preserving approach allowed data to be prepared at scale for the subsequent analyses.

Once segmented, the transcripts were paired with an initial pool of 120 theoretically derived items. These items were then rated using a local large language model (LLM). The psychometric selection pipeline refined this pool, filtering items for distributional properties, reliability, and validity.

Strategies Against Inflated Estimates

To guard against inflated estimates from item selection on the same data, two complementary strategies were implemented:

- Repeated 3-Fold Cross-Validation: This method ensured strict independence between item selection, performed on training folds, and psychometric evaluation, executed on test folds. Keeping the two steps separate prevents the selection step from inflating the reported estimates.

- Bootstrap Optimism Correction: Alongside cross-validation, this technique adjusted performance metrics for optimism, estimated from the difference between performance in the bootstrap samples and performance in the original dataset. Together, the two strategies allowed the final scale’s generalizability and robustness to be assessed while limiting the risk of overfitting.
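
The separation between selection and evaluation described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the study's pipeline: `select_items` uses a hypothetical rule (keep the items most correlated with a criterion), whereas the study filtered items on distributional properties, reliability, and validity. All function and parameter names are invented for the example, and rows are treated as independent; how folds were formed for sessions nested within patients is not detailed here.

```python
import random
from statistics import mean


def pearson(x, y):
    """Pearson correlation; returns 0.0 if either variable is constant."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / (sxx * syy) ** 0.5


def select_items(rows, criterion, n_keep):
    """Hypothetical selection rule: keep the n_keep items whose ratings
    correlate most strongly (in absolute value) with the criterion."""
    n_items = len(rows[0])
    strength = [abs(pearson([r[j] for r in rows], criterion))
                for j in range(n_items)]
    ranked = sorted(range(n_items), key=lambda j: strength[j], reverse=True)
    return sorted(ranked[:n_keep])


def scale_validity(rows, criterion, items):
    """Correlation between the sum score of the chosen items and the criterion."""
    totals = [sum(r[j] for j in items) for r in rows]
    return pearson(totals, criterion)


def repeated_kfold(rows, criterion, n_keep, k=3, repeats=10, seed=0):
    """Select items on the training folds only, evaluate on the held-out fold."""
    rng = random.Random(seed)
    results = []
    for _ in range(repeats):
        idx = list(range(len(rows)))
        rng.shuffle(idx)
        folds = [idx[f::k] for f in range(k)]  # k disjoint folds
        for f in range(k):
            test = folds[f]
            train = [i for g in range(k) if g != f for i in folds[g]]
            items = select_items([rows[i] for i in train],
                                 [criterion[i] for i in train], n_keep)
            results.append(scale_validity([rows[i] for i in test],
                                          [criterion[i] for i in test], items))
    return results
```

Averaging the returned values gives an estimate that is not contaminated by the selection step, because the test fold never influences which items are chosen.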

Patient and Therapist Dynamics

The sample for the study comprised 1,131 session transcripts collected from 155 patients, with an average age of 36.37 years (SD = 13.95); 61.9% were female. Inclusion required informed consent from both patient and therapist and a minimum of four transcribed sessions.

The average patient attended 7.3 sessions (SD = 3.03). Diagnoses were varied but primarily centered around depressive disorders (52.6%), panic/agoraphobia (13.0%), and adjustment disorders (11.7%). All sessions were conducted in German by 95 therapists, who had an average age of 28.01 years (SD = 4.20) and of whom 84.6% were female. The therapists held master’s degrees in psychology and had at least 1.5 years of clinical experience.

Ethical Considerations

Ethical adherence was a priority in this study, with all methods aligned with the relevant guidelines and regulations. The ethics committee of Trier University approved the project. Rigorous protocols ensured that patient confidentiality remained intact, with therapy transcripts securely stored and processed locally, thereby avoiding third-party data transmission or cloud services.

Treatment Framework

From 2016 to 2024, participants engaged in personalized integrative cognitive-behavioral therapies (CBT) at a university clinic in Southwest Germany. Therapists received training in manualized treatments and were supervised by licensed cognitive behavior therapists with extensive clinical experience. Each individual therapy session lasted 50 minutes and was conducted weekly, establishing a routine that supported patient engagement.

Measuring Engagement

Patients completed questionnaire batteries at several points: at intake, at treatment termination, and after every fifth session. In addition, they completed short scales before and after each session. This schedule allowed therapists to monitor progress and outcomes continuously.

Measures of Engagement Determinants

Engagement determinants, such as motivation and therapeutic alliance, were assessed rigorously:

- Motivation was evaluated after session 15 using the motivation subscale of the Assessment for Signal Clients (ASC). This subscale comprises nine items, rated on a five-point Likert scale, that ask patients to reflect on how they feel about their treatment. Reliability for this scale was good (α = 0.83).

- Alliance was assessed from both patient and therapist perspectives using the Bern Post-Session Report (BPSR), with good reliability (α = 0.86 for patients and α = 0.87 for therapists).
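
The α values reported here are Cronbach's alpha coefficients. As a minimal sketch of how such a coefficient is computed from item-level responses (the function name and the toy data layout are assumptions for illustration; the study's own software is not described here):

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of item scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score))
    """
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item across respondents
    item_vars = [variance([r[j] for r in rows]) for j in range(k)]
    # Sample variance of the summed scale score
    total_var = variance([sum(r) for r in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)
```

Alpha approaches 1 when items rise and fall together across respondents, and drops as items share less of their variance.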

Engagement Processes

Engagement processes covered both within-session and between-session effort.

- Within-Session Effort: Assessed through patient-rated problem coping, evaluated via the BPSR. This measure demonstrated high reliability (α = 0.91) at session 15.

- Between-Session Effort: Determined through therapist ratings of patients’ willingness to work on their therapy between sessions.

Engagement Outcomes

To measure treatment outcomes, symptom severity was assessed using the Outcome Questionnaire-30 (OQ-30) at session 25, roughly ten sessions after the average transcribed session. The OQ-30 provides a broad measure of psychological functioning and showed excellent reliability (α = 0.95).

Software Framework Utilization

The study applied the DISCOVER framework, a sophisticated tool for data-driven observations, supporting various data types and workflows without requiring extensive technical expertise. This framework integrates with tools such as NOVA for visualizing data streams and machine learning models to facilitate deeper insights into patient engagement.

Transcript Preparation and Analysis

Therapy sessions were recorded following a protocol designed to ensure audio quality suitable for automated transcription. Transcripts were generated with WhisperX, which achieved a word error rate (WER) of 26.76% while maintaining high semantic alignment between automated and manual transcripts.
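
For readers unfamiliar with the metric, WER is the word-level edit distance between the automated and the manual transcript, divided by the number of words in the manual transcript. A minimal sketch follows; it splits on whitespace and applies no normalization of case or punctuation, which is an assumption, since the study's preprocessing is not described here.

```python
def word_error_rate(reference, hypothesis):
    """(substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript is empty")
    # Levenshtein distance over words, keeping only the previous row
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        cur = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            cur.append(min(prev[j] + 1,          # deletion
                           cur[j - 1] + 1,       # insertion
                           prev[j - 1] + cost))  # match or substitution
        prev = cur
    return prev[-1] / len(ref)


# One substitution ("ist" -> "war") and one deletion ("müde") over 5 words
print(word_error_rate("der patient ist heute müde", "der patient war heute"))
```

Because WER counts every differing word equally, a transcript can have a sizeable WER and still preserve meaning, which is why semantic alignment is reported alongside it.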

Scale Development Process

The scale’s development adhered to best practices for creating psychometric measures. Development began with an item pool derived from conceptual frameworks; the LLM then rated the therapy transcripts on these items. The resulting ratings were passed through a systematic pipeline designed to ensure psychometric integrity.

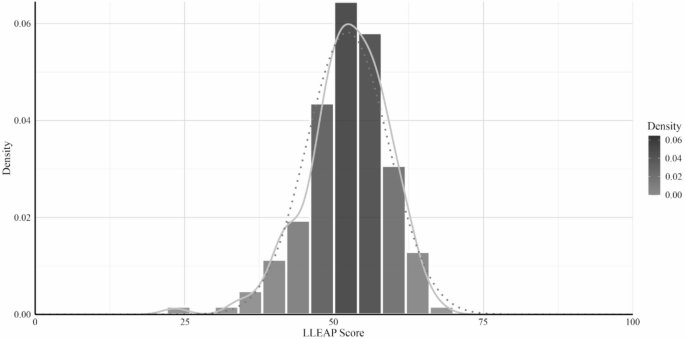

Item Selection and Scale Evaluation

The rigorous evaluation of selected items involved psychometric analysis that scrutinized scale distribution, reliability, model fit, and validity. A multi-faceted approach using descriptive statistics, confirmatory factor analysis, correlation analyses, and multilevel modeling ensured that the final scale met stringent psychometric criteria for deployment in real-world therapy contexts.

Bootstrap methods and repeated k-fold cross-validation were used to check that the scale’s reliability and predictive validity held up beyond the data used for item selection, providing a sounder basis for future applications in therapeutic practice.
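
The bootstrap side of this validation can be illustrated with the standard optimism-correction scheme: repeat the whole fitting procedure on each bootstrap sample, measure how much better the result looks on that sample than on the original data, and subtract the average gap from the apparent performance. The sketch below is generic and hedged; `fit` and `score` are hypothetical callables standing in for the study's item selection and psychometric indices, and `n_boot` is an arbitrary default.

```python
import random
from statistics import mean


def optimism_corrected(data, fit, score, n_boot=200, seed=0):
    """Apparent performance minus the mean bootstrap optimism.

    fit(sample)        -> model (e.g. a set of selected items)
    score(model, data) -> performance of that model on the given data
    """
    rng = random.Random(seed)
    n = len(data)
    # Performance when fitting and evaluating on the same (original) data
    apparent = score(fit(data), data)
    optimism = []
    for _ in range(n_boot):
        # Resample cases with replacement
        boot = [data[rng.randrange(n)] for _ in range(n)]
        model = fit(boot)
        # How much better the model looks on its own sample than on the original
        optimism.append(score(model, boot) - score(model, data))
    return apparent - mean(optimism)
```

The key design point is that `fit` must include every data-dependent step, item selection included; otherwise the estimated optimism understates how much the selection itself flatters the result.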

The LLM rating scale represents a significant step in leveraging technology to optimize therapeutic practices, contributing substantially to the landscape of psychology and mental health treatment.