Evaluating MLLM-SRec: A Comprehensive Study on Multimodal Sequential Recommendations

Multimodal Large Language Models (MLLMs) have attracted considerable interest in recommendation research, particularly for sequential recommendation (SR), where the goal is to predict a user’s next interaction from their interaction history. MLLM-SRec is a model that aims to improve SR by integrating diverse data modalities, such as item text and images. This article reviews its evaluation, which is organized around research questions about its overall performance and the contribution of its components.

Research Questions Explored

This article investigates four key research questions regarding the performance and efficacy of MLLM-SRec:

- RQ1: How does MLLM-SRec perform compared to state-of-the-art LLM-based recommendation methods?

- RQ2: Do different modalities, and combinations of them, help capture users’ dynamic preferences more accurately?

- RQ3: What is the effect of different components of MLLM-SRec on its overall effectiveness?

- RQ4: How do various configurations of fine-tuning strategies impact MLLM-SRec’s performance?

Experimental Settings

Dataset Description

MLLM-SRec is evaluated on four widely used open-source datasets from Amazon Reviews, covering the baby products, sports, beauty, and toys categories. These datasets record user behavior across diverse products and provide a solid basis for assessing recommendation effectiveness. Interactions are labeled as positive or negative according to rating thresholds, a balanced negative sampling strategy keeps the interaction records diverse, and the data are divided cleanly into training, validation, and test sets.
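The preprocessing described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the study’s pipeline: the rating cut-off of 4, uniform sampling of unseen items as negatives, and the leave-one-out split (last interaction for testing, second-to-last for validation) are all hypothetical choices, since the source specifies none of them.

```python
import random
from collections import defaultdict

# Hypothetical cut-off: the source only says labels come from "rating thresholds".
POSITIVE_THRESHOLD = 4


def build_sequences(reviews, threshold=POSITIVE_THRESHOLD):
    """Group (user, item, rating, timestamp) tuples into time-ordered
    [(item, label), ...] lists, with label 1 for positive and 0 for negative."""
    by_user = defaultdict(list)
    for user, item, rating, ts in reviews:
        by_user[user].append((ts, item, 1 if rating >= threshold else 0))
    # Sorting by timestamp turns each user's reviews into a sequence.
    return {user: [(item, label) for _, item, label in sorted(events)]
            for user, events in by_user.items()}


def sample_negatives(interacted, all_items, k, seed=0):
    """Uniformly draw up to k items the user never interacted with (seeded)."""
    rng = random.Random(seed)
    pool = sorted(set(all_items) - set(interacted))
    return rng.sample(pool, min(k, len(pool)))


def leave_one_out_split(sequence):
    """Assumed protocol: last event for test, second-to-last for validation."""
    if len(sequence) < 3:
        return list(sequence), [], []
    return sequence[:-2], [sequence[-2]], [sequence[-1]]


reviews = [("u1", "a", 5, 3), ("u1", "b", 2, 1), ("u1", "c", 4, 2), ("u1", "d", 5, 4)]
seqs = build_sequences(reviews)
train, valid, test_set = leave_one_out_split(seqs["u1"])
print(seqs["u1"])  # [('b', 0), ('c', 1), ('a', 1), ('d', 1)]
```

A "balanced" scheme would typically call `sample_negatives` with `k` equal to the user’s number of positives; that reading is also an assumption.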

Baseline Methods

To evaluate MLLM-SRec comprehensively, the model was compared against three groups of baselines: traditional SR models such as SASRec and BERT4Rec, multimodal recommendation models such as MMSR and MMGCN, and LLM-based models such as GPTRec and TALLRec. Each baseline captures user preferences in a different way, which makes it possible to identify where MLLM-SRec’s advantages come from.

Evaluation Metrics

MLLM-SRec and the baselines were measured with four ranking-based evaluation metrics: Hit Rate at K (HR@K), Normalized Discounted Cumulative Gain at K (NDCG@K), Recall at K (Recall@K), and Area Under the ROC Curve (AUC). All methods were evaluated on candidate item sets of the same size, which keeps the comparison fair.
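For reference, the four metrics can be computed per user as below, given one model score per candidate item. This is a generic sketch assuming binary relevance; the helper names are hypothetical, and per-user values would then be averaged over all test users. The source does not state how many items each candidate set contains.

```python
import math


def rank_items(scores):
    """Sort candidate item ids by predicted score, highest first."""
    return sorted(scores, key=scores.get, reverse=True)


def hit_rate_at_k(ranked, relevant, k):
    """1.0 if any relevant item appears in the top k, else 0.0."""
    return 1.0 if any(item in relevant for item in ranked[:k]) else 0.0


def recall_at_k(ranked, relevant, k):
    """Fraction of relevant items retrieved in the top k."""
    return len(set(ranked[:k]) & relevant) / len(relevant)


def ndcg_at_k(ranked, relevant, k):
    """Binary-relevance NDCG: DCG of the ranking divided by the ideal DCG."""
    dcg = sum(1.0 / math.log2(pos + 2)
              for pos, item in enumerate(ranked[:k]) if item in relevant)
    idcg = sum(1.0 / math.log2(pos + 2) for pos in range(min(len(relevant), k)))
    return dcg / idcg


def auc(scores, relevant):
    """Probability a relevant item outscores an irrelevant one (ties count 0.5)."""
    pos = [s for item, s in scores.items() if item in relevant]
    neg = [s for item, s in scores.items() if item not in relevant]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


scores = {"a": 0.9, "b": 0.8, "c": 0.3, "d": 0.1}  # toy candidate set
relevant = {"b"}
ranked = rank_items(scores)  # ['a', 'b', 'c', 'd']
```

With the single relevant item ranked second, HR@1 is 0, HR@2 and Recall@2 are 1, NDCG@2 is 1/log2(3), and AUC is 2/3, since the relevant item beats two of the three irrelevant ones.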

Implementation Details

Experiments were run on an Ubuntu server with GPU acceleration. MLLM-SRec’s backbone models are open-source LLMs from Hugging Face, adapted with parameter-efficient fine-tuning techniques such as QLoRA. The setup was designed to produce clear experimental outcomes while reducing the influence of randomness.
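The source does not name the exact parameter-efficient method, though QLoRA appears in the fine-tuning study. As a hedged illustration of why such methods are cheap, the sketch below implements the low-rank update used by LoRA, on which QLoRA builds: a frozen weight W of shape d_out × d_in is adapted as W + (alpha / r) · B A, where only B (d_out × r) and A (r × d_in) are trained. The layer size 4096 and rank 8 are hypothetical values chosen for the arithmetic.

```python
def lora_param_counts(d_out, d_in, rank):
    """Trainable parameters for a full update vs. a rank-r LoRA update."""
    full = d_out * d_in
    lora = rank * (d_out + d_in)  # B is d_out x r, A is r x d_in
    return full, lora


def matmul(x, y):
    """Plain-Python matrix product of nested lists."""
    return [[sum(x[i][t] * y[t][j] for t in range(len(y)))
             for j in range(len(y[0]))] for i in range(len(x))]


def lora_merge(w, a, b, alpha, rank):
    """Fold the adapter into the frozen weight: W' = W + (alpha / r) * B @ A."""
    delta = matmul(b, a)
    scale = alpha / rank
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


full, lora = lora_param_counts(4096, 4096, 8)
print(full, lora, f"{100 * lora / full:.3f}%")  # 16777216 65536 0.391%
```

For this hypothetical layer, the adapter trains about 0.39% of the parameters a full update would touch, which is the main reason such methods fit on a single GPU server.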

Overall Performance (RQ1)

Experimental results show that MLLM-SRec consistently outperforms the baseline models, including traditional SR techniques and other LLM-based methods. Averaged across datasets, it improves on the strongest baseline by 9.90%. These results support the effectiveness of MLLM-SRec in using multimodal data to improve recommendation.
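A headline figure like the 9.90% average gain is usually a relative improvement over the best-performing baseline, computed per dataset and then averaged. The source does not spell out the exact protocol (for example, which metric or value of K is averaged), so the sketch below shows one common convention, and every score in it is made up for illustration.

```python
def relative_improvement(model_score, baseline_score):
    """Percentage gain of the model over one baseline score."""
    return (model_score - baseline_score) / baseline_score * 100


def avg_gain_over_strongest(model_scores, baseline_scores):
    """model_scores: {dataset: score};
    baseline_scores: {dataset: {baseline_name: score}}.
    For each dataset, compare against the best baseline, then average."""
    gains = [relative_improvement(score, max(baseline_scores[ds].values()))
             for ds, score in model_scores.items()]
    return sum(gains) / len(gains)


# Illustrative numbers only, not results from the study.
model = {"Toys": 0.55, "Beauty": 0.44}
baselines = {"Toys": {"A": 0.50, "B": 0.48}, "Beauty": {"A": 0.40, "B": 0.42}}
print(round(avg_gain_over_strongest(model, baselines), 2))  # 7.38
```

Note that the "strongest baseline" can differ per dataset (here A for Toys, B for Beauty); an alternative convention fixes a single overall strongest baseline, which can give a different average.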

The study attributes this advantage to the nature of pre-trained MLLMs: they carry extensive world knowledge and can interpret complex user intents, which makes them effective at extracting and aligning multimodal information. This stronger comprehension helps the model capture users’ changing behavioral preferences accurately.

Ablation Study

Impact of Different Input Modalities (RQ2)

Experiments on input modalities show the benefit of combining text and image inputs. Each single modality provided useful signal, but integrating both significantly improved recommendation accuracy. The interplay between textual and visual inputs lets MLLM-SRec align item content across modalities, which deepens its understanding of users and raises overall performance.
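One simple way to give an LLM both modalities is to render each item as text that combines its title with a caption describing its image, then list the user’s history in order. The sketch below assumes this caption-based design; the `Item` fields, the prompt wording, and the Yes/No answer format are hypothetical and not taken from MLLM-SRec. Switching `use_text` or `use_image` off yields the single-modality inputs a modality comparison needs.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Item:
    title: str
    caption: Optional[str] = None  # hypothetical MLLM-generated image description


def describe(item: Item, use_text: bool = True, use_image: bool = True) -> str:
    """Render one item using only the enabled modalities."""
    parts = []
    if use_text:
        parts.append(f"Title: {item.title}")
    if use_image and item.caption:
        parts.append(f"Image: {item.caption}")
    return "; ".join(parts)


def build_prompt(history: List[Item], candidate: Item,
                 use_text: bool = True, use_image: bool = True) -> str:
    """Chronological history plus one candidate, framed as a Yes/No question."""
    lines = ["The user interacted with these items, oldest first:"]
    for i, item in enumerate(history, 1):
        lines.append(f"{i}. {describe(item, use_text, use_image)}")
    lines.append(f"Candidate: {describe(candidate, use_text, use_image)}")
    lines.append("Will the user like the candidate? Answer Yes or No.")
    return "\n".join(lines)


history = [Item("Wooden puzzle", "a colorful wooden jigsaw puzzle")]
candidate = Item("Building blocks", "a box of stacked plastic blocks")
print(build_prompt(history, candidate))
```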

Impact of Components in MLLM-SRec (RQ3)

To measure the contribution of individual components, the study compared several MLLM-SRec variants. For example, the variant without the Image Captioning component (MLLM-SV) still performed well relative to variants that rely solely on unimodal inputs. This comparison underscores the importance of integrated multimodal processing for capturing users’ preferences comprehensively.
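An ablation of this kind amounts to re-running the same evaluation with components switched off and reporting each variant’s change relative to the full model. The sketch below is a hypothetical harness: the flag names and variant set are illustrative rather than MLLM-SRec’s actual variant definitions, and `evaluate` stands in for training and scoring a variant (for example, returning NDCG@10).

```python
from typing import Callable, Dict, Tuple

Flags = Dict[str, bool]

# Hypothetical variants; only "w/o captioning" mirrors a variant named in the text.
VARIANTS: Dict[str, Flags] = {
    "full": {"text": True, "image": True, "captioning": True},
    "w/o captioning": {"text": True, "image": True, "captioning": False},
    "text only": {"text": True, "image": False, "captioning": False},
}


def run_ablation(evaluate: Callable[[Flags], float],
                 variants: Dict[str, Flags] = VARIANTS) -> Dict[str, Tuple[float, float]]:
    """Return {variant: (score, % change vs. the full model)}."""
    scores = {name: evaluate(flags) for name, flags in variants.items()}
    full = scores["full"]
    return {name: (s, (s - full) / full * 100) for name, s in scores.items()}


# Toy stand-in for a real train-and-evaluate run; the numbers mean nothing.
def toy_evaluate(flags: Flags) -> float:
    return 0.2 + 0.1 * flags["text"] + 0.05 * flags["image"] + 0.05 * flags["captioning"]


for name, (score, change) in run_ablation(toy_evaluate).items():
    print(f"{name:15s} {score:.3f} {change:+.1f}%")
```

Reporting the percentage change rather than raw scores makes variants comparable across datasets whose absolute metric levels differ.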

Impact of Fine-Tuning Strategy Selection (RQ4)

The study of fine-tuning configurations, including QLoRA and chain-of-thought (CoT) reasoning, clarified how these choices affect performance. Performance improved markedly when multiple alignment strategies were applied, which indicates that fine-tuning must be tailored for the model to adapt well to multimodal recommendation tasks.
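QLoRA keeps the frozen base model in 4-bit precision and trains LoRA adapters on top of it, which cuts memory substantially. The sketch below is deliberately simplified: it uses symmetric blockwise absmax quantization to signed 4-bit integers, whereas QLoRA itself uses the NF4 data type plus double quantization of the scales. The 7-billion-parameter model size in the memory arithmetic is a hypothetical example, and the estimate ignores adapters, activations, and optimizer state.

```python
def quantize_block(values, bits=4):
    """Symmetric absmax quantization of one block to ints in [-qmax, qmax]."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit
    absmax = max(abs(v) for v in values) or 1.0  # avoid dividing by zero
    scale = absmax / qmax
    return [round(v / scale) for v in values], scale


def dequantize_block(codes, scale):
    """Map integer codes back to approximate real values."""
    return [c * scale for c in codes]


def weight_memory_gb(n_params, bits):
    """Storage for the weights alone, in gigabytes (10**9 bytes)."""
    return n_params * bits / 8 / 1e9


block = [0.7, -0.35, 0.1, 0.0, -0.62]
codes, scale = quantize_block(block)
restored = dequantize_block(codes, scale)
max_err = max(abs(a - b) for a, b in zip(block, restored))
print(codes, round(max_err, 3))
print(weight_memory_gb(7e9, 16), weight_memory_gb(7e9, 4))  # 14.0 3.5
```

Rounding keeps each restored value within half a quantization step (scale / 2) of the original, and per-block scales keep one outlier from degrading the precision of the whole tensor.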

Addressing MLLM Hallucinations

MLLM-based recommendation systems can hallucinate, that is, generate outputs that are not grounded in the input data. This study used the CLIP cross-modal semantic distance metric to evaluate and mitigate hallucinations in MLLM-SRec. The results show that MLLM-SRec is robust to such input anomalies and maintains stable performance in the presence of potential data noise.
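A CLIP-based hallucination check can be sketched as comparing an item’s image embedding with the embedding of the text the MLLM generated for it: a large cross-modal distance suggests the text is not grounded in the image. In practice the vectors would come from a CLIP model (for example, `get_image_features` and `get_text_features` on Hugging Face’s `CLIPModel`); here they are tiny hand-written stand-ins, and the distance threshold is a hypothetical value that would need calibrating on real data. The source does not describe exactly how MLLM-SRec applies the metric.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_distance(u: Sequence[float], v: Sequence[float]) -> float:
    """1 - cosine similarity; 0 means same direction, 1 means orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm


def flag_ungrounded(pairs: Dict[str, Tuple[List[float], List[float]]],
                    max_distance: float = 0.5) -> List[str]:
    """Return ids whose (image_emb, text_emb) pair is farther apart than allowed."""
    return sorted(item for item, (img, txt) in pairs.items()
                  if cosine_distance(img, txt) > max_distance)


pairs = {
    "item1": ([1.0, 0.0, 0.0], [0.9, 0.1, 0.0]),  # caption matches the image
    "item2": ([1.0, 0.0, 0.0], [0.0, 1.0, 0.0]),  # caption unrelated to the image
}
print(flag_ungrounded(pairs))  # ['item2']
```

Flagged items could, for instance, fall back to text-only descriptions; that remediation is likewise an assumption rather than the study’s documented procedure.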

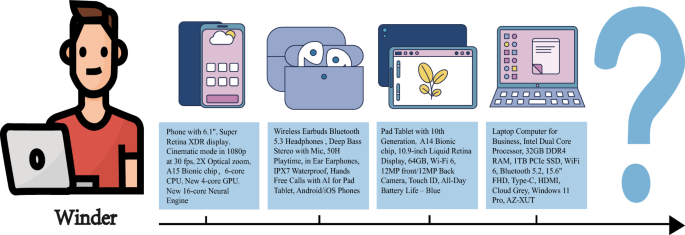

Case Study

A detailed case study applied MLLM-SRec to multimodal interaction data from a campus services platform. By processing users’ sequential interactions, MLLM-SRec produced relevant item recommendations, supporting its practicality in a real-world setting.

Overall, this evaluation of MLLM-SRec offers useful insight into how multimodal integration benefits sequential recommendation and points to promising directions for future recommendation systems.