Advanced Object Recognition Using YOLOE in Computer Vision

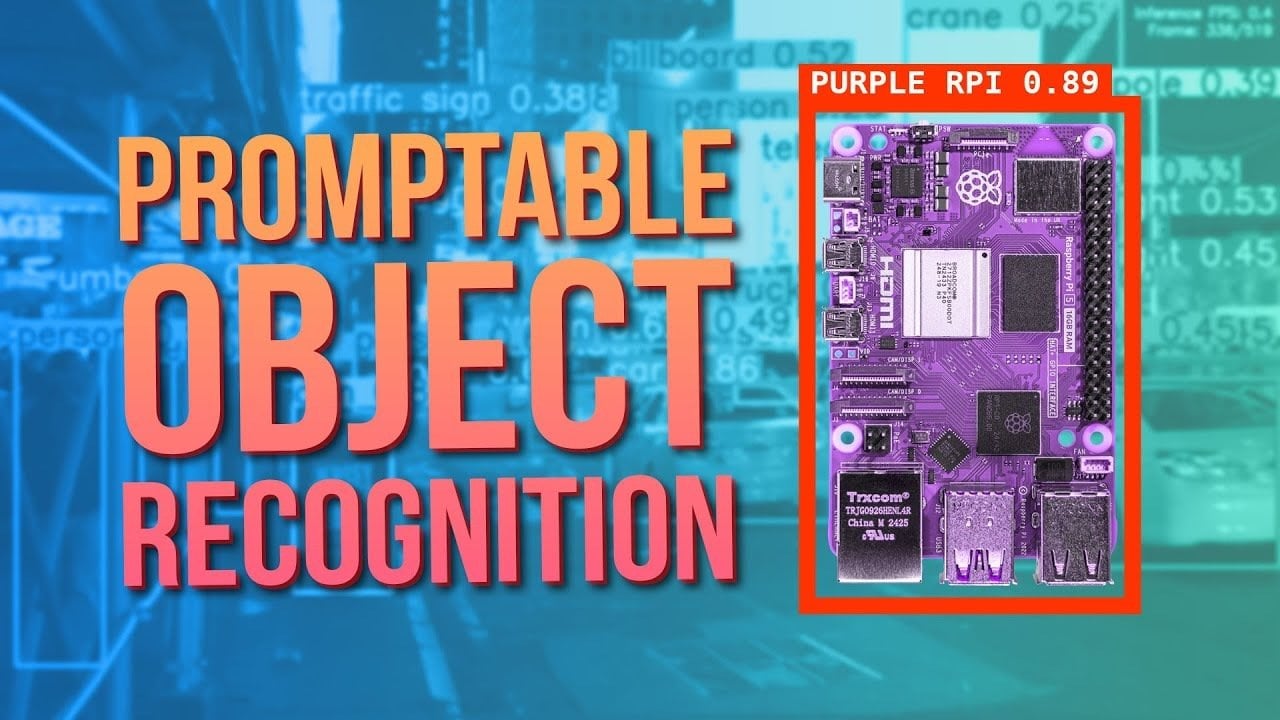

What if you could teach a computer to recognize a zebra without ever showing it one? YOLOE is a recent addition to the YOLO family of computer vision models that aims to make this possible. Unlike traditional detectors, which need extensive training on large sets of labeled images, YOLOE draws on pre-trained visual concepts to identify objects it was never explicitly trained to detect. That lowers the barrier to advanced object detection for developers, researchers, and hobbyists alike, including those working with low-power devices such as the Raspberry Pi 5.

This article explores how YOLOE changes the way object detection projects are built. You’ll see how its zero-training approach opens the door to new detection tasks, from recognizing objects by attributes like shape and color to running on a variety of hardware. Let’s explore.

YOLOE: Transforming Object Detection

TL;DR Key Takeaways

- YOLOE revolutionizes computer vision by using pre-trained visual concepts, which eliminates the need for extensive retraining and enhances accessibility.

- It introduces promptable vision, allowing detection through text or image-based prompts, recognizing objects based on attributes like shape, color, and texture.

- Designed for low-power devices like the Raspberry Pi 5, YOLOE supports various formats, ensuring compatibility with a range of hardware configurations.

- Applications range from DIY robotics and home automation to object tracking and custom detection tasks.

- Despite some challenges with obscure items or complex scenes, YOLOE remains an effective tool for general-purpose detection, offering adaptability and efficiency.

How YOLOE Stands Apart from Traditional YOLO Models

Traditional YOLO (You Only Look Once) models heavily depend on vast datasets of labeled images to learn how to recognize specific objects. This training process can be resource-intensive, often requiring advanced hardware and considerable computation time, which limits accessibility to those with ample resources.

YOLOE takes a different approach by building on pre-trained visual concepts. Instead of starting from scratch, it identifies objects by their attributes, such as shape, color, and texture. For example, YOLOE can recognize a zebra from its “striped” and “four-legged” features, even if it was never trained on labeled zebra images. This greatly reduces the need for retraining, which makes object detection faster to set up and easier to adapt to a wider range of applications.

Key Features and Innovations

YOLOE offers a suite of features that enhance its functionality and effectiveness in object detection tasks:

- Promptable Vision Model: Users can detect objects through text or image-based prompts, allowing for enhanced interaction. For example, specifying “red car” will guide the model in its detection process.

- Visual Concept Understanding: By breaking down objects into recognizable attributes, YOLOE can detect them without requiring new datasets for retraining.

- Custom Prompts: YOLOE allows users to define detection tasks based on specific prompts, making it adaptable to diverse scenarios.

- Extensive Object Recognition: Thanks to its pre-trained knowledge, YOLOE can identify thousands of objects, offering exceptional versatility.

These capabilities give developers and hobbyists considerable flexibility: detection targets can be changed by editing a prompt rather than by retraining the model.
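To make the idea of promptable detection concrete, here is a minimal Python sketch. It assumes the `ultralytics` package is installed and exposes a `YOLOE` class with `set_classes`, `get_text_pe`, and `predict`, as described in the Ultralytics documentation at the time of writing. The weights file name `yoloe-11s-seg.pt` and the helper names `clean_prompts` and `detect_with_text_prompts` are illustrative assumptions, not part of the original article.

```python
from typing import Iterable, List


def clean_prompts(prompts: Iterable[str]) -> List[str]:
    """Collapse whitespace, drop empty prompts, and remove
    case-insensitive duplicates while keeping the original order."""
    seen = set()
    cleaned = []
    for prompt in prompts:
        text = " ".join(prompt.split())
        key = text.lower()
        if text and key not in seen:
            seen.add(key)
            cleaned.append(text)
    return cleaned


def detect_with_text_prompts(image_path: str,
                             prompts: Iterable[str],
                             weights: str = "yoloe-11s-seg.pt"):
    """Run YOLOE on one image, restricted to the given text prompts.

    Assumption: `ultralytics` is installed and the weights name is valid
    for the installed version. Check the official docs before relying on it.
    """
    # Imported lazily so clean_prompts() can be used without the package.
    from ultralytics import YOLOE

    names = clean_prompts(prompts)
    model = YOLOE(weights)
    # The text prompts are embedded and registered as the class list.
    model.set_classes(names, model.get_text_pe(names))
    return model.predict(image_path)
```

Calling `detect_with_text_prompts("street.jpg", ["red car", "zebra"])` would then return the prediction results for those two prompts only; `street.jpg` is a placeholder path.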

YOLOE Computer Vision for Developers and Hobbyists

YOLOE’s straightforward setup means its benefits are not limited to professionals; hobbyists and developers can also use it in their own projects. Whether you’re interested in robotics, home automation, or artistic installations, the model gives you the tools to bring your ideas to life.

Performance and Adaptability Across Platforms

YOLOE is crafted to deliver robust performance across a broad spectrum of platforms, including resource-constrained devices like the Raspberry Pi 5. Key areas of adaptability include:

- Format Compatibility: It supports formats like ONNX and NCNN, ensuring seamless integration with varied hardware configurations.

- Resolution and Model Size Optimization: Users can adjust input resolution and model size to trade detection accuracy against processing speed, which is vital for achieving real-time performance.

- Low-power Applications: The lightweight YOLOE architecture is suitable for energy-sensitive environments, making it ideal for IoT devices and other portable systems.

These features illustrate the versatility of YOLOE, which fits use cases ranging from high-performance environments to energy-efficient, edge-based operations.
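The resolution trade-off mentioned above can be illustrated with simple arithmetic. The sketch below compares the number of input pixels at different square image sizes. Pixel count is only a rough proxy for compute cost (an assumption here, not a measured benchmark), and the function name `relative_pixel_cost` is hypothetical.

```python
def relative_pixel_cost(imgsz: int, baseline: int = 640) -> float:
    """Ratio of input pixels at `imgsz` versus `baseline` for square inputs."""
    if imgsz <= 0 or baseline <= 0:
        raise ValueError("image sizes must be positive")
    return (imgsz / baseline) ** 2


# Halving the side length from 640 to 320 leaves a quarter of the pixels.
for size in (640, 480, 320):
    print(size, relative_pixel_cost(size))
```

On a device like the Raspberry Pi 5, this is why dropping the input size is often the first setting to try when frame rate matters more than detecting small objects.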

Challenges and Limitations

While YOLOE pushes many boundaries in object detection, it’s important to acknowledge its limitations. The model can struggle with obscure or highly specific objects that lack clear visual attributes. In complex scenes—especially those with overlapping or ambiguous objects—false positives may occur. Nevertheless, YOLOE excels at general-purpose detection tasks, thanks to its wide recognition capabilities and adaptability.
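One common way to reduce false positives in any detector is to discard low-confidence detections. The sketch below shows that idea on plain Python data. The `Detection` class, the `filter_by_confidence` helper, and the 0.5 default threshold are illustrative assumptions and are not taken from YOLOE itself.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    label: str
    confidence: float  # score in the range [0, 1]


def filter_by_confidence(detections: List[Detection],
                         threshold: float = 0.5) -> List[Detection]:
    """Keep only detections whose confidence is at or above the threshold."""
    return [d for d in detections if d.confidence >= threshold]


candidates = [
    Detection("zebra", 0.91),
    Detection("horse", 0.32),  # likely a false positive in this example
    Detection("car", 0.50),
]
kept = filter_by_confidence(candidates)
print([d.label for d in kept])
```

Raising the threshold trades missed objects for fewer false alarms, so the right value depends on the scene and is best tuned on your own footage.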

Practical Applications of YOLOE

YOLOE’s versatility makes it well-suited for numerous real-world applications, especially those requiring real-time detection and flexibility:

- Raspberry Pi 5 Projects: Coupled with a camera module, YOLOE can enable object detection for DIY robotics, home automation, and surveillance.

- Object Counting and Tracking: It is useful for counting objects or tracking their positions in real time, with applications in inventory management, traffic monitoring, and event analysis.

- Custom Detection Tasks: Users can adapt YOLOE by utilizing text or image-based prompts, allowing for tailored identification relevant to their specific needs.

These applications underline the practical advantages of YOLOE, showcasing its potential to streamline processes and boost efficiency across various domains.
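Object counting, one of the applications listed above, reduces to tallying the labels of the detections in a frame. The following sketch assumes a result object that exposes `names` (a mapping from class index to label) and `boxes.cls` (the class index of each detection), which matches how Ultralytics results are documented; the helper names `labels_from_result` and `count_by_label` are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List


def labels_from_result(result) -> List[str]:
    """Map each detected class index to its label.

    Assumption: `result.names` maps int -> str and `result.boxes.cls`
    is an iterable of class indices, as in Ultralytics result objects.
    """
    return [result.names[int(c)] for c in result.boxes.cls]


def count_by_label(labels: Iterable[str]) -> Dict[str, int]:
    """Count how many times each label appears."""
    return dict(Counter(labels))
```

For a single frame, `count_by_label(labels_from_result(results[0]))` would give a per-class tally that can be logged or displayed, where `results` is the list returned by a prediction call.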

Getting Started with YOLOE

Setting up YOLOE can be straightforward, making it accessible to users with varying technical backgrounds. Here’s a brief guide:

- Installation: Start by installing the Ultralytics YOLO package.

- Configuration: Set up Python scripts tailored to your detection tasks.

- Flexibility: YOLOE supports both text and image-based prompts, letting you define detection parameters.

- Optimization: Utilize tools for resolution adjustment and model size tuning to meet the specifications of your hardware.

Whether you’re working on a powerful setup or a more modest device, YOLOE places the necessary tools at your fingertips to embark confidently on your detection projects.
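The steps above can be sketched in Python as follows. The package is typically installed with `pip install ultralytics`. The sketch assumes the `YOLOE` class and its `set_classes`, `get_text_pe`, and `export` methods behave as described in the Ultralytics documentation; the weights name `yoloe-11s-seg.pt` and the helpers `build_export_args` and `export_yoloe` are illustrative assumptions.

```python
from typing import Dict, Iterable, Union

# The two export formats named in this article.
SUPPORTED_FORMATS = ("onnx", "ncnn")


def build_export_args(fmt: str, imgsz: int = 640) -> Dict[str, Union[str, int]]:
    """Validate the export settings and return them as keyword arguments."""
    fmt = fmt.lower()
    if fmt not in SUPPORTED_FORMATS:
        raise ValueError(f"unsupported format: {fmt!r}")
    if imgsz <= 0:
        raise ValueError("imgsz must be positive")
    return {"format": fmt, "imgsz": imgsz}


def export_yoloe(prompts: Iterable[str],
                 fmt: str = "ncnn",
                 imgsz: int = 320,
                 weights: str = "yoloe-11s-seg.pt"):
    """Fix the prompt classes, then export the model for deployment.

    Assumption: `ultralytics` is installed and supports this export path
    for the chosen weights; verify against the official docs.
    """
    # Imported lazily so build_export_args() works without the package.
    from ultralytics import YOLOE

    names = list(prompts)
    model = YOLOE(weights)
    model.set_classes(names, model.get_text_pe(names))
    return model.export(**build_export_args(fmt, imgsz))
```

A smaller `imgsz` and a lighter export format are the two settings most likely to matter on modest hardware, which is why they are the only parameters exposed here.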

Advancing Object Detection with YOLOE

In summary, YOLOE marks a considerable advance in computer vision. By removing the need for extensive retraining, it simplifies custom object detection and makes it accessible to users with limited resources. Its efficiency on low-power devices, broad recognition capabilities, and adaptability make it useful across many applications. Whether you are experimenting with real-time detection or building projects on platforms like the Raspberry Pi 5, YOLOE offers a powerful, efficient, and user-friendly approach.