Women’s Representation in Science, Technology, Innovation, and Policy: A Data-Driven Exploration Using Machine Learning

Introduction to the Research Study

In recent years, the fields of science, technology, innovation, and policy (STIP) have come under scrutiny for their representation of women. Understanding the dynamics that govern this representation across countries is critical for promoting inclusivity.

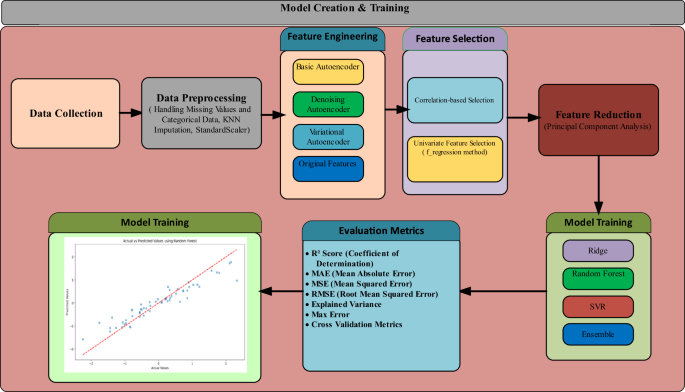

This study applies a machine learning framework to analyze women’s representation in STIP across 60 countries. Using a structured dataset with both numerical and categorical features, we aim to identify the factors that influence the participation of women in STIP.

Dataset Overview and Methodology

Our dataset comprises five numerical features and one categorical feature, focusing on key metrics such as the Percentage of Women in STIP (PWS). Two features had missing values: 1.70% of entries for STEM degrees and a much larger 33.30% for business degrees. We filled these gaps with k-nearest neighbors (KNN) imputation as a preprocessing step, so that the analysis could proceed on a complete dataset.
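As an illustration of the imputation step, here is a minimal sketch using scikit-learn's `KNNImputer`. The toy matrix and the choice of `n_neighbors=2` are assumptions made for the example; the study does not report its neighbor count or distance settings.

```python
import numpy as np
from sklearn.impute import KNNImputer

# Hypothetical toy matrix: rows are countries, columns are numerical features.
# np.nan marks a missing entry (for example, a missing business-degree share).
X = np.array([
    [30.0, 45.0, 12.0],
    [32.0, np.nan, 14.0],
    [28.0, 40.0, np.nan],
    [35.0, 50.0, 15.0],
    [31.0, 46.0, 13.0],
])

# Percentage of missing values per column, the kind of figure quoted above.
missing_pct = np.isnan(X).mean(axis=0) * 100
print("Percent missing per column:", missing_pct)

# n_neighbors=2 is an illustrative value, not one reported by the study.
imputer = KNNImputer(n_neighbors=2, weights="uniform")
X_imputed = imputer.fit_transform(X)
print(X_imputed)
```

Each missing entry is replaced by the average of that feature over the nearest rows, where distance is computed on the features both rows have in common. Observed values are left unchanged.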

Feature Engineering

To maximize the model’s predictive capabilities, we employed three types of autoencoders: basic, variational, and denoising. These techniques expanded our feature space from the original six columns to 27. After feature extraction, we ran a selection process that combined Random Forest feature importance, LASSO regression, and Sequential Feature Selection to pinpoint the variables that most strongly affect PWS.
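One way the three selection methods could be combined is a simple vote, sketched below with scikit-learn on synthetic data. The voting rule (keep a feature chosen by at least two methods), the value of `k`, and the estimator settings are all assumptions for illustration; the study does not state how it merged the three rankings.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.feature_selection import SequentialFeatureSelector
from sklearn.linear_model import LassoCV, LinearRegression
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for a 60 x 27 engineered feature matrix (not the study's data).
X, y = make_regression(n_samples=60, n_features=27, n_informative=5,
                       noise=5.0, random_state=0)
X = StandardScaler().fit_transform(X)
k = 8  # hypothetical number of features each method may nominate

# 1) Random Forest: the k features with the highest impurity-based importance.
rf = RandomForestRegressor(n_estimators=200, random_state=0).fit(X, y)
rf_mask = np.zeros(X.shape[1], dtype=bool)
rf_mask[np.argsort(rf.feature_importances_)[-k:]] = True

# 2) LASSO: features whose coefficient is not shrunk to zero.
lasso = LassoCV(cv=5, max_iter=10000, random_state=0).fit(X, y)
lasso_mask = lasso.coef_ != 0

# 3) Sequential Feature Selection: greedy forward search for k features.
sfs = SequentialFeatureSelector(LinearRegression(), n_features_to_select=k,
                                direction="forward", cv=5).fit(X, y)
sfs_mask = sfs.get_support()

# Assumed combination rule: keep features nominated by at least two methods.
votes = rf_mask.astype(int) + lasso_mask.astype(int) + sfs_mask.astype(int)
selected = np.flatnonzero(votes >= 2)
print("Selected feature indices:", selected)
```

A vote is only one option; intersecting or ranking the three outputs would also be consistent with the description above.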

We also applied dimensionality reduction techniques, including correlation analysis and Principal Component Analysis (PCA), keeping enough principal components to retain 95% of the variance while shrinking the input space.
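The 95% variance criterion maps directly onto scikit-learn's `PCA`, which accepts a fraction for `n_components`. The sketch below uses synthetic data with a low-dimensional latent structure as an assumed stand-in for the engineered features.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)

# Synthetic stand-in: 60 rows and 27 columns driven by 5 latent factors plus noise.
latent = rng.normal(size=(60, 5))
X = latent @ rng.normal(size=(5, 27)) + 0.1 * rng.normal(size=(60, 27))

# PCA is scale-sensitive, so standardize the columns first.
X_scaled = StandardScaler().fit_transform(X)

# A float in (0, 1) asks PCA for the smallest number of components
# whose cumulative explained variance reaches that fraction.
pca = PCA(n_components=0.95, svd_solver="full")
X_reduced = pca.fit_transform(X_scaled)

retained = pca.explained_variance_ratio_.sum()
print(f"Components kept: {pca.n_components_}, variance retained: {retained:.3f}")
```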

Sensitivity Analysis for Robustness

We used several techniques to validate the robustness of our models. Cross-validation stability testing, bootstrapping, and outlier sensitivity analysis quantified the variability of model performance. Together, these methods showed how the models respond to changes in data and parameters under a range of conditions.

Feature importance was gauged through permutation testing and controlled perturbation experiments, allowing us to identify influential predictors and measure the model’s sensitivity to noise. Data subsampling analysis revealed how model performance scales with varying training sample sizes, thereby establishing minimum data requirements for reliable deployments.
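Permutation testing can be sketched with scikit-learn's `permutation_importance`, which shuffles one feature at a time on held-out data and records the drop in score. The synthetic data, the Ridge model, and `n_repeats=30` below are assumptions for the example.

```python
import numpy as np
from sklearn.inspection import permutation_importance
from sklearn.linear_model import Ridge
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)

# Synthetic data: features 0 and 1 drive the target, features 2 and 3 are pure noise.
X = rng.normal(size=(60, 4))
y = 3.0 * X[:, 0] + 1.0 * X[:, 1] + 0.1 * rng.normal(size=60)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0)
model = Ridge(alpha=1.0).fit(X_train, y_train)

# Shuffle each feature 30 times on the held-out set and measure the R^2 drop.
result = permutation_importance(model, X_test, y_test, n_repeats=30,
                                random_state=0, scoring="r2")

ranking = np.argsort(result.importances_mean)[::-1]
for j in ranking:
    print(f"feature {j}: {result.importances_mean[j]:.3f} "
          f"+/- {result.importances_std[j]:.3f}")
```

A large drop in score after shuffling means the model relies on that feature; a drop near zero suggests the feature could be removed without much loss.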

Evaluation of Regression Models

We explored various feature combinations across a range of regression models: Ridge Regression, Support Vector Regression (SVR), Linear Regression, ElasticNet, and Lasso Regression. Each model was evaluated with 10-fold cross-validation, and hyperparameters were tuned with GridSearchCV, including C values and kernel types for SVR and regularization strengths for Ridge.
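The tuning setup described above can be sketched as follows. The parameter grids, the scaling step, and the RMSE scoring choice are assumptions for illustration; the study does not list the exact values it searched.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import GridSearchCV, KFold
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR

# Synthetic stand-in for the 60-country dataset.
X, y = make_regression(n_samples=60, n_features=6, noise=10.0, random_state=0)

# 10-fold cross-validation, shuffled with a fixed seed for repeatability.
cv = KFold(n_splits=10, shuffle=True, random_state=0)

# Hypothetical grids: regularization strength for Ridge, C and kernel for SVR.
searches = {
    "ridge": (Ridge(), {"model__alpha": [0.01, 0.1, 1.0, 10.0]}),
    "svr": (SVR(), {"model__C": [0.1, 1.0, 10.0, 100.0],
                    "model__kernel": ["linear", "rbf"]}),
}

best = {}
for name, (estimator, grid) in searches.items():
    # Scaling lives inside the pipeline so it is refit on each training fold.
    pipe = Pipeline([("scale", StandardScaler()), ("model", estimator)])
    search = GridSearchCV(pipe, grid, cv=cv,
                          scoring="neg_root_mean_squared_error")
    search.fit(X, y)
    best[name] = (search.best_params_, -search.best_score_)
    print(name, best[name])
```

Keeping the scaler inside the pipeline avoids leaking information from validation folds into the training folds, which matters with only 60 samples.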

The models were assessed using multiple metrics such as \(R^2\), Mean Absolute Error (MAE), and Root Mean Squared Error (RMSE).
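For reference, the three metrics can be computed directly from their standard definitions. The four values below are made-up numbers chosen so the arithmetic is easy to check by hand; they are not results from the study.

```python
import numpy as np

# Made-up PWS values (percent) and predictions, for illustration only.
y_true = np.array([30.0, 40.0, 50.0, 60.0])
y_pred = np.array([32.0, 38.0, 53.0, 57.0])

residuals = y_true - y_pred

# MAE: average absolute error, in the same units as the target.
mae = np.mean(np.abs(residuals))

# RMSE: square root of the mean squared error; penalizes large errors more.
rmse = np.sqrt(np.mean(residuals ** 2))

# R^2: one minus the ratio of residual to total sum of squares.
ss_res = np.sum(residuals ** 2)
ss_tot = np.sum((y_true - y_true.mean()) ** 2)
r2 = 1.0 - ss_res / ss_tot

print(f"MAE={mae:.3f} RMSE={rmse:.3f} R2={r2:.3f}")
# prints: MAE=2.500 RMSE=2.550 R2=0.948
```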

Statistical Framework and Visual Analysis

Our study employed comprehensive descriptive statistics to unpack the complexities surrounding women’s participation in STIP. Notably, box plot visualizations illustrated relationships between diversity quotas and women’s participation.

We examined cross-sector interactions through scatter plots and used heatmaps to display the correlation coefficients between features. Combining the two views allowed an in-depth exploration of relationships and trends within our dataset.
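The numbers behind such plots can be produced with pandas, as in the sketch below. The column names (`stem_degrees_pct`, `women_in_stip_pct`, `has_quota`) and the synthetic values are hypothetical; the study's actual variable names are not given here.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
n = 60

# Hypothetical columns with synthetic values, for illustration only.
stem = rng.normal(35, 5, n)
df = pd.DataFrame({
    "stem_degrees_pct": stem,
    "women_in_stip_pct": 0.8 * stem + rng.normal(0, 2, n),
    "has_quota": rng.integers(0, 2, n).astype(bool),
})

# Pearson correlation matrix: the values a heatmap would color-code.
corr = df[["stem_degrees_pct", "women_in_stip_pct"]].corr(method="pearson")
print(corr.round(3))

# Per-group quartiles: the summary statistics a box plot draws.
by_quota = df.groupby("has_quota")["women_in_stip_pct"].describe()
print(by_quota[["25%", "50%", "75%"]].round(2))
```

The `corr` frame can be handed to a plotting library's heatmap function, and the grouped column to a box plot function, to produce the figures described above.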

Sensitivity Analysis Techniques

To validate the accuracy and reliability of our models, we conducted extensive sensitivity analyses. Because the sample is limited to 60 countries, we used several complementary techniques to check model robustness and the reliability of the reported performance.

Sample Size Sensitivity Testing

We examined how performance metrics change with sample size, including conditions where models were trained on 30%, 50%, and 70% of the data. These experiments trace out learning curves and indicate the sample size at which performance becomes acceptable.
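A minimal sketch of this kind of experiment, assuming a fixed held-out test set of 30% and repeated random splits, is shown below. The Ridge model, the number of repeats, and the synthetic data are assumptions; the study does not describe its exact splitting scheme.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import ShuffleSplit, cross_val_score

# Synthetic stand-in for the 60-country dataset.
n = 60
X, y = make_regression(n_samples=n, n_features=6, noise=10.0, random_state=0)

n_test = 18  # assumed fixed test set: 30% of the 60 rows
results = {}
for frac in (0.3, 0.5, 0.7):
    # Train on the requested share of the data, repeated over 20 random splits.
    splitter = ShuffleSplit(n_splits=20, train_size=round(frac * n),
                            test_size=n_test, random_state=0)
    scores = cross_val_score(Ridge(alpha=1.0), X, y, cv=splitter, scoring="r2")
    results[frac] = (scores.mean(), scores.std())
    print(f"train fraction {frac:.0%}: R2 = {scores.mean():.3f} "
          f"+/- {scores.std():.3f}")
```

Plotting the mean score against the training fraction gives the learning curve; the spread across repeats shows how unstable the estimate is at each size.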

Bootstrapping

Through bootstrap resampling, we assessed model uncertainty. By drawing many resampled datasets and evaluating the model on each, we derived mean values and confidence intervals, which are especially important for small datasets.
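The bootstrap procedure can be sketched as below, using out-of-bag rows (those not drawn in a given resample) as the test set and a percentile interval. The number of resamples, the Ridge model, and the out-of-bag evaluation are assumptions; other bootstrap variants would also fit the description.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.metrics import r2_score

rng = np.random.default_rng(0)

# Synthetic stand-in for a 60-row dataset.
n = 60
X = rng.normal(size=(n, 4))
y = X @ np.array([2.0, -1.0, 0.5, 0.0]) + rng.normal(scale=0.5, size=n)

scores = []
for _ in range(500):
    idx = rng.integers(0, n, size=n)        # draw n rows with replacement
    oob = np.setdiff1d(np.arange(n), idx)   # rows never drawn: out-of-bag set
    model = Ridge(alpha=1.0).fit(X[idx], y[idx])
    scores.append(r2_score(y[oob], model.predict(X[oob])))
scores = np.array(scores)

# Mean score and a 95% percentile confidence interval.
mean_r2 = scores.mean()
ci_low, ci_high = np.percentile(scores, [2.5, 97.5])
print(f"R2 = {mean_r2:.3f}, 95% CI [{ci_low:.3f}, {ci_high:.3f}]")
```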

Feature Perturbation and Outlier Analysis

To gauge the model’s sensitivity to noise, we implemented a feature perturbation strategy, systematically introducing noise into individual features. Outlier sensitivity analysis, in turn, clarified how outliers affected model predictions and how stable those predictions remained.
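The perturbation strategy can be illustrated as follows: fit a model once, add Gaussian noise to one feature at a time, and record how much the error grows. The noise scale, the Ridge model, and the synthetic data are assumptions, and the sketch covers only the perturbation part, not the outlier analysis.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_squared_error

rng = np.random.default_rng(0)

# Synthetic data: feature 0 matters most, feature 2 has no effect on the target.
X = rng.normal(size=(60, 3))
y = X @ np.array([3.0, 1.0, 0.0]) + rng.normal(scale=0.3, size=60)

model = Ridge(alpha=1.0).fit(X, y)
# For brevity the error is measured on the training rows; a held-out set
# would give a less optimistic baseline.
baseline_rmse = np.sqrt(mean_squared_error(y, model.predict(X)))

noise_scale = 0.5  # hypothetical: noise std as a fraction of each feature's std
rmse_increase = {}
for j in range(X.shape[1]):
    X_noisy = X.copy()
    # Perturb only column j, leaving the other features untouched.
    X_noisy[:, j] += rng.normal(scale=noise_scale * X[:, j].std(), size=len(X))
    rmse = np.sqrt(mean_squared_error(y, model.predict(X_noisy)))
    rmse_increase[j] = rmse - baseline_rmse
    print(f"feature {j}: RMSE increase = {rmse_increase[j]:.3f}")
```

Features whose perturbation barely changes the error are ones the model is insensitive to; a large increase flags a feature where measurement noise would hurt predictions most.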

Quality Control Framework

Quality assurance was integral throughout the study, encompassing systematic outlier detection, consistency checks, and validation of imputed values against domain knowledge. The authors maintained rigorous documentation of all methodologies and transformations, ensuring reproducibility and reliability.

Implementation and Detailed Documentation

Throughout the research process, the authors kept detailed records of all methodological steps and analytical procedures. These records cover data preprocessing, model parameters, and validation results, which supports transparency and makes future replication and extension possible.

This machine learning framework, underpinned by statistical validation methods, provides a nuanced understanding of women’s representation in STIP. It contributes insights to an area at the intersection of diversity, equity, and inclusivity in a contemporary global context.