PPML Methodology for Privacy-Preserving Medical Image Analysis

In healthcare, protecting sensitive patient information is paramount. As machine learning (ML) is applied to tasks such as medical image analysis, methods are needed that preserve privacy while maintaining predictive performance. This article explores a privacy-preserving machine learning (PPML) methodology built on the Concrete ML framework, in which models are trained to be compatible with Fully Homomorphic Encryption (FHE) and then evaluated securely on confidential medical image data. The focus is on the Torus Fully Homomorphic Encryption (TFHE) scheme, applied to the MedMNIST dataset, with the aim of understanding how privacy-preserving techniques can be used without compromising accuracy.

Concrete ML and Fully Connected Neural Networks (FCNN)

Concrete ML is an open-source Privacy-Preserving Machine Learning (PPML) framework; here it is used to protect patient data throughout the ML pipeline. Within this framework, a Fully Connected Neural Network (FCNN) is used for medical image classification. Conventional neural networks, including the Convolutional Neural Networks (CNNs) commonly used for images, are typically designed for floating-point computation on cleartext data. The FCNN in this study is instead designed for FHE compatibility, so that it can operate on encrypted inputs.

A central challenge is building a neural network that satisfies the constraints of FHE: computations must be expressed over low-precision integers, nonlinear functions must be evaluated as table lookups, and intermediate sums (accumulators) must stay within a bounded bit-width. The architecture is therefore designed and validated against these constraints, which also provides a way to measure and tune accuracy under encryption.
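One FHE constraint that can be checked at design time is the bit-width of each layer's accumulator, the integer that holds a neuron's weighted sum. The following is a minimal sketch of such a check, assuming signed n_w-bit weights, unsigned n_a-bit activations and a hypothetical 16-bit accumulator limit (the real limit depends on the Concrete version in use). It bounds the worst case from the layer's fan-in alone; the function names and layer sizes are illustrative and not part of Concrete ML.

```python
# Hypothetical accumulator limit; check the limit of your Concrete version.
MAX_ACCUM_BITS = 16


def worst_case_accumulator_bits(fan_in: int, n_w_bits: int, n_a_bits: int) -> int:
    """Signed bit-width sufficient for sum(w_i * x_i) over `fan_in` terms.

    Assumes weights are signed n_w_bits integers in [-2**(n_w_bits-1), 2**(n_w_bits-1)-1]
    and activations are unsigned n_a_bits integers in [0, 2**n_a_bits - 1].
    """
    max_abs_w = 2 ** (n_w_bits - 1)
    max_x = 2 ** n_a_bits - 1
    bound = fan_in * max_abs_w * max_x
    return bound.bit_length() + 1  # +1 for the sign bit


def check_architecture(layer_sizes, n_w_bits, n_a_bits, max_bits=MAX_ACCUM_BITS):
    """Return (layer_index, required_bits, fits) for each dense layer.

    `layer_sizes` lists neuron counts from input to output, so the fan-in
    of dense layer i is layer_sizes[i].
    """
    report = []
    for i, fan_in in enumerate(layer_sizes[:-1]):
        bits = worst_case_accumulator_bits(fan_in, n_w_bits, n_a_bits)
        report.append((i, bits, bits <= max_bits))
    return report


# Hypothetical sizes: 784 input features, two hidden layers of 128, 9 classes.
for layer, bits, fits in check_architecture([784, 128, 128, 9], n_w_bits=3, n_a_bits=3):
    print(f"layer {layer}: {bits}-bit accumulator, fits={fits}")
```

Raising the weight and activation precision to 4 bits each pushes the first layer's worst case to 18 bits, which would violate the assumed limit; this is the kind of trade-off that QAT and pruning address.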

Neural Network Training for TFHE Compatibility

The primary goal is to train the FCNN so that it respects FHE constraints. Training itself runs on unencrypted data; to check the result before paying the cost of encrypted execution, a simulation phase reproduces the encrypted model's behavior in cleartext. This speeds up development: accuracy can be assessed and functionality verified early, without the computational overhead of actual FHE evaluation.
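The idea behind simulation can be sketched in plain Python: run the same integer arithmetic the encrypted circuit would perform, but on cleartext values, and compare it with the floating-point model. This minimal sketch assumes symmetric per-tensor quantization and a single ReLU neuron with made-up values; it is not Concrete ML's implementation. In Concrete ML itself, simulation is selected by passing `fhe="simulate"` to a compiled model's `predict` method.

```python
def quantize(values, n_bits):
    """Symmetric per-tensor quantization to signed n_bits integers (n_bits >= 2)."""
    max_abs = max(abs(v) for v in values) or 1.0
    scale = max_abs / (2 ** (n_bits - 1) - 1)
    return [round(v / scale) for v in values], scale


def float_neuron(x, w, b):
    """Reference floating-point neuron: ReLU(w . x + b)."""
    return max(0.0, sum(xi * wi for xi, wi in zip(x, w)) + b)


def simulated_neuron(x, w, b, n_bits):
    """Cleartext emulation of the integer computation an FHE circuit would run."""
    qx, sx = quantize(x, n_bits)
    qw, sw = quantize(w, n_bits)
    qb = round(b / (sx * sw))                      # bias expressed at the accumulator's scale
    acc = sum(a * c for a, c in zip(qx, qw)) + qb  # pure integer accumulation
    return max(0.0, acc * sx * sw)                 # dequantize, then apply ReLU


x, w, b = [0.5, -1.0, 0.25], [0.8, 0.3, -0.6], 0.1
for n_bits in (8, 4, 3):
    # Compare how far the integer pipeline drifts from float as precision drops.
    print(n_bits, float_neuron(x, w, b), simulated_neuron(x, w, b, n_bits))
```

Because the integer path is identical to what runs under encryption, a gap between the two outputs that grows as `n_bits` drops signals an accuracy problem before any ciphertext is produced.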

The Role of Activation Functions and Architecture

In the FCNN, each layer is fully (densely) connected to the next, so every neuron receives all outputs of the previous layer. Activation functions such as the Rectified Linear Unit (ReLU) and Sigmoid introduce nonlinearity, allowing the network to learn complex relationships in the image data. The output layer produces the classification labels corresponding to the detected medical conditions.
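A minimal cleartext sketch of such a forward pass, assuming one hidden ReLU layer, a sigmoid output layer and tiny hand-picked weights (all hypothetical, chosen only to keep the arithmetic easy to follow): each dense layer computes a weighted sum of all its inputs, and the predicted label is the index of the highest output score.

```python
import math


def dense(inputs, weights, biases):
    """Fully connected layer: every neuron sees every input (rows of `weights` are neurons)."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def relu(values):
    return [max(0.0, v) for v in values]


def sigmoid(values):
    return [1.0 / (1.0 + math.exp(-v)) for v in values]


def predict(x, params):
    """Two-layer FCNN: dense -> ReLU -> dense -> sigmoid, then pick the top-scoring label."""
    (w1, b1), (w2, b2) = params
    hidden = relu(dense(x, w1, b1))
    scores = sigmoid(dense(hidden, w2, b2))
    label = max(range(len(scores)), key=scores.__getitem__)
    return label, hidden, scores


# Hypothetical weights: 2 inputs -> 2 hidden neurons -> 2 output classes.
params = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 1.0], [-1.0, 2.0]], [0.0, -1.0]),
]
label, hidden, scores = predict([1.0, 2.0], params)
```

Here the first hidden neuron's pre-activation is negative, so ReLU zeroes it; only the second neuron contributes to the output scores.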

Concrete ML exposes the FCNN through the NeuralNetClassifier class, which follows the scikit-learn interface. Architecture parameters such as the number of layers and neurons are set as constructor arguments, which makes the model easy to configure for a specific task.
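How these two settings drive model size can be seen with a small helper, which is a hypothetical illustration rather than Concrete ML code. It assumes that `n_layers` counts every dense layer including the output layer, that all hidden layers share one width, and that the input is a flattened 28x28 single-channel image (784 features); the 9-class output is likewise an assumed example. In Concrete ML the number of layers is passed to NeuralNetClassifier as the `module__n_layers` argument; consult its documentation for how hidden-layer width is set.

```python
def fcnn_layer_shapes(n_inputs, n_hidden, n_layers, n_classes):
    """(fan_in, fan_out) of each dense layer; n_layers counts all dense layers."""
    if n_layers < 1:
        raise ValueError("an FCNN needs at least one dense layer")
    widths = [n_inputs] + [n_hidden] * (n_layers - 1) + [n_classes]
    return list(zip(widths[:-1], widths[1:]))


def count_parameters(shapes):
    """Weights plus one bias per output neuron, summed over layers."""
    return sum(fan_in * fan_out + fan_out for fan_in, fan_out in shapes)


shapes = fcnn_layer_shapes(n_inputs=784, n_hidden=128, n_layers=3, n_classes=9)
print(shapes, count_parameters(shapes))
```

The first layer dominates the parameter count because its fan-in is the full image, which is also why it tends to have the widest accumulator.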

Quantization-Aware Training (QAT) for FHE

To adapt the FCNN to FHE constraints, Quantization-Aware Training (QAT) is used. Weights and activations are restricted to a chosen low bit-width, and quantization is applied during training so that the network learns weights that remain accurate at the low precision FHE requires. Only a few parameters, mainly the bit-widths, need to be configured, and they control the balance between model complexity and performance.
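The core mechanism of QAT can be sketched on a one-weight linear model. In the forward pass the weight is fake-quantized (rounded onto a low-bit grid and scaled back); in the backward pass the rounding is treated as the identity, which is the straight-through estimator commonly used for QAT. The fixed grid scale, the 4-bit width and the toy data are assumptions made for illustration, not Concrete ML's training code.

```python
def fake_quantize(w, n_bits, scale):
    """Round w onto a signed n_bits integer grid, clip, then map back to a float."""
    q_min, q_max = -(2 ** (n_bits - 1)), 2 ** (n_bits - 1) - 1
    q = max(q_min, min(q_max, round(w / scale)))
    return q * scale


def train_qat(xs, ys, n_bits=4, scale=0.25, lr=0.05, steps=50):
    """Fit y = w * x by gradient descent, always using the quantized weight."""
    w = 0.0  # latent full-precision weight, updated by the optimizer
    for _ in range(steps):
        w_q = fake_quantize(w, n_bits, scale)  # forward pass sees only grid values
        # d(MSE)/d(w_q); the straight-through estimator reuses it as d(MSE)/dw
        grad = sum(2 * (w_q * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= lr * grad
    return w, fake_quantize(w, n_bits, scale)


xs = [1.0, 2.0, 3.0]
ys = [0.75 * x for x in xs]  # target slope 0.75 lies exactly on the 0.25-step grid
latent_w, deployed_w = train_qat(xs, ys)
```

The latent weight settles off the grid, but training stops once its quantized value gives zero loss; only that quantized value is deployed, so the model is optimized for the precision it will actually run at.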

During training, the weights are updated to minimize the selected loss function, which combines a standard error term with a regularization term. Optimizing both keeps the model accurate while keeping it compact enough to satisfy the encryption constraints.
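As a sketch of such an objective, the function below combines binary cross-entropy (the error term) with an L2 penalty on the weights (the regularization term). Both choices, and the coefficient name `lam`, are assumptions for illustration; other losses and regularizers fit the same pattern.

```python
import math


def bce(probs, labels, eps=1e-12):
    """Mean binary cross-entropy between predicted probabilities and 0/1 labels."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(probs)


def regularized_loss(probs, labels, weights, lam=1e-3):
    """Error term plus lam * sum of squared weights."""
    return bce(probs, labels) + lam * sum(w * w for w in weights)


loss = regularized_loss([0.5, 0.5], [1, 0], weights=[3.0, 4.0], lam=0.1)
```

Larger `lam` pushes weights toward zero, which tends to keep their magnitudes small and eases both quantization and the accumulator bounds.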

Pruning as a Strategic Optimization Tool

Pruning is another key step in making the FCNN FHE-compatible. Weights identified as non-essential are set to zero, which reduces the number of terms in each neuron's weighted sum. This bounds the bit-width of the accumulators, so that computations do not overflow the precision available under FHE, and it lowers the cost of encrypted evaluation while still allowing the model to learn effectively from the dataset.
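A minimal sketch of magnitude pruning, assuming integer-quantized weights, unsigned n_a-bit activations and a fixed budget of non-zero weights per neuron (the `keep` parameter, a hypothetical knob). Zeroing the smallest-magnitude weights shrinks the largest value the neuron's accumulator can reach, and with it the bit-width the FHE circuit must support.

```python
def prune_neuron(weights, keep):
    """Zero all but the `keep` largest-magnitude integer weights of one neuron."""
    if keep >= len(weights):
        return list(weights)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    kept = set(order[:keep])
    return [w if i in kept else 0 for i, w in enumerate(weights)]


def accumulator_bits(weights, n_a_bits):
    """Signed bit-width sufficient for sum(w_i * x_i) with x_i in [0, 2**n_a_bits - 1]."""
    max_x = 2 ** n_a_bits - 1
    bound = sum(abs(w) for w in weights) * max_x
    return bound.bit_length() + 1  # +1 for the sign bit


weights = [3, -4, 1, 0, 2, -1, 4, -2]  # hypothetical 4-bit quantized weights
pruned = prune_neuron(weights, keep=2)
print(accumulator_bits(weights, 4), accumulator_bits(pruned, 4))
```

In practice pruning is interleaved with training so the remaining weights can compensate for the removed ones.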

Secure Layer Computation via TFHE

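Every layer of the FCNN is designed to compute over encrypted data. Inputs are quantized into low-bit-width integers and encrypted with TFHE's Learning With Errors (LWE) scheme. Weights and biases are quantized to low-bit-width integers as well; in Concrete ML's setting they are applied by the server as cleartext constants, while every intermediate activation, being derived from the encrypted input, remains encrypted. Linear operations are evaluated homomorphically, and nonlinear activation functions are evaluated through TFHE's programmable bootstrapping, so confidentiality is preserved throughout.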
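To illustrate how LWE ciphertexts support the linear part of a neuron, here is a toy, deliberately insecure LWE sketch in pure Python. The parameters (modulus `Q`, plaintext modulus `P`, dimension `N`, noise range) are illustrative assumptions far from TFHE's real parameters, and the code is not Concrete's implementation. It encrypts small integers and computes a dot product with cleartext integer weights directly on the ciphertexts; decryption succeeds as long as the accumulated noise stays below `DELTA / 2`.

```python
import random

# Toy parameters, far too small to be secure.
Q = 2 ** 16     # ciphertext modulus (a discretized torus)
P = 16          # plaintext modulus: messages are integers mod 16
DELTA = Q // P  # scaling factor that places the message in the high bits
N = 32          # LWE dimension


def keygen(rng):
    return [rng.randrange(2) for _ in range(N)]  # binary secret key


def encrypt(m, s, rng):
    a = [rng.randrange(Q) for _ in range(N)]     # uniformly random mask
    e = rng.randint(-2, 2)                       # small noise
    b = (sum(ai * si for ai, si in zip(a, s)) + DELTA * (m % P) + e) % Q
    return a, b


def decrypt(ct, s):
    a, b = ct
    phase = (b - sum(ai * si for ai, si in zip(a, s))) % Q  # DELTA*m + e (mod Q)
    return ((phase + DELTA // 2) // DELTA) % P              # round away the noise


def dot_cleartext_weights(cts, weights):
    """Homomorphic sum_i w_i * Enc(m_i): scale and add ciphertexts component-wise."""
    acc_a, acc_b = [0] * N, 0
    for (a, b), w in zip(cts, weights):
        acc_a = [(x + w * y) % Q for x, y in zip(acc_a, a)]
        acc_b = (acc_b + w * b) % Q
    return acc_a, acc_b


rng = random.Random(0)
s = keygen(rng)
cts = [encrypt(m, s, rng) for m in [1, 2, 3]]
result = decrypt(dot_cleartext_weights(cts, [2, 1, 1]), s)  # 2*1 + 1*2 + 1*3 = 7
```

Each weighted addition multiplies the noise by the weight's magnitude, which is one reason low-bit weights matter; TFHE's bootstrapping resets the noise, a step this toy omits.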

As a result, predictions are computed on encrypted data without the server ever seeing the plaintext, which protects the confidentiality of patient information.

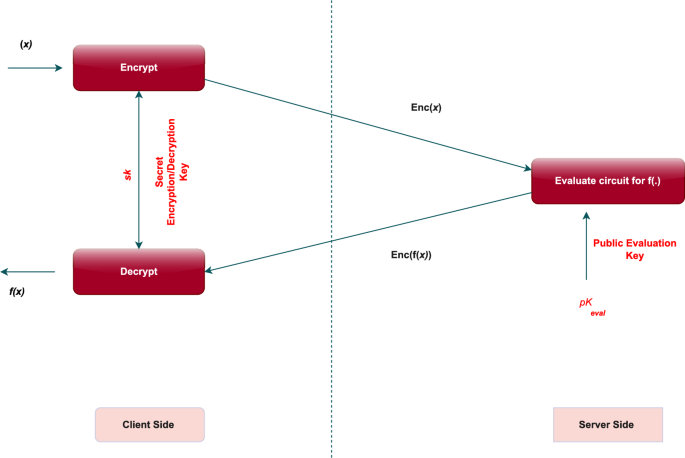

Compilation and Inference Process

Deploying the FCNN begins with compilation. In this phase, the trained, quantized model is converted into an FHE circuit that can execute directly on encrypted data. This makes it possible to produce accurate predictions without revealing sensitive information.
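One part of compilation can be sketched directly. TFHE evaluates a nonlinear function on an encrypted integer through programmable bootstrapping, which applies a lookup table, so the function has to be tabulated over every value its input can take. The sketch below builds such a table for a quantized sigmoid, assuming a signed 4-bit input with scale 0.5 and an unsigned 3-bit output; these bit-widths and scales are hypothetical. In Concrete ML, compilation of a built-in model is triggered by calling its `compile` method with a representative input set, which is used to calibrate value ranges.

```python
import math


def build_lut(fn, in_bits, in_scale, out_bits):
    """Tabulate fn over every signed in_bits input code, requantized to unsigned out_bits."""
    out_max = 2 ** out_bits - 1
    table = {}
    for q in range(-(2 ** (in_bits - 1)), 2 ** (in_bits - 1)):
        y = fn(q * in_scale)  # dequantize the input code, apply the activation
        # Requantize the output; the clip assumes fn maps into [0, 1].
        table[q] = max(0, min(out_max, round(y * out_max)))
    return table


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


lut = build_lut(sigmoid, in_bits=4, in_scale=0.5, out_bits=3)
activation_code = lut[3]  # evaluating the activation is now a single table lookup
```

The table has one entry per possible input code, which is why keeping inputs to nonlinear functions at low bit-width keeps bootstrapping tractable.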

Inference then proceeds in several steps. The client quantizes and encrypts a medical image and sends the ciphertext to the server; the server evaluates the FHE-compatible model on the encrypted input and returns an encrypted result; the client, which alone holds the secret key, decrypts and dequantizes the prediction. Patient data therefore stays confidential at every stage of the pipeline.
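The division of roles can be sketched with toy LWE ciphertexts. The `Client` holds the secret key, quantizes and encrypts pixel values, and later decrypts; the `Server` holds only cleartext integer weights and ciphertexts, and computes one neuron's weighted sum without seeing any pixel. Everything here (class names, the 2-bit input quantization, the tiny insecure parameters) is a hypothetical illustration; Concrete ML provides its own deployment classes, `FHEModelClient` and `FHEModelServer`, for this split.

```python
import random

# Toy, insecure parameters chosen only for readability.
Q = 2 ** 16     # ciphertext modulus
P = 32          # plaintext modulus; the weighted sum must stay below P
DELTA = Q // P  # scaling factor placing the message in the high bits
N = 16          # LWE dimension


class Client:
    """Holds the secret key; the only party that can read inputs or results."""

    def __init__(self, seed=0, n_bits=2):
        self.rng = random.Random(seed)
        self.n_bits = n_bits
        self.secret = [self.rng.randrange(2) for _ in range(N)]  # never leaves the client

    def quantize(self, pixels):
        """Map intensities in [0, 1] to unsigned n_bits integers."""
        levels = 2 ** self.n_bits - 1
        return [round(min(max(p, 0.0), 1.0) * levels) for p in pixels]

    def dequantize(self, value):
        """Undo the input scaling on the decrypted integer result."""
        return value / (2 ** self.n_bits - 1)

    def encrypt(self, m):
        a = [self.rng.randrange(Q) for _ in range(N)]
        e = self.rng.randint(-2, 2)
        b = (sum(x * y for x, y in zip(a, self.secret)) + DELTA * (m % P) + e) % Q
        return a, b

    def decrypt(self, ct):
        a, b = ct
        phase = (b - sum(x * y for x, y in zip(a, self.secret))) % Q
        return ((phase + DELTA // 2) // DELTA) % P


class Server:
    """Holds cleartext integer weights; sees only ciphertexts."""

    def __init__(self, weights):
        self.weights = weights

    def evaluate(self, cts):
        acc_a, acc_b = [0] * N, 0
        for w, (a, b) in zip(self.weights, cts):
            acc_a = [(x + w * y) % Q for x, y in zip(acc_a, a)]
            acc_b = (acc_b + w * b) % Q
        return acc_a, acc_b


client = Client(seed=1)
ciphertexts = [client.encrypt(m) for m in client.quantize([0.0, 0.4, 1.0])]  # sent to server
encrypted_result = Server([1, 2, 3]).evaluate(ciphertexts)                    # sent back
score = client.dequantize(client.decrypt(encrypted_result))
```

A full model would also need nonlinear activations between layers, evaluated by programmable bootstrapping on the server; the toy stops at a single linear neuron.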

With this structured approach, deploying secure models for privacy-preserving medical image analysis becomes practical, combining machine learning with strong protection of patient data. Pairing FHE with an accessible machine learning framework such as Concrete ML offers a compelling answer to privacy concerns in modern healthcare.