AI-Driven Music Generation and Transcription: Exploring Deep Learning Approaches

In recent years, artificial intelligence (AI) has transformed many fields, and music is no exception. AI-driven music generation and transcription use deep learning models to create, analyze, and transcribe music. This article examines how these technologies work, covering dataset preparation, preprocessing techniques, model architectures (specifically LSTMs, Transformers, GANs, and CNNs), training pipelines, hyperparameter optimization, and evaluation techniques.

Dataset Preparation: The MAESTRO Dataset

The quality of the training data is the foundation of many AI music projects. The MAESTRO dataset, developed by the Magenta team at Google, is a rich resource for deep learning models aimed at music transcription and generation. It contains about 200 hours of classical piano performances recorded between 2004 and 2018, with high-quality audio paired with finely aligned MIDI files that capture precise timing and detailed performance information.

Key Features

- Audio Format: The audio is provided as stereo FLAC at 44.1 kHz with 16-bit resolution, giving models high-quality sound data to train on.

- MIDI Files: Each MIDI file records note onset times, durations, velocities, and pedal data. These details let AI systems learn expressive aspects of performance, such as dynamics and timing.

- Structural Organization: The dataset is divided into training (about 80%), validation (about 10%), and test (about 10%) splits, which supports reliable evaluation of model performance.

Table 1 summarizes the structure and features of the MAESTRO dataset.
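As a quick check on the split proportions, the sketch below totals recording hours per split from a metadata CSV. It assumes the CSV distributed with MAESTRO has a `split` column and a `duration` column in seconds; verify these names against the release you download. The three sample rows are invented for illustration and are not real dataset entries.

```python
import csv
import io
from collections import defaultdict


def hours_per_split(csv_file):
    """Sum recording durations per split and convert seconds to hours.

    Assumes a 'split' column and a 'duration' column in seconds, as in the
    MAESTRO metadata CSV; check the names against your dataset version.
    """
    totals = defaultdict(float)
    for row in csv.DictReader(csv_file):
        totals[row["split"]] += float(row["duration"])
    return {split: seconds / 3600.0 for split, seconds in totals.items()}


def split_fractions(hours):
    """Express each split's hours as a fraction of the total."""
    total = sum(hours.values())
    return {split: h / total for split, h in hours.items()}


# Invented rows for illustration only; these are not real MAESTRO entries.
sample = io.StringIO(
    "title,split,duration\n"
    "Piece A,train,28800\n"
    "Piece B,validation,3600\n"
    "Piece C,test,3600\n"
)
hours = hours_per_split(sample)
print(hours)                   # {'train': 8.0, 'validation': 1.0, 'test': 1.0}
print(split_fractions(hours))  # {'train': 0.8, 'validation': 0.1, 'test': 0.1}
```

When training, it is usually preferable to use the provided split assignments rather than re-splitting at random: MAESTRO assigns each composition to a single split, so pieces in the test set are not also heard during training.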

The Importance of MIDI and Audio Pairing

Because every MAESTRO recording is paired with an aligned MIDI file, models can learn from both symbolic and acoustic data: audio-to-MIDI mappings for transcription, and MIDI-to-audio or MIDI-only modeling for generation. The MIDI also captures the timing and dynamics of real performers, so systems trained on it can learn to produce performances that closely resemble human playing, including the nuances of classical piano performance.
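To make the pairing concrete, transcription models are usually trained on frame-level targets derived from the MIDI and aligned with the audio's spectrogram frames. The sketch below builds a binary piano roll (frames by 88 keys) from notes given as hypothetical `(onset_sec, offset_sec, midi_pitch)` tuples at an assumed frame rate; reading those notes out of the actual MIDI files is left to a MIDI parsing library.

```python
def piano_roll(notes, frames_per_second, num_frames, lowest_pitch=21, num_keys=88):
    """Build a binary (frame x key) piano roll from note tuples.

    notes: iterable of (onset_sec, offset_sec, midi_pitch); this tuple format
    is an assumption for illustration. MIDI pitches 21 (A0) to 108 (C8)
    cover the 88 piano keys. Times are mapped to the nearest frame index.
    """
    roll = [[0] * num_keys for _ in range(num_frames)]
    for onset, offset, pitch in notes:
        key = pitch - lowest_pitch
        if not 0 <= key < num_keys:
            continue  # ignore pitches outside the piano range
        start = int(round(onset * frames_per_second))
        end = int(round(offset * frames_per_second))
        for frame in range(max(start, 0), min(end, num_frames)):
            roll[frame][key] = 1  # key is sounding in this frame
    return roll


# Middle C (MIDI 60) from 0.0 s to 0.5 s and E4 (MIDI 64) from 0.25 s to 0.5 s,
# at a hypothetical rate of 8 frames per second.
roll = piano_roll([(0.0, 0.5, 60), (0.25, 0.5, 64)], frames_per_second=8, num_frames=6)
print([frame[60 - 21] for frame in roll])  # [1, 1, 1, 1, 0, 0]
print([frame[64 - 21] for frame in roll])  # [0, 0, 1, 1, 0, 0]
```

In a real pipeline, `frames_per_second` would be set to the spectrogram's frame rate (sample rate divided by hop length) so that each row of the roll lines up with one spectrogram frame.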

Data Cleaning and Preprocessing Techniques

Preprocessing turns the raw MAESTRO files into a format that deep learning models can use. It involves several techniques for standardizing, cleaning, and transforming the data.

- Time Quantization: MIDI event times are mapped onto a uniform time grid so that every sample uses the same temporal resolution throughout training.

- Pitch Standardization: MIDI sequences are transposed to a common reference key, so the model learns relative pitch relationships (intervals and chord functions) rather than patterns tied to one absolute key. Patterns learned in one key then carry over to others, which helps the model generate more varied music.

- Velocity Normalization: MIDI velocities, which are integers from 0 to 127, are scaled to the range [0, 1], giving the model a consistent representation of dynamics for expressive music generation.

- Duplicate Removal: Redundant events, such as repeated notes with the same pitch and onset time, are removed so that the input data is clean.

- Audio Transformations: The raw FLAC recordings are resampled and converted to spectrograms. The Short-Time Fourier Transform (STFT) followed by a Mel filter bank produces time-frequency representations that serve as input to the audio models.
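The four MIDI steps above can be sketched on a simple note list. This is a minimal illustration, not the source's exact procedure: it assumes notes are `(onset_sec, duration_sec, pitch, velocity)` tuples, uses round-to-nearest-grid quantization (one common choice; the text does not specify its formula), and takes the piece's key as a given input because key detection is out of scope. The grid size and pitch-class parameters are hypothetical.

```python
def preprocess_notes(notes, grid=0.01, tonic_pc=0, reference_pc=0):
    """Quantize, transpose, normalize velocity, and de-duplicate notes.

    notes: list of (onset_sec, duration_sec, midi_pitch, velocity) tuples
        (an assumed representation for this sketch).
    grid: quantization step in seconds (hypothetical 10 ms default).
    tonic_pc, reference_pc: pitch classes (0 = C, ..., 11 = B) of the piece's
        key and of the reference key; the key is assumed to be known already.
    """
    # Transpose by the smallest shift (-5..+6 semitones) mapping tonic to reference.
    shift = (reference_pc - tonic_pc) % 12
    if shift > 6:
        shift -= 12

    seen = set()
    result = []
    for onset, duration, pitch, velocity in notes:
        # Time quantization: snap to the nearest multiple of `grid`
        # (rounded to 6 decimals to suppress floating-point noise).
        q_onset = round(round(onset / grid) * grid, 6)
        # Durations are quantized the same way but kept at least one grid step long.
        q_duration = max(grid, round(round(duration / grid) * grid, 6))
        new_pitch = pitch + shift          # pitch standardization
        new_velocity = velocity / 127.0    # MIDI velocity 0..127 -> [0, 1]
        # Duplicate removal: keep only the first note at a given onset and pitch.
        if (q_onset, new_pitch) in seen:
            continue
        seen.add((q_onset, new_pitch))
        result.append((q_onset, q_duration, new_pitch, new_velocity))
    return sorted(result)


# A fragment in D major (tonic pitch class 2) transposed to C (0). The two
# near-simultaneous D4 notes collapse into one after quantization.
notes = [(0.503, 0.248, 62, 100), (0.499, 0.251, 62, 90), (1.004, 0.5, 66, 127)]
print(preprocess_notes(notes, grid=0.01, tonic_pc=2, reference_pc=0))
```

Running duplicate removal after quantization is deliberate: notes that were a few milliseconds apart land on the same grid point and are then recognized as duplicates. Which duplicate to keep (here, the first one encountered) is a design choice.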

These preprocessing steps culminate in a well-structured dataset, ready for deep learning applications.
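For the audio transformations step, the STFT-plus-mel-filterbank computation can be sketched directly in NumPy. This is an illustrative implementation that assumes the recording has already been resampled (16 kHz is used here only as an example rate) and mixed down to mono; the window size, hop length, and number of mel bands are hypothetical defaults, and the mel scale follows the HTK-style formula. Libraries such as librosa and torchaudio provide tested equivalents.

```python
import numpy as np


def hz_to_mel(f):
    """HTK-style mel scale."""
    return 2595.0 * np.log10(1.0 + np.asarray(f) / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (np.asarray(m) / 2595.0) - 1.0)


def stft_magnitude(signal, n_fft=2048, hop=512):
    """Magnitude STFT with a Hann window; one row per frame."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack([signal[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    return np.abs(np.fft.rfft(frames, axis=1))  # shape (n_frames, n_fft // 2 + 1)


def mel_filterbank(sr, n_fft, n_mels=64, fmin=0.0, fmax=None):
    """Triangular filters with centers evenly spaced on the mel scale."""
    fmax = sr / 2.0 if fmax is None else fmax
    hz_points = mel_to_hz(np.linspace(hz_to_mel(fmin), hz_to_mel(fmax), n_mels + 2))
    fft_freqs = np.fft.rfftfreq(n_fft, d=1.0 / sr)
    fb = np.zeros((n_mels, len(fft_freqs)))
    for m in range(n_mels):
        left, center, right = hz_points[m], hz_points[m + 1], hz_points[m + 2]
        rising = (fft_freqs - left) / (center - left)
        falling = (right - fft_freqs) / (right - center)
        fb[m] = np.maximum(0.0, np.minimum(rising, falling))
    return fb


def log_mel_spectrogram(signal, sr, n_fft=2048, hop=512, n_mels=64):
    """Log-compressed mel power spectrogram, shape (n_frames, n_mels)."""
    power = stft_magnitude(signal, n_fft, hop) ** 2
    mel = power @ mel_filterbank(sr, n_fft, n_mels).T
    return np.log(mel + 1e-10)  # small offset avoids log(0)


# One second of a 440 Hz sine at an assumed 16 kHz sample rate.
sr = 16000
t = np.arange(sr) / sr
spec = log_mel_spectrogram(np.sin(2 * np.pi * 440.0 * t), sr)
print(spec.shape)  # (28, 64)
```

The mel filterbank compresses the linear FFT bins into bands that are narrow at low frequencies and wide at high frequencies, roughly matching pitch perception, and the log compresses the large dynamic range of piano recordings.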

Deep Learning Architectures for Music Generation and Analysis

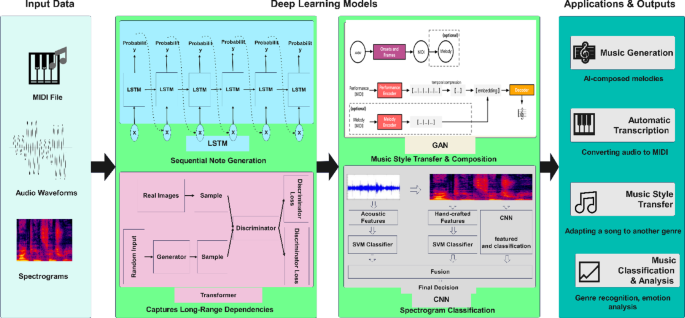

Several deep learning architectures are used for music generation and analysis, each suited to particular tasks.

LSTM Networks

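Long Short-Term Memory (LSTM) networks are recurrent networks designed for sequential data, which makes them well suited to melody generation and to modeling polyphonic note sequences. At each time step, input, forget, and output gates control what the network's memory cell keeps, updates, and exposes, so context such as the current key or a recurring rhythmic figure can persist across many steps. This gated memory mitigates the vanishing-gradient problem of plain RNNs and helps LSTMs produce coherent musical output.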
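The gating described above can be written out as a single LSTM time step. The NumPy sketch below uses the standard gate equations with randomly initialized (untrained) weights and a hypothetical one-hot pitch-class encoding of notes; a real generator would learn the weights and add an output layer that maps the hidden state to next-note probabilities.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    W: (4H, input_size), U: (4H, H), b: (4H,), with gate blocks stacked in
    the order input, forget, candidate, output (a convention chosen here).
    """
    z = W @ x + U @ h_prev + b
    i, f, g, o = np.split(z, 4)
    i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)  # gates in (0, 1)
    g = np.tanh(g)                                 # candidate memory content
    c = f * c_prev + i * g                         # keep part of old memory, write new
    h = o * np.tanh(c)                             # expose a gated view of memory
    return h, c


def run_lstm(sequence, hidden_size, seed=0):
    """Run an LSTM with random (untrained) weights over a (T, input_size) sequence."""
    rng = np.random.default_rng(seed)
    input_size = sequence.shape[1]
    W = rng.normal(0.0, 0.1, (4 * hidden_size, input_size))
    U = rng.normal(0.0, 0.1, (4 * hidden_size, hidden_size))
    b = np.zeros(4 * hidden_size)
    h, c = np.zeros(hidden_size), np.zeros(hidden_size)
    for x in sequence:
        h, c = lstm_step(x, h, c, W, U, b)
    return h, c


# Hypothetical encoding: four notes (C, E, G, C) as one-hot pitch-class vectors.
notes = np.eye(12)[[0, 4, 7, 0]]
h, c = run_lstm(notes, hidden_size=8)
print(h.shape, c.shape)  # (8,) (8,)
```

The cell state `c` is updated additively (`f * c_prev + i * g`) rather than being squashed through a nonlinearity at every step, which is what lets information and gradients survive over long sequences.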

Transformers

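Transformers marked a significant advance over recurrent (RNN-based) approaches. Instead of passing information step by step through a hidden state, self-attention lets every position in a sequence attend directly to every other position, so dependencies between distant events, such as a motif and its return many bars later, are captured within a single layer. Because attention itself is order-agnostic, the position of each event is supplied through positional encodings, and all positions can be processed in parallel during training.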
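The core of a Transformer layer is scaled dot-product attention, \( \mathrm{softmax}(QK^\top/\sqrt{d_k})\,V \). The single-head NumPy sketch below adds a causal mask so each note event can attend only to itself and earlier events, as in left-to-right music generation. The random embeddings and projection matrices are placeholders for learned parameters, and positional encodings, multiple heads, and feed-forward sublayers are omitted.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def causal_self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention with a causal mask.

    X: (seq_len, d_model) embeddings of note events.
    """
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)                        # (seq_len, seq_len)
    future = np.triu(np.ones_like(scores, dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)             # block attention to later events
    weights = softmax(scores, axis=-1)                     # each row sums to 1
    return weights @ V, weights


rng = np.random.default_rng(0)
seq_len, d_model, d_k = 5, 16, 8
X = rng.normal(size=(seq_len, d_model))  # placeholder note-event embeddings
Wq, Wk, Wv = (rng.normal(scale=d_model ** -0.5, size=(d_model, d_k)) for _ in range(3))
out, weights = causal_self_attention(X, Wq, Wk, Wv)
print(out.shape)  # (5, 8)
```

Every row of `weights` shows how much one event draws on each earlier event; unlike an RNN, the last event can weight the first one directly, however long the sequence.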

Generative Adversarial Networks (GANs)

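GANs approach music composition through adversarial learning. A generator produces candidate music from random noise, while a discriminator learns to tell generated samples from real ones. The two networks are trained in alternation: as the discriminator improves, the generator is pushed to produce samples it can no longer reject, moving its output closer to human-composed music.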
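The adversarial game can be expressed as two loss functions computed from the discriminator's raw outputs (logits). The sketch below uses binary cross-entropy and the widely used non-saturating generator loss; it covers only the objectives and assumes generator and discriminator networks defined elsewhere that are updated alternately using these values.

```python
import math


def bce_with_logits(logit, target):
    """Binary cross-entropy on a raw logit, in a numerically stable form."""
    return max(logit, 0.0) - logit * target + math.log1p(math.exp(-abs(logit)))


def discriminator_loss(real_logits, fake_logits):
    """D is trained to output 'real' (1) for training music and 'fake' (0) for generated music."""
    real = sum(bce_with_logits(x, 1.0) for x in real_logits) / len(real_logits)
    fake = sum(bce_with_logits(x, 0.0) for x in fake_logits) / len(fake_logits)
    return real + fake


def generator_loss(fake_logits):
    """Non-saturating generator loss: G is rewarded when D labels its samples as real."""
    return sum(bce_with_logits(x, 1.0) for x in fake_logits) / len(fake_logits)


# Hypothetical discriminator outputs (logits) for two real and two generated excerpts.
print(discriminator_loss([0.0, 0.0], [0.0, 0.0]))  # 2 ln 2, about 1.386: D is guessing
print(generator_loss([-3.0, -2.5]))                 # large: D confidently rejects the fakes
```

The non-saturating form gives the generator strong gradients precisely when the discriminator confidently rejects its samples, which is typically the situation early in training.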

Convolutional Neural Networks (CNNs)

CNNs are well suited to spectrograms, which makes them effective for tasks such as chord recognition, transcription, and genre classification. Their convolutional filters slide across the time-frequency plane and respond to local patterns such as note onsets and harmonic partials; stacking layers combines these into increasingly abstract, hierarchical features.
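A single convolutional block (filter, ReLU nonlinearity, max-pooling) can be sketched in NumPy. The hand-set 2×1 kernel here is a hypothetical "onset detector" that responds when energy in a frequency band rises from one frame to the next; in a trained CNN such filters are learned, and many of them are stacked in layers.

```python
import numpy as np


def conv2d_valid(x, kernel):
    """'Valid' 2-D cross-correlation, the operation deep learning libraries call convolution."""
    kh, kw = kernel.shape
    out = np.empty((x.shape[0] - kh + 1, x.shape[1] - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool(x, size=2):
    """Non-overlapping max-pooling; rows/columns that do not fill a window are dropped."""
    h, w = x.shape[0] // size, x.shape[1] // size
    return x[:h * size, :w * size].reshape(h, size, w, size).max(axis=(1, 3))


def conv_block(spec, kernel):
    """Convolution -> ReLU -> 2x2 max-pooling on a (time, frequency) spectrogram."""
    return max_pool(np.maximum(conv2d_valid(spec, kernel), 0.0))


# Toy spectrogram: 8 frames x 4 frequency bands; a note starts in band 2 at frame 3.
spec = np.zeros((8, 4))
spec[3:, 2] = 1.0
onset_kernel = np.array([[-1.0], [1.0]])  # responds when energy rises between consecutive frames
features = conv_block(spec, onset_kernel)
print(features)  # one nonzero entry, at row 1, column 1
```

The sustained part of the note produces no response because consecutive frames are equal; only the onset survives, and pooling then reduces the map's resolution while keeping the detection.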

Training Pipeline and Hyperparameter Tuning

Training Pipeline

Establishing a robust training pipeline involves several critical steps:

- Data Preprocessing: The first stage consists of normalizing MIDI sequences and audio spectrograms.

- Data Splitting: Following preprocessing, the dataset is split into training (80%), validation (10%), and testing (10%) subsets.

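- Optimization: Models are trained with gradient-based optimization, most often with the Adam optimizer, whose per-parameter adaptive step sizes make training more stable and less sensitive to the choice of learning rate than plain stochastic gradient descent.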
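For reference, Adam keeps exponential moving averages of each parameter's gradient and squared gradient, corrects their initial bias toward zero, and divides one by the square root of the other, giving each parameter its own effective step size. The sketch below applies this update to a single scalar with a toy quadratic loss; the defaults beta1 = 0.9, beta2 = 0.999, and eps = 1e-8 are the commonly used values, while the learning rate of 0.1 is chosen only so the toy example converges quickly.

```python
import math


def adam_minimize(grad_fn, x0, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """Minimize a scalar function with Adam, given a function returning its gradient."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad_fn(x)
        m = beta1 * m + (1 - beta1) * g      # moving average of gradients
        v = beta2 * v + (1 - beta2) * g * g  # moving average of squared gradients
        m_hat = m / (1 - beta1 ** t)         # correct the bias from zero initialization
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)  # per-parameter scaled step
    return x


# Toy loss (x - 3)^2 with gradient 2(x - 3). In a real pipeline the same update
# is applied to every network weight, with gradients from backpropagation.
x_opt = adam_minimize(lambda x: 2.0 * (x - 3.0), x0=0.0, lr=0.1, steps=500)
print(x_opt)  # close to 3.0
```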

Hyperparameter Tuning

Hyperparameter tuning has a large effect on model performance. It involves searching over values such as the learning rate, batch size, and sequence length, as well as regularization settings:

- Learning rates typically range from \(10^{-4}\) to \(10^{-3}\).

- Batch sizes often span from 32 to 128.

- Regularization techniques, such as dropout (with rates between 0.2 and 0.5) and L2 weight decay, help prevent overfitting.
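One simple way to explore the ranges above is random search. The sketch below samples configurations (the learning rate log-uniformly, since its effect is multiplicative) and keeps the one with the lowest validation loss. The `evaluate` callback, the weight-decay range, and the toy objective are hypothetical stand-ins for real training runs.

```python
import math
import random


def sample_config(rng):
    """Draw one configuration from the ranges discussed above.

    The learning rate is sampled log-uniformly in [1e-4, 1e-3]; the
    weight-decay range [1e-6, 1e-4] is a hypothetical choice for illustration.
    """
    return {
        "learning_rate": 10 ** rng.uniform(-4, -3),
        "batch_size": rng.choice([32, 64, 128]),
        "dropout": rng.uniform(0.2, 0.5),
        "weight_decay": 10 ** rng.uniform(-6, -4),
    }


def random_search(evaluate, trials=20, seed=0):
    """Return the sampled configuration with the lowest validation loss.

    evaluate(config) is a hypothetical callback that trains a model with the
    configuration and returns its validation loss.
    """
    rng = random.Random(seed)
    best_config, best_loss = None, math.inf
    for _ in range(trials):
        config = sample_config(rng)
        loss = evaluate(config)
        if loss < best_loss:
            best_config, best_loss = config, loss
    return best_config, best_loss


def toy_evaluate(config):
    """Stand-in objective (not a real training run) favoring lr = 3e-4 and dropout = 0.3."""
    return abs(math.log10(config["learning_rate"]) - math.log10(3e-4)) + abs(config["dropout"] - 0.3)


best, loss = random_search(toy_evaluate, trials=50)
print(best, loss)
```

Random search is a reasonable default when only a few hyperparameters matter much, because it tries many distinct values of each one; grid search or Bayesian optimization are common alternatives.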

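Training is monitored through validation loss and accuracy, often with early stopping: training halts once the validation loss has not improved for a set number of epochs, and the best-performing checkpoint is kept. This limits overfitting and avoids wasted computation.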
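Early stopping with a patience counter can be sketched as a small helper. The patience value and the loss sequence below are hypothetical; in a real training loop, the branch that records a new best loss is where the model checkpoint would be saved.

```python
class EarlyStopping:
    """Signal a stop when validation loss has not improved by more than
    `min_delta` for `patience` consecutive epochs; remember the best epoch."""

    def __init__(self, patience=5, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss, self.best_epoch = val_loss, epoch
            self.bad_epochs = 0  # improvement: a checkpoint would be saved here
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Hypothetical validation losses: improvement stalls after epoch 3.
losses = [1.20, 0.95, 0.81, 0.78, 0.79, 0.80, 0.78, 0.82, 0.85]
stopper = EarlyStopping(patience=3)
for epoch, loss in enumerate(losses):
    if stopper.step(epoch, loss):
        break
print(epoch, stopper.best_epoch, stopper.best_loss)  # 6 3 0.78
```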

Evaluation Metrics for AI-Generated Music

When evaluating AI-generated music, both quantitative and qualitative assessments come into play. Unlike many ML tasks, music generation has no single correct output to compare against, so evaluation must consider harmonic structure, temporal coherence, and emotional resonance.

Objective Metrics

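- Perplexity (PPL): The exponential of the average negative log-likelihood per token on held-out sequences, \( \mathrm{PPL} = \exp\left(-\frac{1}{N}\sum_{i=1}^{N}\log p(x_i \mid x_{<i})\right) \). Lower perplexity means the model predicts real music sequences more accurately.

- Pitch Class Histogram (PCH): The distribution of the 12 pitch classes (C, C♯, ..., B) in a piece. Comparing the histogram of generated music with that of real music shows whether the output follows similar tonal patterns.

- Rhythmic Entropy: The entropy of the distribution of note durations, which measures rhythmic variety. Very low values indicate monotonous rhythms.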
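The three objective metrics above are short computations once model probabilities and note lists are available. In this sketch, the per-token probabilities, pitches, and durations are hypothetical inputs; perplexity uses natural logarithms and entropy is measured in bits. In practice, the pitch class histogram of generated music would be compared with that of real music using a distance measure of your choice.

```python
import math
from collections import Counter


def perplexity(token_probs):
    """exp of the mean negative log-probability that a model assigned to each
    actual token of a held-out sequence."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


def pitch_class_histogram(pitches):
    """Normalized 12-bin histogram of MIDI pitches modulo 12 (0 = C)."""
    counts = Counter(p % 12 for p in pitches)
    return [counts[pc] / len(pitches) for pc in range(12)]


def rhythmic_entropy(durations):
    """Shannon entropy, in bits, of the distribution of note durations."""
    counts = Counter(durations)
    n = len(durations)
    return sum((c / n) * math.log2(n / c) for c in counts.values())


# A model that spreads probability evenly over 4 choices has perplexity 4.
print(perplexity([0.25, 0.25, 0.25, 0.25]))     # approximately 4.0
print(pitch_class_histogram([60, 64, 67, 72]))  # C major triad: mass on C, E, G
print(rhythmic_entropy([0.5, 0.5, 0.5, 0.5]))   # 0.0: a single repeated duration
print(rhythmic_entropy([0.25, 0.25, 0.5, 1.0])) # 1.5
```

The first example gives a useful intuition: perplexity can be read as the effective number of equally likely choices the model is hesitating between at each step.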

Subjective Metrics

Human judgment remains essential in music evaluation. Listening tests gauge how well AI-generated compositions resonate emotionally with listeners. The Mean Opinion Score (MOS) averages listener ratings, typically on a 1-to-5 scale, for attributes such as coherence and expressiveness.
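Because MOS values come from a limited number of listeners, they are usually reported with a confidence interval. The sketch below uses invented ratings and a normal-approximation 95% interval; with small listener panels, a Student's t critical value in place of 1.96 gives a more accurate interval.

```python
import math
import statistics


def mean_opinion_score(ratings, z=1.96):
    """Return the MOS and an approximate 95% confidence interval.

    Uses the normal approximation (z = 1.96); for small listener panels a
    Student's t critical value would be more appropriate.
    """
    mos = statistics.fmean(ratings)
    sem = statistics.stdev(ratings) / math.sqrt(len(ratings))  # standard error of the mean
    return mos, (mos - z * sem, mos + z * sem)


# Hypothetical 1-5 coherence ratings for one generated piece from eight listeners.
mos, (low, high) = mean_opinion_score([4, 3, 5, 4, 4, 3, 5, 4])
print(mos, round(low, 2), round(high, 2))  # 4.0 3.48 4.52
```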

The Turing Test Success Rate measures how often listeners mistake AI-generated compositions for human ones. Higher rates indicate more realistic AI-generated music.
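The success rate itself is a simple proportion, but with small listener panels it helps to check whether listeners' judgments differ from guessing. The sketch below, using invented counts, computes the rate and a one-sided exact binomial test of whether listeners identified AI excerpts more often than chance. The 50% chance level assumes a balanced two-way choice (human or AI) per excerpt.

```python
from math import comb


def turing_success_rate(judged_human, num_ai_excerpts):
    """Fraction of AI-generated excerpts that listeners labeled as human-made."""
    return judged_human / num_ai_excerpts


def p_value_above_chance(correct, trials, chance=0.5):
    """One-sided exact binomial test: P(X >= correct) for X ~ Binomial(trials, chance).

    A small value means listeners identified the excerpts' origin more often
    than guessing would explain.
    """
    return sum(
        comb(trials, k) * chance ** k * (1 - chance) ** (trials - k)
        for k in range(correct, trials + 1)
    )


# Hypothetical study: 40 AI excerpts, 18 of them labeled "human" by listeners,
# so listeners correctly identified the other 22 as AI-generated.
rate = turing_success_rate(18, 40)
p = p_value_above_chance(40 - 18, 40)
print(rate)  # 0.45
print(p)     # a large value here means no clear evidence listeners beat chance
```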

By combining these deep learning methods with a richly annotated dataset like MAESTRO, researchers continue to advance AI music generation and transcription. This intersection of technology and art is steadily expanding what AI can contribute to the creative landscape.