Mamba-based ViT Network for Mural Image Segmentation: A Deep Dive

Introduction

In recent years, image segmentation has advanced considerably, and Vision Transformers (ViT) have become a common choice for complex visual tasks. This article explores a Mamba-based ViT network designed specifically for mural image segmentation. Its architecture combines a hybrid encoding layer with a multi-level pyramid spatial-convolution (SCconv) decoding layer: the encoder extracts image features, and the decoder reconstructs them into segmentation masks.

Overview of the Proposed Architecture

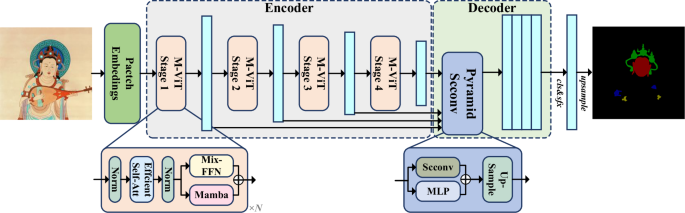

The overall structure of our mural image segmentation network comprises two main components: the M-ViT encoder and the Pyramid SCconv decoder.

Architecture Illustration

As depicted in Figure 1, the architecture begins with feature extraction through the M-ViT units, followed by reconstruction via the Pyramid SCconv modules.

Encoding Phase

In the encoding phase, each input image is partitioned into patches, which are flattened into a linear sequence of tokens. The encoder then applies four downsampling stages, each containing N M-ViT units. This hierarchy produces feature maps at four resolutions, so the network captures semantic features at several scales.
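
The patch partitioning and the four-stage hierarchy can be illustrated with a minimal NumPy sketch. The 224x224 input size, the patch size of 4, and the per-stage strides (4, 8, 16, 32) are assumptions chosen for illustration; the source does not state them.

```python
import numpy as np

def patchify(image: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W, C) image into a sequence of flattened patches."""
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image must tile evenly"
    # (H/p, p, W/p, p, C) -> (H/p, W/p, p, p, C) -> (N, p*p*C)
    grid = image.reshape(h // patch, patch, w // patch, patch, c)
    grid = grid.transpose(0, 2, 1, 3, 4)
    return grid.reshape(-1, patch * patch * c)

# Hypothetical input: a 224x224 RGB image cut into 4x4 patches.
image = np.zeros((224, 224, 3), dtype=np.float32)
tokens = patchify(image, patch=4)  # shape (3136, 48)

# Assumed total stride of each of the four stages (not given in the source).
STRIDES = (4, 8, 16, 32)
stage_shapes = [(224 // s, 224 // s) for s in STRIDES]
# [(56, 56), (28, 28), (14, 14), (7, 7)]
```

Under these assumptions, each stage halves the spatial resolution of the previous one, which is what gives the decoder four feature maps of different scale to work with.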

Decoding Phase

Once feature extraction is complete, the Pyramid SCconv decoder performs convolutional reconstruction on the intermediate outputs from all four scales. This multi-level approach integrates information across the spatial and channel dimensions and brings the features to a unified scale suitable for pixel-wise classification, which yields the final mask.
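
The final steps of decoding (bringing four scales to one resolution, then classifying each pixel) can be sketched as follows. This sketch deliberately replaces the SCconv reconstruction with plain nearest-neighbour upsampling and a 1x1 projection, so it shows only the data flow, not the authors' decoder. The channel widths, spatial sizes, and class count are hypothetical.

```python
import numpy as np

def upsample_nearest(feat: np.ndarray, size: int) -> np.ndarray:
    """Nearest-neighbour upsample of a (C, H, W) map to (C, size, size)."""
    c, h, w = feat.shape
    assert size % h == 0 and size % w == 0
    return feat.repeat(size // h, axis=1).repeat(size // w, axis=2)

rng = np.random.default_rng(0)

# Hypothetical encoder outputs: (channels, side length) at four scales.
specs = [(32, 56), (64, 28), (160, 14), (256, 7)]
feats = [rng.standard_normal((c, s, s)) for c, s in specs]

# Bring every scale to the finest resolution and stack along channels.
fused = np.concatenate([upsample_nearest(f, 56) for f in feats], axis=0)

# Pixel-wise classification: a 1x1 convolution is a per-pixel matrix product.
num_classes = 5  # hypothetical
w_cls = rng.standard_normal((num_classes, fused.shape[0])) * 0.01
logits = np.einsum("kc,chw->khw", w_cls, fused)  # (num_classes, 56, 56)
mask = logits.argmax(axis=0)                     # (56, 56) class index per pixel
```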

M-ViT Encoder

The core of the encoder is the M-ViT unit, which produces the feature maps that semantic segmentation depends on. As seen in Figure 2, the M-ViT unit comprises three modules: Efficient Self-Attention, and a dual-stream branch that pairs Mix-FFN with the Mamba module.

Efficient Self-Attention

At each encoding stage, the feature map passes through the Efficient Self-Attention module, which models long-range dependencies at reduced computational cost. By employing a linear computation approach, it achieves performance similar to standard dot-product attention at significantly lower cost.
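
The text does not spell out how the cost reduction is achieved. One common scheme, used by SegFormer-style encoders that share the "Efficient Self-Attention" name, shortens the key/value sequence by a spatial reduction ratio before attention. The sketch below assumes that scheme and swaps the learned reduction for simple average pooling; the function name, the ratio `r`, and all shapes are illustrative.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def reduced_attention(x, wq, wk, wv, h, w, r):
    """Single-head attention with keys/values taken from an r x r pooled grid.

    x holds h*w tokens of width d in row-major order, shape (h*w, d).
    """
    d = x.shape[1]
    q = x @ wq                                    # (N, d)
    # Average-pool tokens on the 2-D grid: (h, w, d) -> (h/r, w/r, d).
    grid = x.reshape(h // r, r, w // r, r, d).mean(axis=(1, 3))
    kv = grid.reshape(-1, d)                      # (N / r^2, d)
    k, v = kv @ wk, kv @ wv
    attn = softmax(q @ k.T / np.sqrt(d))          # (N, N / r^2)
    return attn @ v                               # (N, d)

rng = np.random.default_rng(0)
h = w = 8
d = 16
x = rng.standard_normal((h * w, d))
wq, wk, wv = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(3))

# 64 queries attend to only 4 pooled key/value tokens.
out = reduced_attention(x, wq, wk, wv, h, w, r=4)
```

With N tokens, the attention matrix has N x N/r² entries instead of N x N, so the cost of the attention step drops by a factor of r². Setting `r=1` recovers ordinary dot-product attention.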

Mix-FFN and Mamba Module

The dual-branch structure pairs two modules. The Mix-FFN module incorporates positional information and strengthens nonlinear mapping through GELU activations. The Mamba module processes the features as a serialized sequence of discrete tokens, which provides context representation at low computational cost.
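
To make the Mamba branch more concrete, here is a stripped-down sketch of the selective state-space recurrence that Mamba-style layers are built around: the step size and the input/output projections depend on the current token, and a hidden state carries context along the sequence. It omits the convolution, gating, and hardware-aware scan of a full Mamba block, and every name and shape is an assumption for illustration rather than the authors' module.

```python
import numpy as np

def softplus(z):
    return np.logaddexp(0.0, z)

def selective_scan(x, A, w_delta, w_B, w_C):
    """Sequential selective SSM.

    x: (L, d) token sequence; A: (d, n) with negative entries;
    w_delta: (d, d); w_B, w_C: (d, n).
    """
    L, d = x.shape
    n = A.shape[1]
    delta = softplus(x @ w_delta)  # (L, d) positive, input-dependent step sizes
    B = x @ w_B                    # (L, n) input-dependent input projection
    C = x @ w_C                    # (L, n) input-dependent output projection
    h = np.zeros((d, n))
    y = np.empty((L, d))
    for t in range(L):
        decay = np.exp(delta[t][:, None] * A)  # (d, n), each entry in (0, 1)
        h = decay * h + (delta[t][:, None] * B[t][None, :]) * x[t][:, None]
        y[t] = h @ C[t]
    return y

rng = np.random.default_rng(0)
L, d, n = 12, 4, 8
x = rng.standard_normal((L, d))
A = -np.exp(rng.standard_normal((d, n)))
w_delta = rng.standard_normal((d, d)) * 0.1
w_B = rng.standard_normal((d, n)) * 0.1
w_C = rng.standard_normal((d, n)) * 0.1
y = selective_scan(x, A, w_delta, w_B, w_C)  # (12, 4)
```

The loop runs once per token, so the cost grows linearly with sequence length, in contrast to the quadratic cost of full self-attention.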

Pyramid SCconv Decoder

Transitioning to the decoding phase, the Pyramid SCconv Decoder aims to extract high-quality segmentation results from the encoded feature maps.

Structure and Function

Each SCconv module includes two components: a spatially separable gating module (SSGM) that manages spatial information and a channel-separable convolution module (CSCM) that handles redundancy across channels. As shown in Figure 3, the architecture supports multi-scale feature fusion and upsampling decoding.

Feature Processing

A given two-dimensional feature map is first normalized, which stabilizes training. The SSGM then computes importance weights that suppress non-essential information and reduce redundancy, improving the quality of the output segmentation.
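
The normalize-then-gate idea can be sketched as below. The exact SSGM formulation is not given here, so this is a simplified hard gate loosely modelled on the spatial unit of the SCConv design: per-channel normalization with a learnable scale `gamma`, importance weights derived from `gamma`, and a sigmoid score thresholded at 0.5. The function name, threshold, and shapes are assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def spatial_gate(x, gamma, beta, threshold=0.5, eps=1e-5):
    """Split a (C, H, W) map into informative and redundant parts.

    gamma, beta: (C,) scale and shift of the normalization (gamma > 0 assumed).
    """
    mean = x.mean(axis=(1, 2), keepdims=True)
    std = x.std(axis=(1, 2), keepdims=True)
    x_norm = gamma[:, None, None] * (x - mean) / (std + eps) + beta[:, None, None]
    w = gamma / gamma.sum()                     # per-channel importance weight
    score = sigmoid(w[:, None, None] * x_norm)  # (C, H, W), values in (0, 1)
    keep = score >= threshold
    informative = np.where(keep, x, 0.0)        # positions judged informative
    redundant = np.where(keep, 0.0, x)          # positions judged redundant
    return informative, redundant

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 16, 16))
gamma = np.abs(rng.standard_normal(8)) + 0.1
beta = np.zeros(8)
informative, redundant = spatial_gate(x, gamma, beta)
```

With `beta` set to zero, this gate keeps the activations at or above each channel's mean. A trained module would learn `gamma` and `beta`, and would typically recombine the two parts rather than discard the redundant one outright.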

Loss Function

Accurate segmentation also depends on the training objective. The network is trained with a composite loss function that combines Dice loss and Cross Entropy Loss.

Dice Loss

The Dice coefficient measures the overlap between the predicted segmentation and the ground truth, and the Dice loss is one minus this coefficient. Because the coefficient is normalized by region size, it remains informative under the class imbalance typical of segmentation tasks.
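
A minimal NumPy sketch of a soft Dice loss follows, assuming per-class probabilities and a one-hot ground truth, averaged over classes. The smoothing constant `eps` and the class-averaging choice are assumptions; the source does not specify them.

```python
import numpy as np

def dice_loss(probs, target, eps=1e-6):
    """Soft Dice loss.

    probs: (K, H, W) predicted class probabilities.
    target: (K, H, W) one-hot ground truth.
    Returns 1 minus the Dice coefficient averaged over the K classes.
    """
    inter = (probs * target).sum(axis=(1, 2))
    denom = probs.sum(axis=(1, 2)) + target.sum(axis=(1, 2))
    dice = (2.0 * inter + eps) / (denom + eps)
    return 1.0 - dice.mean()

# Two classes on a 2x2 image: class 0 in the left column, class 1 in the right.
target = np.zeros((2, 2, 2))
target[0, :, 0] = 1
target[1, :, 1] = 1

perfect = dice_loss(target, target)      # prediction equals ground truth
wrong = dice_loss(1.0 - target, target)  # every pixel assigned the other class
```

A perfect prediction drives the loss to zero, while a prediction with no overlap drives it towards one, regardless of how many pixels each class occupies.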

Cross Entropy Loss

Complementing the Dice loss, Cross Entropy Loss penalizes the discrepancy between the predicted class distribution at each pixel and the ground-truth label, supplying a pixel-wise classification signal.
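
For reference, a pixel-wise cross entropy over raw logits can be sketched as follows. The mean reduction over pixels and the tensor layout are assumptions for illustration.

```python
import numpy as np

def cross_entropy(logits, labels):
    """Mean pixel-wise cross entropy.

    logits: (K, H, W) unnormalized class scores.
    labels: (H, W) integer class ids in [0, K).
    """
    # Numerically stable log-softmax over the class axis.
    z = logits - logits.max(axis=0, keepdims=True)
    log_probs = z - np.log(np.exp(z).sum(axis=0, keepdims=True))
    # Pick the log-probability of the true class at every pixel.
    picked = np.take_along_axis(log_probs, labels[None, :, :], axis=0)[0]
    return -picked.mean()

labels = np.array([[0, 1], [2, 3]])

# Uniform logits over 4 classes give a loss of log(4).
uniform = cross_entropy(np.zeros((4, 2, 2)), labels)

# Logits that strongly favour the true class give a loss close to zero.
confident = np.full((4, 2, 2), -20.0)
np.put_along_axis(confident, labels[None, :, :], 20.0, axis=0)
low = cross_entropy(confident, labels)
```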

Combined Loss Function

The final loss function combines these two terms, weighted by an empirically chosen λ (0.2 in our study), so that the model balances overall accuracy against fine-grained segmentation quality.
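
The text does not state which term λ scales or whether the two weights sum to one. One common convention, assumed here purely for illustration, is a convex combination, with $p$ the predicted probabilities, $g$ the one-hot ground truth, $i$ indexing the $N$ pixels, and $k$ the classes:

$$
\mathcal{L}_{\text{Dice}} = 1 - \frac{2\sum_{i} p_i\, g_i}{\sum_{i} p_i + \sum_{i} g_i},
\qquad
\mathcal{L}_{\text{CE}} = -\frac{1}{N}\sum_{i}\sum_{k} g_{i,k}\,\log p_{i,k},
\qquad
\mathcal{L} = \lambda\,\mathcal{L}_{\text{Dice}} + (1-\lambda)\,\mathcal{L}_{\text{CE}}
$$

Under that assumption, with λ = 0.2 and made-up values of 0.30 for the Dice term and 0.50 for the Cross Entropy term, the total is 0.2 × 0.30 + 0.8 × 0.50 = 0.46. If λ instead scaled the Cross Entropy term, the same values would give 0.8 × 0.30 + 0.2 × 0.50 = 0.34, so the convention matters when reproducing the setup.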

This Mamba-based ViT network pairs an M-ViT encoder with a Pyramid SCconv decoder and a composite Dice and Cross Entropy loss for mural image segmentation. Each component is designed to improve segmentation performance: the encoder captures multi-scale context, the decoder fuses it while suppressing redundancy, and the loss balances overall accuracy against fine-grained detail.