VADGAT: Advancements in Aspect-Based Sentiment Analysis

Sentiment analysis has evolved significantly in recent years, particularly in Aspect-Based Sentiment Analysis (ABSA). This article examines VADGAT (Valence, Arousal, and Dominance Graph Attention Network), covering its benchmarks, experimental settings, and the contribution of its components.

Benchmarks for Validation

To evaluate VADGAT, experiments were run on five widely used benchmarks: Restaurant14 (Rest14), Restaurant15 (Rest15), Restaurant16 (Rest16), Twitter, and Laptop14 (Lap14). The datasets are drawn from SemEval competitions and from Twitter, so they cover both review text and social media posts. Table 1 gives a statistical overview of the sentiment labels in each dataset.

Experimental Settings

The experiments were implemented in PyTorch and run on an RTX 3080Ti GPU with 12GB of memory. spaCy was used to build the dependency matrix of each input sentence, which gives the model access to syntactic structure. VADGAT was trained for 30 epochs and evaluated with the standard Accuracy and F1 metrics for ABSA.
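The dependency matrix mentioned above can be pictured as an adjacency matrix over tokens. The following is a minimal sketch, not the authors' preprocessing code: the function name `dependency_adjacency`, the undirected edges, and the self-loops are all assumptions made for illustration. It takes a list of head indices rather than calling a parser, so it runs without any model download.

```python
from typing import List


def dependency_adjacency(heads: List[int], self_loops: bool = True) -> List[List[int]]:
    """Build a symmetric 0/1 adjacency matrix from dependency heads.

    heads[i] is the index of token i's syntactic head. The root token
    points to itself, which matches how spaCy reports token.head for a root.
    Undirected edges and self-loops are illustrative assumptions.
    """
    n = len(heads)
    adj = [[0] * n for _ in range(n)]
    for child, head in enumerate(heads):
        if not 0 <= head < n:
            raise ValueError(f"head index {head} out of range for token {child}")
        if child != head:
            # Treat each dependency arc as an undirected edge.
            adj[child][head] = 1
            adj[head][child] = 1
    if self_loops:
        for i in range(n):
            adj[i][i] = 1
    return adj


# Hand-written heads for "The food was great" (root = "was", index 2).
heads = [1, 2, 2, 2]
matrix = dependency_adjacency(heads)
for row in matrix:
    print(row)
```

With spaCy installed and an English pipeline loaded as `nlp`, the head list could be obtained with `[token.head.i for token in nlp(sentence)]`.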

State-of-the-Art Baselines

To put VADGAT’s performance in context, several state-of-the-art (SOTA) baselines were selected for comparison, spanning methods based on Long Short-Term Memory Networks (LSTM), BERT, and RoBERTa. Key baselines include:

- R-GAT: Reshapes the original dependency tree into an aspect-oriented tree and encodes it with a relational graph attention network.

- DGEDT: Utilizes both flat and graph-based representations to exploit the strengths of Transformers and dependency graphs.

- DualGCN and Hete-GNNs: These approaches exploit syntactic and semantic correlations at the same time.

- Graph models combined with BERT or RoBERTa: Approaches such as R-GAT+BERT and RGAT-RoBERTa pair graph attention networks with pre-trained language models.

Main Results

The results show a clear advantage for VADGAT over the chosen baselines. For instance, VADGAT achieved 83.07% Accuracy and an 80.10% F1 score on the Lap14 dataset. On Rest16, it also outperformed the closest competing approach, RSSG+BERT.
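The Accuracy and F1 figures reported above can be computed along the following lines. This is a sketch under one stated assumption: the article does not say how F1 is averaged, so macro-averaging over the sentiment classes is assumed here, as is common for ABSA benchmarks. The function name and the toy labels are illustrative only.

```python
from typing import Sequence, Tuple


def accuracy_and_macro_f1(gold: Sequence[str], pred: Sequence[str]) -> Tuple[float, float]:
    """Return (accuracy, macro-averaged F1) for two equally long label lists."""
    if len(gold) != len(pred) or not gold:
        raise ValueError("gold and pred must be non-empty and equally long")

    correct = sum(g == p for g, p in zip(gold, pred))
    accuracy = correct / len(gold)

    f1_scores = []
    for label in sorted(set(gold) | set(pred)):
        tp = sum(g == label and p == label for g, p in zip(gold, pred))
        fp = sum(g != label and p == label for g, p in zip(gold, pred))
        fn = sum(g == label and p != label for g, p in zip(gold, pred))
        # Per-class F1 written as 2TP / (2TP + FP + FN).
        denom = 2 * tp + fp + fn
        f1_scores.append(2 * tp / denom if denom else 0.0)

    # Macro average: every class counts equally, regardless of frequency.
    macro_f1 = sum(f1_scores) / len(f1_scores)
    return accuracy, macro_f1


# Toy labels, not taken from any benchmark.
gold = ["positive", "negative", "neutral", "positive"]
pred = ["positive", "negative", "positive", "positive"]
acc, f1 = accuracy_and_macro_f1(gold, pred)
print(f"accuracy={acc:.2f} macro_f1={f1:.2f}")
```

Macro-averaging weights each class equally, so a model that misses most neutral examples is penalized on F1 even when its accuracy stays high.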

While VADGAT performed best on most datasets, its results on Rest14 were weaker. This discrepancy shows that the method’s effectiveness depends on the characteristics of the dataset.

Ablation Study: The Impact of V, A, and D

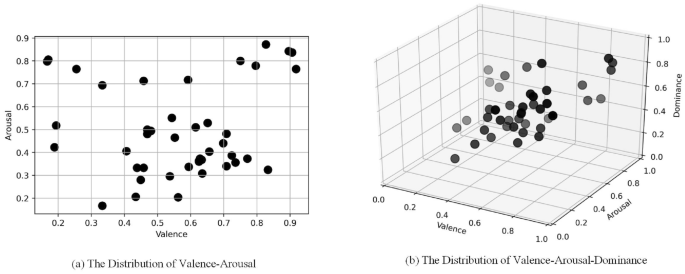

A central component of VADGAT is its use of three sentiment dimensions: Valence (V), Arousal (A), and Dominance (D). An ablation study analyzed how much each dimension contributes to sentiment inference. The findings suggest that every dimension improves VADGAT’s performance, although the best configuration varies across datasets. Some datasets reached their best results with particular combinations of dimensions, which indicates that the choice of dimensions should be made per dataset.
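An ablation over V, A, and D amounts to training the model once per combination of dimensions. The sketch below only enumerates the candidate settings. The article does not list which combinations were actually tested, so enumerating every subset, including the empty one with no affective knowledge, is an assumption, as are the names `DIMENSIONS` and `ablation_settings`.

```python
from itertools import combinations
from typing import List, Tuple

DIMENSIONS = ("V", "A", "D")  # Valence, Arousal, Dominance


def ablation_settings(dimensions: Tuple[str, ...] = DIMENSIONS) -> List[Tuple[str, ...]]:
    """List every subset of the given dimensions, from none to all."""
    settings: List[Tuple[str, ...]] = []
    for size in range(len(dimensions) + 1):
        settings.extend(combinations(dimensions, size))
    return settings


settings = ablation_settings()
for setting in settings:
    # The empty tuple stands for a run without any affective dimension.
    print("+".join(setting) or "none")
```

Each setting would then drive one training run, with the resulting Accuracy and F1 compared per dataset.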

Robustness of VADGAT

To test how well VADGAT generalizes, experiments used contextual representations from several pre-trained language models (PLMs): RoBERTa, BERT, GPT, and BART. RoBERTa gave the largest boost to VADGAT’s performance. This also suggests that the model remains sensitive to the choice of PLM, so further experiments are needed to establish broader applicability.

Hyperparameter Exploration

Hyperparameter tuning is key to optimizing VADGAT’s performance. The parameters examined include the ratio of original to twin features, JS rate, batch size, optimizer, learning rate, and dropout. Each of these settings had a significant influence on how well the model learned.

- Ratios of Original and Twin Features: Experiments showed that the contributions of the original features and their twin counterparts need careful calibration, and that the best ratio differs across datasets.

- Batch Size: A batch size of 8 was identified as the setting at which VADGAT learned most effectively from the input data.

- Optimizer Effectiveness: Adam performed best in most settings, which shows that the choice of optimizer matters for model performance.

Learning Rates and Dropout Effects

Experiments with learning rates showed that a low learning rate (1e-5) gave the best results across the datasets, which is consistent with the need for small, careful updates when fine-tuning. The effect of dropout varied by dataset, so the best dropout value had to be tuned for each one.
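The reported settings can be collected in a small configuration object, with dropout swept per dataset. This is an illustrative sketch rather than the authors' training setup: `TrainConfig` and `dropout_sweep` are hypothetical names, and the dropout values are placeholders because the article does not report them.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class TrainConfig:
    epochs: int = 30              # reported in the article
    batch_size: int = 8           # reported in the article
    optimizer: str = "adam"       # reported as best in most settings
    learning_rate: float = 1e-5   # reported in the article
    dropout: float = 0.1          # hypothetical placeholder, tuned per dataset


def dropout_sweep(base: TrainConfig, values: List[float]) -> List[TrainConfig]:
    """Return one config per dropout value, leaving all other fields unchanged."""
    for value in values:
        if not 0.0 <= value < 1.0:
            raise ValueError(f"dropout must be in [0, 1), got {value}")
    return [replace(base, dropout=value) for value in values]


# Placeholder dropout values; the article does not state the ones it tried.
configs = dropout_sweep(TrainConfig(), [0.1, 0.3, 0.5])
for config in configs:
    print(config)
```

Keeping the config frozen means each run gets its own immutable copy, which makes it easier to log exactly which settings produced which result.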

Case Studies and Error Analysis

A case study covering the different sentiment labels was used to assess VADGAT’s predictions. Positive and negative sentiments were consistently predicted with high accuracy, while neutral labels proved harder. Attention visualizations showed how the model formed its sentiment judgments and highlighted cases where external knowledge may have led to over-inference, particularly for neutral examples.

Discussion and Limitations

Despite these advances, VADGAT has limitations. It relies on a single prompt template to draw on the PLM’s capabilities, so evaluating a wider range of templates is a natural direction for future work and could improve its results. In addition, injecting sentiment knowledge sequentially may cause the injected sources to conflict, which points to the need for a more structured way of using external sentiment knowledge.

VADGAT represents a significant step in integrating nuanced affective knowledge into sentiment analysis frameworks and offers a basis for further research in natural language processing. As sentiment analysis continues to evolve, understanding the mechanisms behind models like VADGAT will be important for using them to their full potential.