Insights into Data Preparation for Predictive Heart Disease Models

Feature Selection and Data Management

When starting a project to predict heart disease, the first steps usually include feature selection, handling of missing data, outlier detection, data normalization, discretization, and data visualization. Each of these steps refines the dataset used for model training. Careful feature selection, for instance, ensures that only the most relevant attributes contribute to the predictive power of the model.

Handling missing data is equally important, because incomplete records can lead to biased predictions. Simple techniques such as mean imputation, or more advanced methods such as multiple imputation, can be used to address this issue. Identifying and managing outliers is also essential, because extreme values can skew results and lead to inaccurate assessments of heart disease risk.
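As a minimal sketch of the mean imputation mentioned above, the snippet below fills missing entries in a single numeric column with the mean of the observed values. The function name `impute_mean`, the use of `None` to mark a missing value, and the sample cholesterol readings are all assumptions made for illustration; they are not taken from the study.

```python
from statistics import mean


def impute_mean(values):
    """Replace None entries with the mean of the observed (non-missing) values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    fill = mean(observed)
    return [fill if v is None else v for v in values]


# Hypothetical cholesterol readings; None marks a missing measurement.
chol = [233, None, 250, 204, None, 193]
chol_imputed = impute_mean(chol)
```

Mean imputation keeps the column mean unchanged but shrinks its variance, which is one reason multiple imputation is often preferred when many values are missing.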

On the visualization front, graphical tools such as box plots and scatter plots make the distribution of each feature, and the relationships between features, much easier to see. Effective visualization allows researchers to interpret complex datasets quickly and to identify trends and patterns that inform subsequent modeling efforts.

Data Identification Phase

In the data identification phase, the focus shifts towards acquiring comprehensive information about heart and cardiovascular disorders. This requires a dual evaluation approach: one that considers both medical perspectives and the applicability of technological advancements. A dataset must not only be rich in relevant clinical details but should also align with the technological frameworks established for predictive modeling.

The Cleveland Clinic heart patients dataset serves as an excellent case study. Extensive research on this dataset has reaffirmed its suitability for developing predictive models for heart disorders. Because the records carry real-world labels assigned by medical professionals, who classified each patient as either sick or healthy, the dataset offers a solid foundation for deriving valid predictive insights about heart disease.

Data Preparation Phase

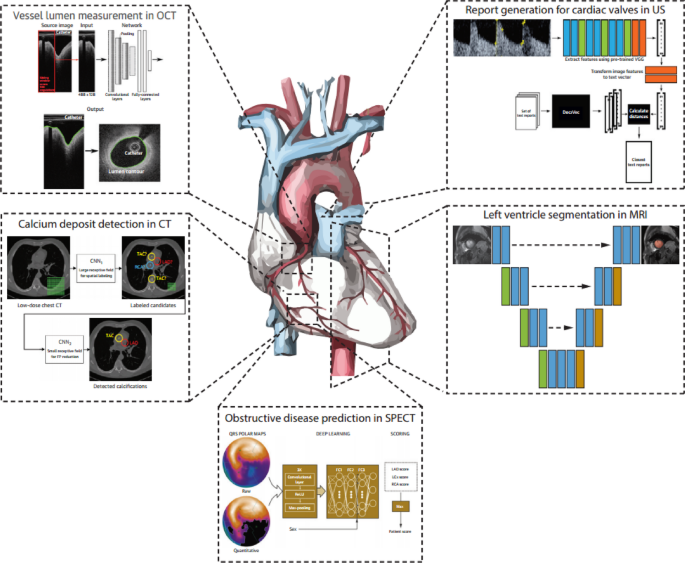

Data preparation draws on two sources: clinical features from established datasets such as Cleveland UCI, and image features captured from cardiac ultrasounds. Clinical metrics such as blood pressure and cholesterol levels, combined with image-derived features such as cardiac region area and mean pixel intensity, form a composite dataset distinct in its richness and relevance.

Tables comparing these features show how the clinical characteristics are grounded in tangible medical data, while image processing techniques such as Fully Convolutional Networks (FCN) and U-Net models further augment the dimensionality of the available data. Figures from the research illustrate this blend of clinical and imaging data and show how diverse sources contribute to a robust predictive model.

Univariate Outlier Data

Univariate outlier analysis provides an initial layer of inspection wherein outliers are identified based solely on individual variables, utilizing graphical representations like box plots. These plots depict essential statistical metrics, highlighting discrepancies that warrant further investigation.

Values that fall outside bounds derived from the quartiles are treated as outliers. A common convention, and the one box plot whiskers usually reflect, places these bounds 1.5 times the interquartile range below the first quartile and above the third quartile. How outliers are handled depends on their medical context. For instance, outlying values among patients are retained when they fall within clinically significant ranges, while outlying values among healthy individuals may be adjusted to align with normative ranges.
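The quartile-based bounds can be sketched as follows. This is an illustration under stated assumptions rather than the study's procedure: the multiplier `k=1.5` is the usual box plot convention, the "inclusive" quartile method is one of several in use, clipping healthy individuals' values to the bounds is only one possible reading of "adjusted to align with normative ranges", and the names `iqr_bounds`, `find_outliers`, `adjust_healthy`, and the sample readings are hypothetical.

```python
from statistics import quantiles


def iqr_bounds(values, k=1.5):
    """Return (lower, upper) fences at k * IQR beyond the first and third quartiles."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def find_outliers(values, k=1.5):
    """Return the values lying outside the IQR fences."""
    low, high = iqr_bounds(values, k)
    return [v for v in values if v < low or v > high]


def adjust_healthy(values, is_patient, k=1.5):
    """Keep patients' values as they are; clip healthy individuals' values to the fences.

    Clipping is an assumed adjustment rule, chosen here only for illustration.
    """
    low, high = iqr_bounds(values, k)
    return [
        v if sick else min(max(v, low), high)
        for v, sick in zip(values, is_patient)
    ]


# Hypothetical resting blood pressure readings (mm Hg).
resting_bp = [110, 120, 125, 130, 130, 135, 140, 145, 200]
outliers = find_outliers(resting_bp)
```

In a real pipeline, the decision to retain or adjust a value would rest on clinical judgment about the variable in question, not on the fences alone.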

Multivariate Outliers

As the study progresses, it is essential to adopt a multivariate approach to outlier detection. The Mahalanobis distance is a useful metric here: it resembles Euclidean distance but accounts for the variance of each feature and the correlations between features. It measures how far an individual observation lies from the population mean.

In practice, this allows instances that deviate significantly from the rest of the data to be identified and excluded. Box plots of the Mahalanobis distance for each data class make the separation between typical observations and outliers visible.
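To make the Mahalanobis distance concrete, the sketch below computes it for observations with two features, using the closed-form inverse of a 2x2 sample covariance matrix so that only the standard library is needed. The restriction to two features, the function name `mahalanobis_2d`, and the sample points are assumptions for illustration; a real dataset with many features would typically use a linear algebra library, and the cutoff for excluding an observation is left to the analyst.

```python
from math import sqrt


def mahalanobis_2d(points):
    """Return the Mahalanobis distance of each (x, y) point from the sample mean."""
    n = len(points)
    if n < 3:
        raise ValueError("need at least three points to estimate a 2x2 covariance")
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n

    # Entries of the sample covariance matrix S (n - 1 denominator).
    sxx = sum((x - mx) ** 2 for x, _ in points) / (n - 1)
    syy = sum((y - my) ** 2 for _, y in points) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in points) / (n - 1)

    det = sxx * syy - sxy ** 2
    if det <= 0:
        raise ValueError("covariance matrix is singular")

    distances = []
    for x, y in points:
        dx, dy = x - mx, y - my
        # d^2 = [dx dy] S^-1 [dx dy]^T, with S^-1 = (1/det) [[syy, -sxy], [-sxy, sxx]]
        d2 = (syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det
        distances.append(sqrt(max(d2, 0.0)))
    return distances


# Hypothetical two-feature observations; the last point breaks the overall trend.
sample = [(1.0, 1.0), (2.0, 2.1), (3.0, 2.9), (4.0, 4.2), (5.0, 4.8), (3.0, 8.0)]
distances = mahalanobis_2d(sample)
most_extreme = distances.index(max(distances))
```

The last point has an unremarkable value on the first feature, so a univariate check on that feature alone would not flag it; the multivariate distance does, because the point sits far from the trend formed by the other observations.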

Numerical Data Normalization and Discretization

Normalization is imperative in preparing numerical data, particularly when input features exhibit diverse value ranges. The Min-Max normalization method has been employed to scale features uniformly between 0 and 1, ensuring consistent behavior during model training. This is particularly critical in a healthcare context where discrepancies in feature scales can lead to inefficiencies or inaccuracies in predictive outputs.
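Min-Max normalization maps each value v to (v - min) / (max - min). A minimal sketch, assuming a single numeric column held in a list; the function name `min_max_scale`, the handling of constant columns, and the sample values are illustrative choices, not details from the study:

```python
def min_max_scale(values):
    """Scale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column carries no information; map it to 0.0 by convention.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical maximum heart rate readings (beats per minute).
max_hr = [100, 150, 200]
max_hr_scaled = min_max_scale(max_hr)
```

Because the minimum and maximum define the scale, a single extreme value compresses all other values into a narrow band, which is one reason to treat outliers before normalizing.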

Complementary to normalization, data discretization transforms numerical features into categorical representations, making them more amenable to certain modeling techniques commonly utilized in heart disease diagnosis. This conversion, guided by medical context, ensures that key variables align with clinical insights.
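As an illustration of discretization guided by medical context, the sketch below maps a numeric value to a category using fixed cut points. The cholesterol thresholds (200 and 240 mg/dL) follow commonly cited adult categories and are an assumption here; the source does not state which cut points the study used, and the names `discretize`, `CHOL_CUTS`, and `CHOL_LABELS` are hypothetical.

```python
from bisect import bisect_right


def discretize(value, cut_points, labels):
    """Return the label of the interval that contains value.

    cut_points must be sorted in ascending order; interval i covers
    cut_points[i - 1] <= value < cut_points[i].
    """
    if len(labels) != len(cut_points) + 1:
        raise ValueError("need exactly one more label than cut points")
    return labels[bisect_right(cut_points, value)]


# Assumed total cholesterol categories in mg/dL (illustrative only).
CHOL_CUTS = [200, 240]
CHOL_LABELS = ["desirable", "borderline high", "high"]

chol_categories = [discretize(v, CHOL_CUTS, CHOL_LABELS) for v in [180, 200, 239, 240]]
```

Using clinically motivated cut points rather than equal-width bins keeps the resulting categories interpretable to healthcare professionals.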

Flowcharts detailing the pathway from data integration to preprocessing provide visual guidance through this complex procedure. The interplay between clinical and imaging data, supported by techniques such as ResNet-50 for feature extraction, shows how diverse sources can be aggregated to inform heart disease diagnosis.

Visualization Techniques

As critical as raw data is, visualization acts as a bridge connecting complex statistical insights with comprehensible narratives. Through visual displays, like the segmentation of cardiac ultrasound images, it becomes evident how preprocessing improves image quality, enhances key feature isolation, and ultimately contributes to more accurate deep learning model training.

Such visualization not only assists in understanding the data’s intricate details, but also aids in communicating findings effectively to stakeholders or healthcare professionals, reinforcing the model’s implications in real-world healthcare settings.

Conclusion

With feature selection, normalization, and outlier management in place, the groundwork is laid for the predictive modeling of heart disease. Each component of data preparation plays a vital role in ensuring that the predictive outcomes are not only accurate but also clinically relevant.