Understanding Datasets in Multimodal Machine Translation

Datasets Collection

In the realm of Multimodal Machine Translation (MMT), the selection of datasets is crucial for training robust models. This experiment uses two prominent datasets: Multi30K and Microsoft Common Objects in Context (MS COCO).

Multi30K Dataset

Multi30K is celebrated for its diversity, consisting of image-text pairs across various domains. It’s a go-to dataset for tasks such as image caption generation and multimodal translation. The dataset supports three language pairs:

- English to German (En-De)

- English to French (En-Fr)

- English to Czech (En-Cs)

Multi30K is split into:

- 29,000 bilingual parallel sentence pairs for training

- 1,000 validation samples

- 1,000 test samples

Each sentence in the dataset is paired with a corresponding image. This ensures that the textual description aligns with the visual content, providing a rich source of multimodal data for training models.

The specific subsets used in our analysis are the test16 and test17 test sets.

MS COCO Dataset

MS COCO is another rich dataset, containing a large collection of images along with their corresponding descriptions. It is a standard benchmark for various tasks in computer vision and Natural Language Processing (NLP). Given its comprehensive semantic annotations, MS COCO is particularly well suited to evaluating model performance in cross-domain and cross-lingual translation tasks.

Experimental Environment

To conduct the experiments, we utilize Fairseq, an open-source toolkit built on the PyTorch framework. Fairseq is widely adopted in NLP tasks, especially for constructing and training machine translation models.

Its flexibility in supporting various model architectures—ranging from Recurrent Neural Networks (RNNs) to Convolutional Neural Networks and Transformers—allows researchers to enhance performance in MT tasks effectively.

With Fairseq, building the experimental model framework is straightforward. The toolkit not only supports efficient parallel computing but also optimizes training workflows for large-scale model training.

Parameter Settings

The parameters that govern the experimental setup are listed in Table 1 (not shown here). Each is chosen to keep model training stable and efficient.

Evaluation Metrics

To gauge the performance of the FACT model comprehensively, we employ two widely used evaluation metrics: Bilingual Evaluation Understudy (BLEU) and METEOR. Both are standard automatic evaluation metrics in current machine translation research.

BLEU measures translation quality by counting n-gram matches between the translated and reference texts. Because n-gram precision alone would favor short translations, the score is multiplied by a brevity penalty (BP) that lowers it for translations shorter than the reference.
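To make the role of the brevity penalty concrete, the following is a minimal sketch of sentence-level BLEU with a single reference and no smoothing. It is an illustration only, not the scoring script used in the experiments; the function names `ngrams` and `bleu` and the whitespace tokenization are assumptions made for this example.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count all n-grams of order n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate, reference, max_n=4):
    """Sentence-level BLEU, single reference, no smoothing (illustrative)."""
    cand, ref = candidate.split(), reference.split()
    if not cand:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngrams(cand, n)
        ref_counts = ngrams(ref, n)
        # Clipped counts: a candidate n-gram is credited at most as many
        # times as it occurs in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # without smoothing, one empty order zeroes the score
        log_precisions.append(math.log(overlap / total))
    # Brevity penalty: 1 if the candidate is longer than the reference,
    # otherwise exp(1 - r/c), which shrinks as the candidate gets shorter.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    # Geometric mean of the n-gram precisions, scaled by the penalty.
    return bp * math.exp(sum(log_precisions) / max_n)


if __name__ == "__main__":
    reference = "a man is riding a bike down the street"
    print(bleu("a man is riding a bike down the street", reference))
    print(bleu("a man is riding a bike", reference))
```

In the second call every n-gram of the candidate appears in the reference, so the precisions are all 1 and the drop in score comes entirely from the brevity penalty.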

METEOR, in contrast, is based on a word alignment between the candidate and reference translations, and therefore takes semantic information and word order into account more than BLEU does. It computes precision and recall over the words matched between the two.
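The alignment-based idea can be sketched as follows. This is a simplified variant that matches exact word forms only (the full metric also matches stems and synonyms) and uses a greedy alignment rather than searching for the alignment with the fewest chunks. The recall-weighted mean and the fragmentation penalty use the constants from the original METEOR formulation; the function name `meteor_exact` is an assumption made for this example.

```python
def meteor_exact(candidate, reference):
    """Simplified METEOR: exact matches only, greedy alignment (illustrative)."""
    cand, ref = candidate.split(), reference.split()
    used = [False] * len(ref)
    alignment = []  # (candidate index, reference index) pairs
    prev_ref = None
    for i, tok in enumerate(cand):
        # Prefer extending the current contiguous chunk when possible.
        nxt = prev_ref + 1 if prev_ref is not None else None
        if nxt is not None and nxt < len(ref) and not used[nxt] and ref[nxt] == tok:
            j = nxt
        else:
            j = next((k for k, r in enumerate(ref) if not used[k] and r == tok), None)
        if j is None:
            prev_ref = None
            continue
        used[j] = True
        alignment.append((i, j))
        prev_ref = j

    matches = len(alignment)
    if matches == 0:
        return 0.0
    precision = matches / len(cand)
    recall = matches / len(ref)
    # Harmonic mean weighted 9:1 toward recall.
    f_mean = 10 * precision * recall / (recall + 9 * precision)
    # A chunk is a run of matches adjacent in both candidate and reference.
    chunks = 1 + sum(
        1
        for (i0, j0), (i1, j1) in zip(alignment, alignment[1:])
        if i1 != i0 + 1 or j1 != j0 + 1
    )
    # Fragmentation penalty: more chunks means worse word order.
    penalty = 0.5 * (chunks / matches) ** 3
    return f_mean * (1 - penalty)


if __name__ == "__main__":
    reference = "the cat sat on the mat"
    print(meteor_exact("the cat sat on the mat", reference))
    print(meteor_exact("on the mat sat the cat", reference))
```

Both candidates contain exactly the reference words, so precision and recall are 1 in each case; the reordered candidate scores lower only because its matches fall into more chunks.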

By using both BLEU and METEOR, we evaluate the FACT model's output on two fronts: the formal accuracy and the semantic acceptability of the translations produced.

Performance Evaluation

Comparison of Model Performance

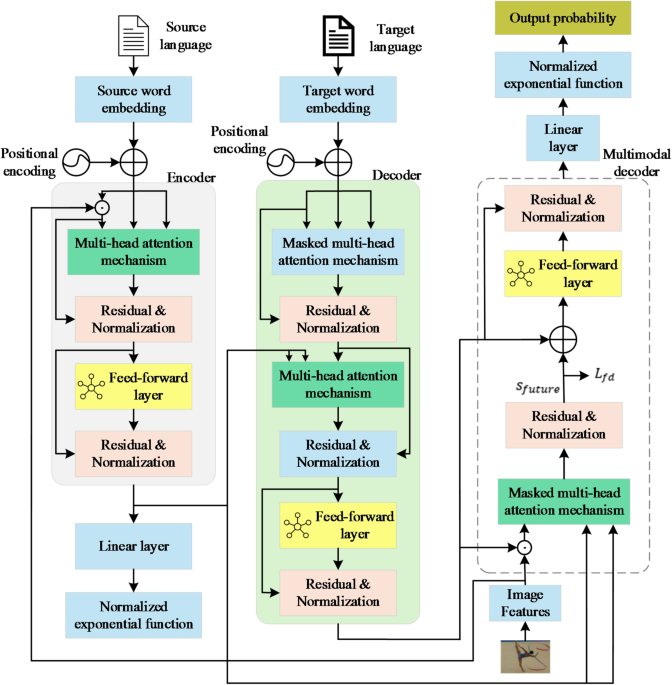

In assessing the effectiveness of the FACT model in MMT tasks, we compare its performance against five notable baseline models:

- Transformer: A classic architecture in machine translation, serving as a simple text-only baseline.

- Latent Multimodal Machine Translation (LMMT): Utilizes latent variables to probe multimodal interactions.

- Dynamic Context-Driven Capsule Network (DMMT): Enhances semantic coupling between modalities during translation.

- Target-modulated Multimodal Machine Translation (TMMT): Incorporates visual information in translation under a target modulation mechanism.

- Imagined Representation for Multimodal Machine Translation (IMMT): Attempts to formulate intermediate image representations.

While we recognize the prowess of advanced multimodal language models like GPT-4o and LLaVA, they were excluded from this study due to considerations surrounding closed-source access, resource intensiveness, and our model’s emphasis on structural lightness and training efficiency.

The results in Fig. 3 show that the FACT model consistently outperforms the baselines in both BLEU and METEOR scores across the different translation tasks, which supports its effectiveness in MMT.

A detailed statistical analysis, outlined in Table 2 (not shown here), reports p-values that support the statistical significance of FACT's performance advantage over the baseline models.
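The text does not say which significance test produced these p-values. One common choice in machine translation is paired bootstrap resampling, sketched below under that assumption. The function name `paired_bootstrap_p_value`, the use of per-sentence scores, and the sample values are all hypothetical; corpus-level BLEU is not a mean of sentence scores, so this is a simplification.

```python
import random


def paired_bootstrap_p_value(scores_a, scores_b, n_resamples=1000, seed=0):
    """Estimate how often system A fails to beat system B on resampled test sets.

    scores_a and scores_b are per-sentence scores for the same test sentences,
    in the same order. A small return value suggests A's advantage is unlikely
    to be an artifact of the particular test sample.
    """
    if len(scores_a) != len(scores_b) or not scores_a:
        raise ValueError("score lists must be non-empty and of equal length")
    rng = random.Random(seed)
    n = len(scores_a)
    not_better = 0
    for _ in range(n_resamples):
        # Draw a test set of the same size, with replacement, and score
        # both systems on exactly the same drawn sentences.
        idx = [rng.randrange(n) for _ in range(n)]
        total_a = sum(scores_a[i] for i in idx)
        total_b = sum(scores_b[i] for i in idx)
        if total_a <= total_b:
            not_better += 1
    return not_better / n_resamples


if __name__ == "__main__":
    # Hypothetical per-sentence scores, for illustration only.
    system_a = [0.42, 0.55, 0.61, 0.38, 0.70, 0.49, 0.58, 0.66]
    system_b = [0.40, 0.50, 0.63, 0.30, 0.65, 0.47, 0.52, 0.60]
    print(paired_bootstrap_p_value(system_a, system_b))
```

Pairing matters here: both systems are scored on the same resampled sentences, so sentence difficulty affects both sides equally.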

Ablation Experiment

To show how the FACT model improves translation performance by integrating visual features, we construct several variant models. These experiments are crucial for understanding the interaction between the different components of the FACT model.

Figs. 4, 5, and 6 show how model performance varies when certain functions are disabled, and indicate that the FACT model remains robust even in reduced configurations.

Sentence Length Impact

An intriguing aspect of translation models is how they respond to sentence length. Fig. 7 shows that as source sentences grow longer, the FACT model maintains a clear advantage over the Transformer. The gain in translation quality is most visible on longer sentences, where FACT produces more contextually coherent translations.
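An analysis of this kind can be sketched by grouping test sentences into buckets by source length and averaging a per-sentence score within each bucket. The bucket width of 10 tokens, the whitespace tokenization, and the function name `mean_score_by_length` are assumptions made for illustration; the grouping actually used for Fig. 7 is not stated here.

```python
from collections import defaultdict


def mean_score_by_length(sources, scores, bucket_width=10):
    """Average per-sentence scores within source-length buckets.

    Buckets cover token counts 1..w, w+1..2w, and so on, where w is
    bucket_width. Returns a dict mapping (low, high) to the mean score.
    """
    if len(sources) != len(scores):
        raise ValueError("sources and scores must have the same length")
    buckets = defaultdict(list)
    for src, score in zip(sources, scores):
        n_tokens = max(len(src.split()), 1)  # treat empty input as length 1
        low = (n_tokens - 1) // bucket_width * bucket_width + 1
        buckets[(low, low + bucket_width - 1)].append(score)
    return {b: sum(v) / len(v) for b, v in sorted(buckets.items())}


if __name__ == "__main__":
    # Hypothetical sentences and scores, for illustration only.
    sources = [
        "a dog runs",
        "two children play in the park",
        "a man in a red jacket is riding a bike down a busy city street",
    ]
    scores = [0.70, 0.60, 0.40]
    for (low, high), mean in mean_score_by_length(sources, scores).items():
        print(f"{low}-{high} tokens: {mean:.2f}")
```

Running the same function on the scores of two systems and comparing the per-bucket means gives the kind of length-wise comparison described above.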

Learning Investment Effect

To explore the efficacy of the FACT model in language learning applications, a key area for enhancing educational approaches, we compare its performance against the Transformer model. As seen in Fig. 8, the FACT model achieves higher learning efficiency, translation quality, and user satisfaction, indicating its potential to improve language learning outcomes.

Together, this structured experimentation and comprehensive evaluation of the FACT model point to promising advances in the methodologies underpinning Multimodal Machine Translation, opening avenues for further exploration and application in the field.