Global Channel-Spatial Attention (GCSA) in Convolutional Neural Networks

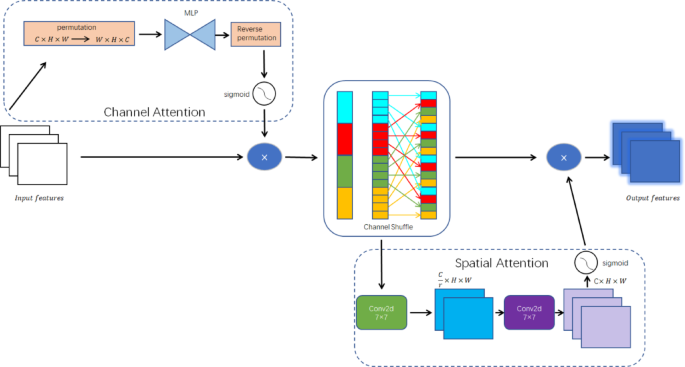

Convolutional Neural Networks (CNNs) have made impressive strides in feature extraction and representation. Yet traditional CNNs frequently overlook the relationships between channels, which play a pivotal role in capturing global information. This oversight limits how well the model can exploit the latent knowledge within feature maps and extract global features. To address this limitation, we introduce the Global Channel-Spatial Attention Module (GCSA). The module is designed to strengthen the expressive power of input feature maps by capturing global dependencies through a combination of channel attention, channel shuffle, and spatial attention.

Channel Attention Mechanism

At the heart of the GCSA design lies the channel attention submodule, which strengthens inter-channel dependencies. It uses a multilayer perceptron (MLP) to suppress redundant information across channels while accentuating critical features. The input feature map is first permuted from $C \times H \times W$ to $H \times W \times C$, which places the channel dimension last so that the MLP acts along it.

The operations are defined as follows:

$$
F_{perm} = P(F_{input}) \in \mathbb{R}^{H \times W \times C}
$$

Next, we model inter-channel dependencies with a two-layer MLP. The first layer compresses the channel count to one-fourth of the original and applies the ReLU activation $\delta$ to introduce nonlinearity. The second layer restores the channel dimension, and a sigmoid $\sigma$ maps the result to attention weights. The weight matrices have dimensions $W_1 \in \mathbb{R}^{\frac{C}{r} \times C}$ and $W_2 \in \mathbb{R}^{C \times \frac{C}{r}}$, with reduction ratio $r = 4$. This bottleneck structure captures channel-wise relationships as follows:

$$
\mu_c = \sigma(W_2 \cdot \delta(W_1 \cdot F_{perm}))
$$

The reduction ratio controls how strongly the hidden layer compresses the channels; the aim is to cut redundancy without discarding crucial channel information. An inverse permutation then returns the attention weights to the original layout, and element-wise multiplication with the input yields the channel-enhanced feature map:

$$
F_{channel} = F_{input} \otimes P^{-1}(\mu_c)
$$

This concludes the channel attention phase, producing an enriched feature map through effective channel-wise scaling.
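The channel attention steps above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: a batch axis `B` is added in front, the MLP has no bias terms (matching the equation), and the weights are random placeholders rather than trained values.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(f_input, w1, w2):
    """f_input: (B, C, H, W); w1: (C // r, C); w2: (C, C // r)."""
    f_perm = f_input.transpose(0, 2, 3, 1)       # P: (B, H, W, C), channels last
    hidden = np.maximum(f_perm @ w1.T, 0.0)      # delta(W_1 . F_perm): (B, H, W, C // r)
    mu_c = sigmoid(hidden @ w2.T)                # sigma(W_2 . ...):    (B, H, W, C)
    weights = mu_c.transpose(0, 3, 1, 2)         # P^{-1}: back to (B, C, H, W)
    return f_input * weights                     # element-wise scaling of the input

# Placeholder sizes and random weights, chosen only for illustration.
rng = np.random.default_rng(0)
B, C, H, W, r = 2, 8, 5, 5, 4
x = rng.standard_normal((B, C, H, W))
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
out = channel_attention(x, w1, w2)
print(out.shape)  # (2, 8, 5, 5)
```

Because the sigmoid output lies in (0, 1), each element of the output is a damped copy of the corresponding input element; the module rescales features rather than replacing them.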

Channel Shuffle Operation

To further strengthen the interaction among feature channels, we incorporate a channel shuffle operation. While channel attention highlights important features, it may still leave information unintegrated among channels. We therefore split the enhanced feature map into $g$ groups (for instance, four), each containing $C/g$ channels. Transposing the group axis and the within-group channel axis then reorders the channels so that channels from different groups are interleaved, which improves information mixing and feature representation.

The mathematical operations are articulated as:

$$
F_{grouped} = \mathrm{Reshape}(F_{channel}, (B, g, C/g, H, W))
$$

$$
F_{shuffle} = \mathrm{Transpose}(F_{grouped}, 1, 2) \in \mathbb{R}^{B \times C/g \times g \times H \times W}
$$

This channel shuffle method facilitates greater information interaction across channels, augmenting overall feature representation while retaining the benefits realized through channel attention.
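The reshape and transpose can be sketched in NumPy as below. The final reshape back to `(B, C, H, W)` is an assumption: the equations stop at the transposed five-dimensional tensor, but the later convolutions need a four-dimensional input, so the sketch flattens the two channel axes again.

```python
import numpy as np

def channel_shuffle(f_channel, groups=4):
    """f_channel: (B, C, H, W) with C divisible by `groups`."""
    b, c, h, w = f_channel.shape
    if c % groups != 0:
        raise ValueError("channel count must be divisible by the number of groups")
    f_grouped = f_channel.reshape(b, groups, c // groups, h, w)  # (B, g, C/g, H, W)
    f_shuffle = f_grouped.transpose(0, 2, 1, 3, 4)               # swap axes 1 and 2
    return f_shuffle.reshape(b, c, h, w)                         # assumed: flatten back to C

# Eight channels labelled 0..7, split into four groups of two.
x = np.arange(8).reshape(1, 8, 1, 1)
print(channel_shuffle(x, groups=4).ravel().tolist())  # [0, 2, 4, 6, 1, 3, 5, 7]
```

In the toy example, the groups are (0, 1), (2, 3), (4, 5), (6, 7); after the shuffle, the first channel of every group comes first, followed by the second channel of every group.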

Spatial Attention Mechanism

In complement to the preceding processes, the spatial attention submodule is designed to tap into the spatial intricacies of the feature map. While the channel attention and shuffle operations effectively depict inter-channel relationships, neglecting spatial dimensions can culminate in missed crucial details. This shortfall limits the model’s performance in tasks that require nuanced understanding of both local and global features.

The spatial attention operates through two 7×7 convolutional layers. Initially, the feature map undergoes a convolution that reduces its channel count to a quarter of the original. Following a non-linear transformation via batch normalization and the ReLU activation function, a second convolution restores the channel size, concluding with the generation of a spatial attention map:

$$
F_{mid} = BN(ReLU(Conv_{7\times7}(F_{shuffle}; W_1)))
$$

$$
F_{spatial} = \sigma(BN(Conv_{7\times7}(F_{mid}; W_2)))
$$

The 7×7 kernels model relationships across a broader spatial context, capturing longer-range dependencies without requiring many stacked layers. The larger receptive field also helps avoid abrupt transitions and fragmentation in the attention distribution.
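The two-convolution spatial attention can be sketched in NumPy as follows. Several details are assumptions made for illustration: stride 1 with zero padding of 3 (so the spatial size is preserved), convolutions without bias, batch normalization using batch statistics with no learnable scale or shift, and random placeholder weights. The order of operations follows the equations as written, with ReLU applied before batch normalization in the first stage.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def conv2d_same(x, weight):
    """x: (B, C_in, H, W); weight: (C_out, C_in, k, k), odd k, stride 1, zero padding."""
    k = weight.shape[-1]
    p = k // 2
    x_pad = np.pad(x, ((0, 0), (0, 0), (p, p), (p, p)))
    # windows[b, c, h, w] holds the k x k patch centred on (h, w): (B, C_in, H, W, k, k)
    windows = sliding_window_view(x_pad, (k, k), axis=(2, 3))
    return np.einsum("bchwij,ocij->bohw", windows, weight)

def batch_norm(x, eps=1e-5):
    """Per-channel normalization over batch and spatial axes (no affine parameters)."""
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def spatial_attention(f_shuffle, w1, w2):
    f_mid = batch_norm(np.maximum(conv2d_same(f_shuffle, w1), 0.0))  # C -> C // 4
    return sigmoid(batch_norm(conv2d_same(f_mid, w2)))               # C // 4 -> C

# Placeholder sizes and random weights, chosen only for illustration.
rng = np.random.default_rng(0)
B, C, H, W = 2, 8, 6, 6
x = rng.standard_normal((B, C, H, W))
w1 = rng.standard_normal((C // 4, C, 7, 7)) * 0.05
w2 = rng.standard_normal((C, C // 4, 7, 7)) * 0.05
attn = spatial_attention(x, w1, w2)
print(attn.shape)  # (2, 8, 6, 6)
```

The attention map keeps all `C` channels instead of being pooled down to a single channel, so each channel receives its own spatial weighting.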

Output Feature Map

Combining the channel attention, channel shuffle, and spatial attention stages yields the final output feature map. This output carries the enhancements from each stage and is intended to improve model performance in applications such as malware detection, where the feature map encodes characteristics of the processed inputs and feeds subsequent analysis and classification.

Mechanisms Preventing Feature Degradation

The architecture employs various strategies to prevent feature degradation, preserving essential information throughout transformations:

- Feature Enhancement via Multiplication: Using multiplicative operations rather than replacements allows the network to trace back to its initial features, safeguarding essential information.

- Progressive Feature Transformation: By employing MLPs for dimension reduction with batch normalization and nonlinear activations, features retain expressiveness compared to direct linear transformations.

- Channel Shuffle Operation: This mechanism rearranges feature channels, enabling information interchange and mitigating the risk of long-term channel suppressions, fostering a continuous flow of information.

Comparison with CBAM

GCSA differs from the Convolutional Block Attention Module (CBAM) on several fronts:

Channel Attention

CBAM aggregates channel statistics with dual pooling (average and max pooling) before its MLP, while GCSA skips the pooling step and applies its MLP directly to the permuted feature map.

Spatial Attention

GCSA directly applies convolutions to multi-channel feature maps, preserving a richer information landscape, as opposed to CBAM’s pooling-based approach.

Channel Shuffling

GCSA uniquely integrates channel shuffling, promoting cross-channel interactions in a manner that fosters versatile feature propagation.

GCSA-ResNet Integration for Malware Detection

For malware detection, we integrated the GCSA module into the ResNet50 architecture. ResNet50 is built from bottleneck residual blocks, which address the vanishing-gradient and degradation problems encountered in deeper networks. Adding GCSA to ResNet50 strengthens the model's ability to refine features from visualized malware images and to distinguish the textures that matter.

The integrated network emphasizes local features that correspond to malware characteristics, which supports the classification task.

Stem Module

The Stem module carries out the initial feature extraction using a 7×7 convolution (stride=2) followed by max pooling. Within this stage, we incorporate the GCSA module, further refining input features by incorporating channel attention and spatial attention.
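As a quick check of the spatial sizes in the Stem, the following sketch applies the standard convolution output-size formula. The 224×224 input size is an assumption for illustration, and the padding and pooling settings (padding 3 for the convolution; 3×3 max pooling with stride 2 and padding 1) are those of the standard ResNet50 stem rather than values stated in the text. GCSA itself preserves the feature-map shape, so it does not change these numbers.

```python
def conv_out_size(size, kernel, stride, padding):
    """Output length along one spatial axis for a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1

size = 224  # assumed input resolution
after_conv = conv_out_size(size, kernel=7, stride=2, padding=3)        # 7x7 conv, stride 2
after_pool = conv_out_size(after_conv, kernel=3, stride=2, padding=1)  # 3x3 max pool, stride 2
print(after_conv, after_pool)  # 112 56
```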

Body Module

The Body consists of stacked Residual Blocks, which progressively extract features while utilizing skip connections, promoting efficient optimization.

Head Module

Finally, the Head module comprises global average pooling, followed by a fully connected layer to yield classification outputs, ensuring a cohesive end-to-end architecture for effective malware analysis.
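The Head can be sketched in NumPy as below. The channel count, class count, and weights are hypothetical placeholders (in a standard ResNet50 the final stage produces 2048 channels); the sketch only shows how global average pooling and a fully connected layer turn a feature map into class scores.

```python
import numpy as np

def head(features, fc_weight, fc_bias):
    """features: (B, C, H, W); fc_weight: (num_classes, C); fc_bias: (num_classes,)."""
    pooled = features.mean(axis=(2, 3))      # global average pooling -> (B, C)
    return pooled @ fc_weight.T + fc_bias    # fully connected layer -> (B, num_classes)

# Hypothetical sizes and random weights, chosen only for illustration.
rng = np.random.default_rng(0)
num_classes = 5
features = rng.standard_normal((4, 16, 7, 7))
fc_weight = rng.standard_normal((num_classes, 16)) * 0.01
fc_bias = np.zeros(num_classes)
logits = head(features, fc_weight, fc_bias)
print(logits.shape)  # (4, 5)
```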

In summary, the Global Channel-Spatial Attention mechanism represents a significant advancement in feature processing within CNNs, particularly for specialized applications in malware detection. The framework is engineered to maximize feature representation and channel interconnectivity, thereby improving classification accuracy within this critical area.