A Deep Dive into the Architecture and Functionality of MCRFS-Net for Image Dehazing

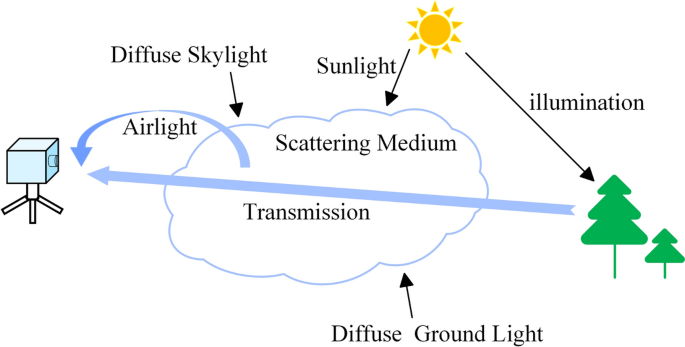

In image processing, fog, haze, and smoke often obscure a scene, reducing contrast and washing out colors and detail. Enter MCRFS-Net, an architecture designed for single-image dehazing that uses a multi-input, multi-output U-shaped encoder-decoder to restore image quality. Let’s take a detailed look at its components: the overall architecture, the Adaptive Multi-Scale Module and its Frequency Selection Block, and the loss functions that guide its learning.

Overview of the Architecture

At the heart of MCRFS-Net lies a U-shaped architecture consisting of an encoder and a decoder. The design draws on earlier work in the field, including multi-axis MLPs and deep residual Fourier transformation.

In the encoder, MCRFS-Net takes the degraded (hazy) image at several downsampled resolutions as input, which helps it handle varying levels of degradation. The extracted feature maps are integrated in three Down modules along the main path, increasing the network's flexibility and representational capacity. Each Down module contains several residual blocks; the last of these embeds the Adaptive Multi-Scale Module (AMSM), which in turn contains a key component, the Frequency Selection Block (FSB).
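
A minimal NumPy sketch of the multi-input side: building a pyramid of downsampled hazy images. The use of 2x2 average pooling and of three scales (one per Down module) are assumptions for illustration, and the helper names `avg_pool2x` and `build_input_pyramid` are hypothetical.

```python
import numpy as np

def avg_pool2x(img):
    """Downsample an (H, W, C) image by 2 with 2x2 average pooling.

    H and W are cropped to even sizes first so the reshape is valid.
    """
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    img = img[:h, :w]
    # Split each spatial axis into (blocks, 2) and average inside each 2x2 block.
    return img.reshape(h // 2, 2, w // 2, 2, -1).mean(axis=(1, 3))

def build_input_pyramid(hazy, num_scales=3):
    """Return [full, 1/2, 1/4, ...] resolution copies of the hazy input."""
    pyramid = [hazy]
    for _ in range(num_scales - 1):
        pyramid.append(avg_pool2x(pyramid[-1]))
    return pyramid

hazy = np.random.rand(256, 256, 3).astype(np.float32)
for level, x in enumerate(build_input_pyramid(hazy)):
    print(level, x.shape)
```

Real multi-input networks often resize with bilinear interpolation instead; average pooling is used here only because it is easy to verify by hand.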

In the decoder, the output of each Down module is passed directly to its corresponding Up module through a skip connection, and the Up modules reconstruct the dehazed image. Because the network both consumes and produces images at several scales, this multi-input, multi-output design improves dehazing performance. Training is guided by a custom multi-scale contrastive regularization loss that further sharpens the results.
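
To make the data flow concrete, here is a toy NumPy trace of a three-level multi-input, multi-output U-shape. Every "module" is a placeholder average (hypothetical), not a learned residual block, and spatial sizes must be divisible by 4; the point is only to show where the per-scale inputs enter, where the skip connections go, and that one output is produced per scale.

```python
import numpy as np

def down2(x):
    """2x2 average pooling of an (H, W, C) array; stands in for a strided conv."""
    h, w, c = x.shape
    return x.reshape(h // 2, 2, w // 2, 2, c).mean(axis=(1, 3))

def up2(x):
    """Nearest-neighbour 2x upsampling; stands in for a transposed conv."""
    return x.repeat(2, axis=0).repeat(2, axis=1)

def toy_unet_forward(pyramid):
    """Trace a 3-level multi-input, multi-output U-shape.

    pyramid: hazy inputs at full, 1/2 and 1/4 resolution, each (H, W, C).
    Every "module" below is a placeholder average, not a learned block.
    """
    # Encoder: each Down module merges downsampled features with the
    # hazy input at its own scale (the multi-input part).
    e1 = pyramid[0]
    e2 = 0.5 * (down2(e1) + pyramid[1])
    e3 = 0.5 * (down2(e2) + pyramid[2])

    # Decoder: each Up module receives the matching encoder output through a
    # skip connection and emits an image at its own scale (the multi-output part).
    d3 = e3
    d2 = 0.5 * (up2(d3) + e2)
    d1 = 0.5 * (up2(d2) + e1)
    return [d1, d2, d3]

hazy = np.random.rand(64, 64, 3)
outputs = toy_unet_forward([hazy, down2(hazy), down2(down2(hazy))])
print([o.shape for o in outputs])
```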

Adaptive Multi-Scale Module (AMSM)

The AMSM provides adaptive multi-scale learning. It targets a common limitation of existing models: they handle features at different scales poorly. Its key idea is weighted fusion: rather than simply aggregating multi-scale feature maps, it combines them with learned weights, which integrates them more effectively while keeping computational overhead low.

The AMSM passes the input feature map through average pooling at several downsampling rates, followed by convolution layers, to capture multi-scale features. These features are then processed by the Frequency Selection Block and fused with learned weights before being forwarded to the subsequent branches. This lets the model learn multi-scale features efficiently while improving its generalization ability.
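
A minimal sketch of the pooling-and-weighted-fusion idea, assuming the fusion weights are a softmax over learnable per-branch logits (represented here by a hypothetical `fusion_logits` argument). The per-branch convolutions and the FSB are omitted, the pooling rates `(1, 2, 4)` are illustrative, and feature maps are laid out as (C, H, W) with H and W divisible by every rate.

```python
import numpy as np

def avg_pool(x, r):
    """Average-pool a (C, H, W) feature map by factor r (H, W divisible by r)."""
    c, h, w = x.shape
    return x.reshape(c, h // r, r, w // r, r).mean(axis=(2, 4))

def upsample(x, r):
    """Nearest-neighbour upsampling by factor r back to the input size."""
    return x.repeat(r, axis=1).repeat(r, axis=2)

def softmax(z):
    z = np.asarray(z, dtype=np.float64)
    e = np.exp(z - z.max())
    return e / e.sum()

def amsm_like_fusion(x, rates=(1, 2, 4), fusion_logits=None):
    """Pool x at several rates, restore resolution, and fuse with softmax weights.

    fusion_logits stands in for learned parameters (hypothetical).
    """
    if fusion_logits is None:
        fusion_logits = np.zeros(len(rates))  # equal weights
    weights = softmax(fusion_logits)          # non-negative, sums to 1
    branches = [upsample(avg_pool(x, r), r) for r in rates]
    fused = sum(w * b for w, b in zip(weights, branches))
    return fused, weights

feat = np.random.rand(16, 32, 32)
fused, weights = amsm_like_fusion(feat, fusion_logits=[0.5, 0.0, -0.5])
print(fused.shape, weights.round(3))
```

Normalizing the weights with a softmax keeps the fused map on the same scale as each branch, which is one simple way to get a weighted combination rather than a plain sum.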

Frequency Selection Block (FSB)

Like a precision instrument fine-tuning its sound, the FSB separates image features into high-frequency and low-frequency components, processes them independently, and then merges them back together. The FSB has two main parts: the frequency separator and the frequency fuser.

The frequency separator decomposes the input features. It extracts global information and uses it to generate weighting coefficients that emphasize the global features most important for understanding context. Specifically, a convolution followed by a Softmax produces the low-pass filters: because Softmax outputs are non-negative and sum to one, each filter computes a weighted average of neighboring values, which preserves smooth, low-frequency content and suppresses rapid variation.
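
A NumPy sketch of a content-dependent low-pass split, under stated assumptions: global average pooling supplies the global context, a hypothetical matrix `proj` stands in for the learned convolution that maps that context to filter logits, and each channel gets its own 3x3 filter. Taking the high-frequency part as the residual `x - low` is a common choice assumed here.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def depthwise_filter(x, kernels):
    """Filter each channel of x (C, H, W) with its own k x k kernel (reflect padding)."""
    c, h, w = x.shape
    k = kernels.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)), mode="reflect")
    out = np.zeros_like(x, dtype=np.float64)
    for i in range(k):
        for j in range(k):
            out += kernels[:, i, j][:, None, None] * xp[:, i:i + h, j:j + w]
    return out

def frequency_separator(x, proj, k=3):
    """Split x into low- and high-frequency parts with a content-dependent low-pass filter.

    proj: (C*k*k, C) matrix standing in for the learned convolution (hypothetical).
    """
    c = x.shape[0]
    context = x.mean(axis=(1, 2))                         # global average pooling -> (C,)
    logits = (proj @ context).reshape(c, k * k)           # one logit vector per channel
    kernels = softmax(logits, axis=-1).reshape(c, k, k)   # >= 0 and sums to 1: low-pass
    low = depthwise_filter(x, kernels)
    high = x - low                                        # complementary high-pass residual
    return low, high, kernels

rng = np.random.default_rng(0)
feat = rng.random((4, 16, 16))
proj = rng.normal(size=(4 * 9, 4))
low, high, kernels = frequency_separator(feat, proj)
```

A quick sanity check on the design: a constant input passes through the low-pass filter unchanged and leaves a zero high-frequency part, exactly because each kernel sums to one.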

The high-pass filters are derived from the low-pass filters (for example, as the complement of the low-pass response), so the high-frequency component comes at little extra cost. The frequency fuser then computes channel-wise weights with fully connected layers and a Softmax activation, modeling the interactions between channels. Its output combines the low-frequency and high-frequency information according to these weights, yielding a richer feature representation.
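
The fuser can be sketched as a squeeze-and-excitation-style gate with a Softmax across the two frequency branches. The layer shapes, the ReLU between the two fully connected layers, and the names `w1`/`w2` are assumptions for illustration; bias terms are omitted.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def frequency_fuser(low, high, w1, w2):
    """Fuse low/high-frequency features (C, H, W) with channel-wise softmax weights.

    w1: (R, C) and w2: (2*C, R) stand in for the two fully connected layers
    (hypothetical shapes; R is a bottleneck width).
    """
    c = low.shape[0]
    descriptor = (low + high).mean(axis=(1, 2))   # (C,) per-channel statistics
    hidden = np.maximum(w1 @ descriptor, 0.0)      # FC + ReLU (assumed activation)
    logits = (w2 @ hidden).reshape(2, c)           # row 0: low branch, row 1: high branch
    weights = softmax(logits, axis=0)              # per channel, the two weights sum to 1
    fused = weights[0][:, None, None] * low + weights[1][:, None, None] * high
    return fused, weights

rng = np.random.default_rng(0)
low, high = rng.random((8, 16, 16)), rng.random((8, 16, 16))
w1, w2 = rng.normal(size=(2, 8)), rng.normal(size=(16, 2))
fused, weights = frequency_fuser(low, high, w1, w2)
```

Because the Softmax runs across the two branches for each channel, every channel decides independently how much low-frequency versus high-frequency content to keep.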

Multi-Scale Contrastive Regularization (MSCR)

MSCR optimizes the dehazing process through contrastive regularization. The dehazed image produced by the network serves as the anchor and the ground-truth clear image as the positive sample, while the negative samples are the original hazy image and outputs from pre-trained networks.

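MSCR minimizes the L1 distance between the anchor and the positive sample while maximizing the L1 distance between the anchor and the negative samples. Pushing the anchor away from the negatives places a lower bound on the solution space, excluding outputs that still resemble the hazy or poorly restored images, which narrows the search and makes training more efficient.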
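
One common way to combine these two goals into a single objective, used in earlier contrastive-regularization work for dehazing, is a ratio of L1 distances. The form below is a sketch under that assumption, not the published MCRFS-Net formula. Here `\hat{J}` is the anchor (network output), `J` the positive (ground truth), `I^{-}_{j}` the N negatives, and `\epsilon` a small constant for numerical stability.

```latex
\mathcal{L}_{\mathrm{CR}} = \frac{\lVert \hat{J} - J \rVert_1}{\sum_{j=1}^{N} \lVert \hat{J} - I^{-}_{j} \rVert_1 + \epsilon}
```

Minimizing this quantity shrinks the numerator (pulling the output toward the ground truth) and grows the denominator (pushing it away from the negatives).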

Because the multi-input, multi-output design already provides hazy inputs and dehazed outputs at every scale, MSCR can form its samples within the network instead of relying on external networks, which significantly reduces computational cost. It also enforces consistency across scales, preventing distortions that scale variation might otherwise introduce.
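
A minimal NumPy sketch of a multi-scale contrastive term, assuming a ratio of pixel-space L1 distances at each scale, equal per-scale weights by default, and only the hazy input at each scale as the negative. The function names and the `scale_weights` parameter are hypothetical.

```python
import numpy as np

def l1(a, b):
    """Mean absolute error between two arrays of the same shape."""
    return float(np.mean(np.abs(a - b)))

def contrastive_ratio(anchor, positive, negatives, eps=1e-7):
    """L1 distance to the positive divided by the summed L1 distance to the negatives."""
    pull = l1(anchor, positive)
    push = sum(l1(anchor, n) for n in negatives)
    return pull / (push + eps)

def mscr_loss(outputs, targets, hazy_inputs, scale_weights=None):
    """Weighted sum of the contrastive ratio over scales.

    outputs, targets, hazy_inputs: lists with one array per scale, full resolution first.
    Only the hazy input at each scale is used as a negative here (assumption).
    """
    if scale_weights is None:
        scale_weights = [1.0] * len(outputs)
    return sum(
        w * contrastive_ratio(o, t, [h])
        for w, o, t, h in zip(scale_weights, outputs, targets, hazy_inputs)
    )

rng = np.random.default_rng(1)
shapes = [(3, 16, 16), (3, 8, 8), (3, 4, 4)]
gts = [rng.random(s) for s in shapes]
hazys = [rng.random(s) for s in shapes]
mids = [0.5 * (g + h) for g, h in zip(gts, hazys)]
print(mscr_loss(mids, gts, hazys))
```

An output halfway between the hazy input and the ground truth scores a ratio of about 1 per scale, while a perfect output scores 0, so the loss rewards moving toward the clear image at every scale.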

Loss Functions

To produce high-quality dehazed images, MCRFS-Net combines several loss functions. The L1 loss is the basic reconstruction term, minimizing pixel-level differences between the dehazed output and the ground-truth image.

A frequency-domain loss further refines the model. It uses convolution kernels to simulate filtering in the frequency domain, improving the fidelity of the output images. The overall loss combines the spatial, frequency, and regularization terms, with weights that balance their contributions.
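
A sketch of how the terms might be combined. As a concrete assumption, the frequency term compares the responses of a fixed 3x3 Laplacian (a high-pass kernel) applied to prediction and ground truth; the contrastive term is passed in precomputed, and the weights `lam_freq` and `lam_cr` are hypothetical values, not MCRFS-Net settings.

```python
import numpy as np

# 3x3 Laplacian: a fixed high-pass kernel (assumed choice for the frequency term).
LAPLACIAN = np.array([[0.0, 1.0, 0.0],
                      [1.0, -4.0, 1.0],
                      [0.0, 1.0, 0.0]])

def filter2d(img, kernel):
    """Filter a 2-D array with a 3x3 kernel using edge padding (same output size)."""
    h, w = img.shape
    p = np.pad(img, 1, mode="edge")
    out = np.zeros((h, w))
    for i in range(3):
        for j in range(3):
            out += kernel[i, j] * p[i:i + h, j:j + w]
    return out

def frequency_loss(pred, gt, kernel=LAPLACIAN):
    """L1 distance between high-pass responses, averaged over the channels of (C, H, W) arrays."""
    return float(np.mean([np.mean(np.abs(filter2d(p, kernel) - filter2d(g, kernel)))
                          for p, g in zip(pred, gt)]))

def total_loss(pred, gt, cr_term, lam_freq=0.1, lam_cr=0.1):
    """Spatial L1 + weighted frequency term + weighted contrastive-regularization term.

    lam_freq and lam_cr are hypothetical weights.
    """
    spatial = float(np.mean(np.abs(pred - gt)))
    return spatial + lam_freq * frequency_loss(pred, gt) + lam_cr * cr_term

rng = np.random.default_rng(0)
gt = rng.random((3, 32, 32))
pred = np.clip(gt + 0.05 * rng.standard_normal(gt.shape), 0.0, 1.0)
print(total_loss(pred, gt, cr_term=0.8))
```

Because the Laplacian's weights sum to zero, a uniform brightness offset is invisible to the frequency term and is penalized only by the spatial L1, while errors in edges and texture show up in both.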

By combining these loss components, MCRFS-Net can correct discrepancies in spatial features while also capturing subtleties that are easier to see in frequency-based representations.

Through this design and the careful integration of its components, MCRFS-Net stands out in the field of image dehazing. Its architecture and finely tuned modules let it address the challenges posed by hazy images and recover clearer views of the scene.