Exploring IvyGPT: A Revolutionary Tool in Healthcare AI

In recent years, the intersection of artificial intelligence (AI) and healthcare has become a rich field of exploration, promising to enhance diagnostic accuracy and improve patient care. A prime example of this innovation is IvyGPT, a specialized large language model (LLM) designed for use in the medical domain. In this article, we delve into the sophisticated architecture of IvyGPT, the technology behind it, and how it integrates with cutting-edge retrieval and generative mechanisms to advance healthcare applications.

IvyGPT: A Healthcare-Specialized LLM

IvyGPT was introduced by Wang et al. and is built on the LLaMA architecture. It aims to address the shortcomings of general-purpose LLMs such as ChatGPT in medical applications, where answers to specialized queries are often not accurate or specific enough for clinical use. To that end, IvyGPT was trained on high-quality medical question-and-answer datasets and further refined through reinforcement learning from human feedback (RLHF). This approach enables IvyGPT to sustain extended, informative dialogues and generate detailed treatment plans that align with medical best practices.

One of the standout features of IvyGPT is its use of the QLoRA training technique, which fine-tunes small low-rank adapter weights on top of a frozen, quantized base model and so sharply reduces the memory needed for training. Additionally, the research team has contributed a dataset tailored to the Chinese healthcare context, and IvyGPT is reported to show significant improvements over existing models in this domain. These results suggest that IvyGPT could serve in a variety of capacities, including medical education, patient self-assistance, and consultation services, with the aim of improving the precision and efficiency of medical diagnosis.
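
To illustrate the low-rank adapter idea that QLoRA relies on, here is a minimal sketch in plain Python. It is not IvyGPT's training code and it leaves out quantization entirely: the frozen weight matrix is kept in full precision for readability. The names `lora_forward` and `trainable_params`, the scaling factor `alpha`, and the toy matrices are assumptions made for this example.

```python
def matvec(m, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]

def lora_forward(w_frozen, a, b, x, alpha=1.0):
    """Compute y = W x + (alpha / r) * B (A x).

    W stays frozen; only the small matrices A (r x d_in) and
    B (d_out x r) would be updated during fine-tuning.
    """
    r = len(a)  # adapter rank
    base = matvec(w_frozen, x)
    update = matvec(b, matvec(a, x))
    return [y + (alpha / r) * u for y, u in zip(base, update)]

def trainable_params(d_in, d_out, r):
    """Number of adapter parameters for one weight matrix."""
    return r * (d_in + d_out)

# Toy example: 2x2 frozen weight, rank-1 adapter.
w = [[1, 0], [0, 1]]
a = [[1, 1]]        # shape 1 x 2
b = [[0.5], [0.0]]  # shape 2 x 1
print(lora_forward(w, a, b, [2, 3]))

# Adapter size versus full fine-tuning for a 4096 x 4096 layer at rank 16.
print(trainable_params(4096, 4096, 16), 4096 * 4096)
```

For a 4096 x 4096 layer at rank 16, the adapter has 131,072 trainable parameters against 16,777,216 for the full matrix, which is where most of the memory saving during fine-tuning comes from.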

Enhancing Knowledge Retrieval with RAG

A key element of the system built around IvyGPT is Retrieval-Augmented Generation (RAG). RAG connects a pre-trained neural retriever with a pre-trained sequence-to-sequence (seq2seq) model to form an end-to-end trainable framework. Because knowledge is fetched from external sources at query time, the system can draw on new or updated documents without retraining the generator.

This work implements a dual-search strategy that queries both Elasticsearch and Chroma. The candidates returned by the two searches are then semantically re-ranked with the ColBERTv2 model, so that the passages finally retrieved are both relevant and contextually appropriate.

Elasticsearch: Speeding Up Information Retrieval

Elasticsearch is widely used in knowledge-centric chatbots because it searches large text repositories quickly. Its near-real-time indexing makes newly added or updated documents searchable soon after they are written, which helps a chatbot deliver accurate and timely content. By building efficient indexes and running fast searches across extensive datasets, Elasticsearch acts as the backbone of the system’s term-based retrieval, so that users receive pertinent knowledge promptly.
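
The idea behind term-based search can be sketched with a tiny inverted index in plain Python. This is not Elasticsearch's API or implementation (Elasticsearch is built on Lucene and scores with BM25 by default). The functions `build_index` and `term_search`, the sample documents, and the scoring rule (a count of matching query terms) are assumptions made for illustration.

```python
from collections import defaultdict

def tokenize(text):
    """Lowercase and split on whitespace; real analyzers do far more."""
    return text.lower().split()

def build_index(docs):
    """Map each term to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index

def term_search(index, query):
    """Rank documents by how many distinct query terms they contain."""
    scores = defaultdict(int)
    for term in set(tokenize(query)):
        for doc_id in index.get(term, ()):
            scores[doc_id] += 1
    # Highest score first; ties broken by document id for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))

# Hypothetical documents, used only to exercise the functions.
docs = {
    "d1": "fibrodysplasia ossificans progressiva is a rare bone disorder",
    "d2": "common cold symptoms and home care",
    "d3": "rare disorder of the retina",
}
index = build_index(docs)
print(term_search(index, "rare bone disorder"))
```

Because lookup is by exact term, a rare word such as "fibrodysplasia" is found as soon as it appears in any indexed document, whether or not an embedding model has ever seen it.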

Chroma: Advanced Vector Similarity Search

Chroma, by contrast, provides vector similarity search over large text collections. It uses a pre-trained text embedding model to convert documents into dense vectors, then indexes those vectors so that the documents closest to a query can be found quickly. This lets the system retrieve passages that are semantically related to a question even when they share few exact words with it.
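
As a rough illustration of dense retrieval, the sketch below ranks documents by cosine similarity to a query vector. It does not use Chroma's API and performs a brute-force scan rather than an approximate index. The three-dimensional vectors, the document names, and the function `nearest` are hypothetical stand-ins for what an embedding model and a vector store would provide.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def nearest(query_vec, doc_vecs, k=2):
    """Return the ids of the k documents most similar to the query."""
    ranked = sorted(
        doc_vecs,
        key=lambda doc_id: cosine(query_vec, doc_vecs[doc_id]),
        reverse=True,
    )
    return ranked[:k]

# Toy embeddings; a real system would obtain these from an embedding model.
doc_vecs = {
    "fracture_care": [0.9, 0.1, 0.0],
    "child_vaccines": [0.1, 0.9, 0.1],
    "cataract_surgery": [0.0, 0.2, 0.9],
}
query_vec = [0.8, 0.2, 0.1]
print(nearest(query_vec, doc_vecs, k=2))
```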

ColBERTv2: Fine-Tuning Retrieval Precision

Building on these foundations, ColBERTv2 is a retrieval model that captures the contextual semantics of individual tokens through multi-vector representations: each query and each document is encoded as a set of token-level vectors rather than a single vector. It reduces the storage cost of these representations through residual compression and improves accuracy by training with supervision distilled from a cross-encoder. In this system, ColBERTv2 is used to determine which search results are most semantically aligned with the question.
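
The multi-vector scoring used by ColBERT-style models is often called late interaction: every query token vector is matched to its most similar document token vector, and the best matches are summed. The sketch below shows only that scoring rule on hand-written two-dimensional vectors. It does not load ColBERTv2 and does not implement residual compression; `maxsim_score` and the toy vectors are names and data assumed for this example.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def maxsim_score(query_tokens, doc_tokens):
    """Sum over query tokens of the best-matching document token similarity."""
    return sum(max(dot(q, d) for d in doc_tokens) for q in query_tokens)

# Toy token embeddings (one vector per token).
query = [[1.0, 0.0], [0.0, 1.0]]
doc_a = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]  # covers both query tokens
doc_b = [[1.0, 0.0], [0.9, 0.1]]              # covers only the first

print(maxsim_score(query, doc_a), maxsim_score(query, doc_b))
```

Here `doc_a` scores higher because each query token finds a close match in it, whereas `doc_b` matches only one of the two query tokens well.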

The Structural Design of IvyGPT

The system architecture of IvyGPT is meticulously designed to handle the complexities inherent in specialized medical domains. In areas such as rare diseases, medical terminologies are often underrepresented in training data, making it challenging for traditional vector-based approaches to accurately capture their semantics. To tackle this issue, IvyGPT integrates Elasticsearch’s term-based search mechanism, allowing for the effective retrieval of even the most specialized and recent terminology.

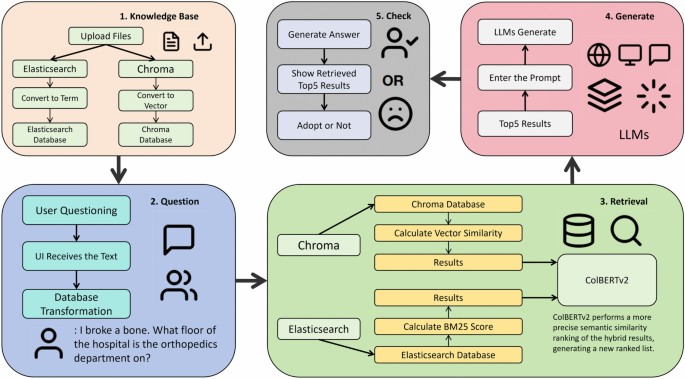

The system is organized into five interconnected modules: Knowledge Base, Question, Retrieval, Generation, and Check. The Check module plays a critical role by validating generated answers against the retrieved data before they are presented to the user.
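
The article does not say how the Check module performs its validation, so the following is only one possible heuristic, shown to make the idea concrete: measure how much of the answer's vocabulary is backed by the retrieved passages and reject answers below a threshold. The functions `support_ratio` and `check`, the 0.6 threshold, and the sample strings are all hypothetical.

```python
def token_set(text):
    """Lowercased words with surrounding punctuation stripped."""
    words = (w.strip(".,;:!?").lower() for w in text.split())
    return {w for w in words if w}

def support_ratio(answer, passages):
    """Fraction of answer tokens that appear in at least one passage."""
    answer_tokens = token_set(answer)
    if not answer_tokens:
        return 0.0
    evidence = set()
    for passage in passages:
        evidence |= token_set(passage)
    return len(answer_tokens & evidence) / len(answer_tokens)

def check(answer, passages, threshold=0.6):
    """Accept the answer only if enough of it is backed by the passages."""
    return support_ratio(answer, passages) >= threshold

# Toy strings, used only to exercise the functions.
passages = ["Amblyopia in children is treated with patching of the stronger eye."]
print(check("Amblyopia is treated with patching.", passages))
print(check("Surgery cures amblyopia overnight.", passages))
```

A production check would more plausibly rely on a model-based comparison between answer and evidence; lexical overlap is used here only because it is easy to read and run.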

The Question Module: User Interaction at Its Core

The Question module is designed to facilitate seamless interactions with users, handling inquiries through a dynamic chat interface. Users can submit questions in free-form text, with the system providing real-time feedback. Upon submission, the user’s question is converted into a vector representation for the retrieval process that follows.

The Retrieval Module: A Hybrid Approach

In the Retrieval phase, the system takes a hybrid approach and searches for the user’s question in both the Chroma and Elasticsearch databases. Chroma compares dense vector embeddings for semantic similarity, while Elasticsearch uses traditional term-based search. Merging the results from both databases yields a candidate set that covers both semantic matches and exact terminology.

The merged results are then processed by the ColBERTv2 model, which re-ranks them and selects the top five passages based on semantic alignment with the question. These passages are supplied to IvyGPT as part of the prompt, grounding the answer it generates.
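
The merge, re-rank, and prompt-building steps can be sketched as follows. This is an illustrative outline, not the authors' implementation: the document ids, the `toy_scores` dictionary standing in for ColBERTv2 scores, and the functions `merge_candidates`, `rerank_top_k`, and `build_prompt` (including its prompt wording) are all assumptions.

```python
def merge_candidates(dense_hits, term_hits):
    """Union of both result lists, keeping first-seen order, no duplicates."""
    seen, merged = set(), []
    for doc_id in list(dense_hits) + list(term_hits):
        if doc_id not in seen:
            seen.add(doc_id)
            merged.append(doc_id)
    return merged

def rerank_top_k(candidates, score_fn, k=5):
    """Sort candidates by a relevance score and keep the best k."""
    return sorted(candidates, key=score_fn, reverse=True)[:k]

def build_prompt(question, passages):
    """Place the selected passages ahead of the question as context."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"

# Hypothetical hits from the dense (Chroma-like) and term (Elasticsearch-like) searches.
dense_hits = ["d3", "d1", "d7", "d4"]
term_hits = ["d1", "d9", "d2", "d3"]

# Stand-in for re-ranker scores; a real system would score each passage with a model.
toy_scores = {"d1": 0.92, "d2": 0.35, "d3": 0.88, "d4": 0.41, "d7": 0.67, "d9": 0.59}

merged = merge_candidates(dense_hits, term_hits)
top_five = rerank_top_k(merged, toy_scores.get, k=5)
print(top_five)
print(build_prompt("What is the first-line treatment?", ["passage one", "passage two"]))
```

Deduplicating before re-ranking means a passage found by both searches is scored only once, and the re-ranker alone decides the final order.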

User-Centric Output Presentation

In the final output stage, the system presents users with generated answers alongside the five most relevant search results. This design encourages users to cross-check the responses against the search outputs, fostering a sense of transparency and trust.

A Rich Knowledge Base

The knowledge base covers a wide range of medical subspecialties, including Orthopedics, Pediatrics, and Ophthalmology. It is built from an extensive collection of medical files sourced from a Grade 3A comprehensive hospital.

These files undergo preprocessing to generate vector representations suitable for storage in both Chroma and Elasticsearch, ensuring that retrieval is both efficient and contextually relevant. The comprehensive Hospital Departmental Guide is also included, detailing departmental expertise, unique disease attributes, and more.

Through this sophisticated design and integration of technologies, IvyGPT emerges as a groundbreaking solution in medical AI, poised to significantly enhance the capabilities of healthcare-focused conversational systems.