Translation of Ancient Books

Understanding the Challenges of Ancient Text Translation

Translating ancient texts, particularly those written in Classical Chinese, poses unique challenges that demand sophisticated language understanding from a model. To evaluate how well Multimodal Large Language Models (MLLMs) handle such translation, we focus on two core tasks: translating Classical Chinese into English and translating Classical Chinese into Modern Chinese.

Evaluation Metrics: Measuring Precision in Translation

To ensure our assessment is both comprehensive and objective, we employ two widely acknowledged quantitative metrics: Bilingual Evaluation Understudy (BLEU) and Character n-gram F-score (CHRF).

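- BLEU measures the overlap of word n-grams between a machine-generated translation and one or more reference translations. It combines clipped n-gram precision with a brevity penalty for overly short outputs.

- CHRF operates on character n-grams, combining character-level precision and recall into an F-score. Because it does not depend on word segmentation and gives partial credit for partially matching words, it complements BLEU's word-level matching.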
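To make the two metrics concrete, the following is a minimal pure-Python sketch of sentence-level BLEU and CHRF. It is illustrative only: the function names are hypothetical, BLEU is unsmoothed, CHRF uses the averaged precision/recall formulation with β = 2, and tokenization is left to the caller (whitespace tokens for English, single characters for Modern Chinese being one common choice). A real evaluation would typically rely on an established implementation such as sacreBLEU, whose defaults and edge-case handling differ.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return a Counter of all n-grams (as tuples) in a token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(hypothesis, references, max_n=4):
    """Unsmoothed sentence BLEU: geometric mean of clipped n-gram precisions times a brevity penalty."""
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        hyp_counts = ngrams(hypothesis, n)
        # Clip each hypothesis n-gram count by its maximum count in any single reference.
        max_ref_counts = Counter()
        for ref in references:
            for gram, count in ngrams(ref, n).items():
                max_ref_counts[gram] = max(max_ref_counts[gram], count)
        clipped = sum(min(c, max_ref_counts[g]) for g, c in hyp_counts.items())
        total = max(sum(hyp_counts.values()), 1)
        if clipped == 0:
            return 0.0  # without smoothing, any zero precision makes BLEU zero
        log_precision_sum += math.log(clipped / total) / max_n
    # Brevity penalty against the reference length closest to the hypothesis length.
    hyp_len = len(hypothesis)
    ref_len = min((abs(len(r) - hyp_len), len(r)) for r in references)[1]
    bp = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / max(hyp_len, 1))
    return bp * math.exp(log_precision_sum)


def chrf(hypothesis, reference, max_n=6, beta=2.0):
    """CHRF: F-beta of character n-gram precision and recall, each averaged over orders 1..max_n."""
    # Spaces are ignored, so the score does not depend on word segmentation.
    hyp = hypothesis.replace(" ", "")
    ref = reference.replace(" ", "")
    precisions, recalls = [], []
    for n in range(1, max_n + 1):
        hyp_counts = ngrams(list(hyp), n)
        ref_counts = ngrams(list(ref), n)
        matches = sum((hyp_counts & ref_counts).values())  # multiset overlap
        # Only orders the strings are long enough to contain are averaged.
        if hyp_counts:
            precisions.append(matches / sum(hyp_counts.values()))
        if ref_counts:
            recalls.append(matches / sum(ref_counts.values()))
    p = sum(precisions) / len(precisions) if precisions else 0.0
    r = sum(recalls) / len(recalls) if recalls else 0.0
    if p + r == 0:
        return 0.0
    return (1 + beta**2) * p * r / (beta**2 * p + r)
```

For translation into English, `sentence_bleu(hyp.split(), [ref.split()])` scores whitespace tokens; for translation into Modern Chinese, passing `list(hyp)` and `[list(ref)]` scores characters instead, while `chrf` works on the raw strings in both cases.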

The results, illustrated in Figure 3, compare the performance of the evaluated models on both translation tasks under these two metrics.

Comparative Performance of Different Models

According to our evaluation, Qwen-omni-turbo performed best overall across the two translation tasks, achieving the highest BLEU and CHRF scores for translation into English and competitive scores for translation into Modern Chinese. This suggests a strong grasp of the nuances of Classical Chinese. GPT-4o followed closely and performed particularly well on translation into Modern Chinese, producing coherent output.

Other models, such as Doubao-1-5-vision-pro-32k-250115 and GLM-4V-Plus-0111, also performed well but trailed the top two models in translation quality. Most open-source models lagged behind their closed-source counterparts, although some achieved commendable results on specific metrics. Taken together, the spread of scores indicates that the complexity of Classical Chinese, compared with more contemporary forms of the language, remains a significant challenge even for strong models.

Insights on Translation Difficulty: Ancient vs. Modern Languages

A notable finding of this evaluation is that nearly all models performed better when translating ancient texts into Modern Chinese than into English. This gap likely stems from the linguistic and cultural continuity between Classical and Modern Chinese, which share a writing system and allow a more direct mapping between the two. English, by contrast, differs substantially from Classical Chinese in expression, cultural context, and historical background.

Recognition of Ancient Texts

The evaluation of ancient text recognition covers two types of material: photocopied (printed) texts and handwritten texts. This distinction lets us assess model performance across a range of writing styles, which is crucial for judging how effectively models process historical documents.

Using BLEU and Recall-Oriented Understudy for Gisting Evaluation (ROUGE), we quantify the similarity between the model-generated text and the reference transcription. As shown in Table 3, recognition of photocopied texts generally yielded higher scores than recognition of handwritten texts, likely reflecting the greater clarity and uniformity of printed materials.
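The text does not say which ROUGE variant is reported. The sketch below assumes ROUGE-L (based on the longest common subsequence) computed over characters, which avoids word segmentation for Classical Chinese; the function names are hypothetical, and established packages such as Google's `rouge-score` differ in tokenization and options.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two sequences (row-by-row dynamic programming)."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            # Extend a match diagonally, otherwise carry the best score from above or the left.
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(candidate, reference, beta=1.0):
    """Character-level ROUGE-L F-score of a recognized text against its reference transcription."""
    cand, ref = list(candidate), list(reference)
    if not cand or not ref:
        return 0.0
    lcs = lcs_length(cand, ref)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand)  # share of recognized characters that lie on the common subsequence
    recall = lcs / len(ref)      # share of reference characters that were recovered in order
    return (1 + beta**2) * precision * recall / (recall + beta**2 * precision)
```

Because the longest common subsequence respects character order, a recognizer that outputs the right characters in a scrambled sequence, as can happen with unusual manuscript layouts, is penalized under this metric.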

Challenges of Handwritten Texts

Handwritten ancient texts pose significant challenges because of their variable styles and layouts. GLM-4V-Plus-0111 achieved the highest performance on handwritten text recognition, indicating a robust ability to adapt to noisy and unstructured visual patterns. Many other models, however, struggled considerably with this task, underscoring how much the variability of historical manuscripts complicates recognition.

Metrics for Performance Measurement

For a more detailed assessment, we use three metrics: precision, recall, and F1 score. Precision reflects how many of the recognized characters are correct, recall reflects how many of the reference characters are recovered, and F1 is their harmonic mean, so together they evaluate whether a model recognizes characters both accurately and completely. As shown in Figure 4, models such as GLM-4V-Plus-0111 performed admirably on handwritten texts, while lower-ranked models, particularly those with fewer parameters, fared poorly.
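A minimal sketch of how character-level precision, recall, and F1 could be computed for a recognition output is shown below. The matching rule is an assumption made for illustration: characters are compared as multisets (bag of characters), ignoring position, and the function name `char_prf` is hypothetical. An alignment-based variant would additionally penalize characters recognized in the wrong place.

```python
from collections import Counter


def char_prf(predicted, reference):
    """Character-level precision, recall and F1 using multiset (bag-of-characters) overlap."""
    pred_counts, ref_counts = Counter(predicted), Counter(reference)
    # Each reference character can be matched at most as many times as it occurs.
    matched = sum((pred_counts & ref_counts).values())
    precision = matched / len(predicted) if predicted else 0.0  # are the output characters correct?
    recall = matched / len(reference) if reference else 0.0     # was the whole text recovered?
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

For example, a model that reads only 天地玄 from the reference 天地玄黃 keeps a precision of 1.0 but drops to a recall of 0.75, which is why recall and F1 are needed alongside a correctness score to capture completeness.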

Image Captioning: A Deeper Understanding of Visual Content

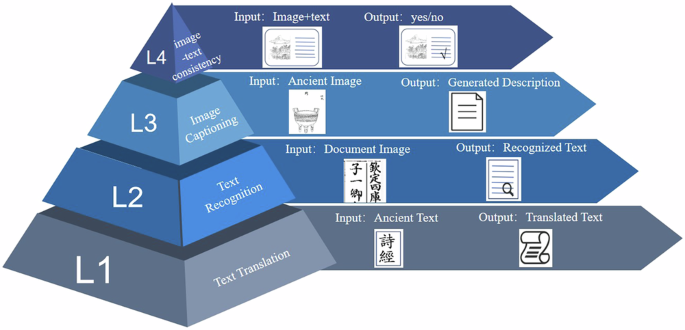

In addition to text recognition, we assess how well MLLMs understand images from ancient books through two tasks: image captioning and image classification. Classification can identify what an illustration depicts, but it lacks the depth to capture the nuanced details of ancient illustrations, which captioning requires the model to describe.

For image captioning, we use BLEU and ROUGE to compare generated descriptions with human-annotated reference captions. As shown in Figure 5, most models achieved relatively low caption-generation scores, indicating that this task remains difficult across the board.

Consistency in Image-Text Relations

We also examine whether models can judge the consistency between images and their accompanying text. Accuracy serves as the primary metric, measuring how often a model correctly decides whether the visual content semantically matches the given description. Performance on this task varied widely, with Qwen-omni-turbo and several other models achieving near-perfect accuracy.
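Since MLLMs answer in free text, accuracy on the consistency task requires mapping each answer to a binary judgment before comparing it with the gold label. The sketch below assumes English yes/no style answers and a simple first-word rule; both the parsing rule and the function names are hypothetical, and unparseable answers are counted as incorrect.

```python
import re


def parse_judgment(response):
    """Map a free-text answer to True (consistent), False (inconsistent) or None (unparseable).

    Hypothetical rule: only the first English word of the answer is inspected.
    """
    words = re.findall(r"[a-z]+", response.lower())
    if not words:
        return None
    first = words[0]
    if first in ("yes", "consistent"):
        return True
    if first in ("no", "not", "inconsistent"):
        return False
    return None


def judgment_accuracy(responses, labels):
    """Share of items whose parsed judgment equals the gold label; None never matches a label."""
    if len(responses) != len(labels):
        raise ValueError("responses and labels must have the same length")
    if not labels:
        return 0.0
    correct = sum(parse_judgment(r) == y for r, y in zip(responses, labels))
    return correct / len(labels)
```

Counting unparseable answers as wrong is a design choice; excluding them instead would inflate the accuracy of models that frequently hedge or refuse.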

Integrated Evaluation of MLLM Capabilities

Based on the evaluations across these facets of ancient text processing, we rank the models by overall performance. All metrics are normalized to a score between 0 and 100, capturing each model's effectiveness across translation, text recognition, image captioning, and consistency judgment.
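The text does not specify how individual metrics are normalized or weighted when forming the overall score. One simple scheme, assumed here purely for illustration, scales each metric from [0, 1] to [0, 100], averages metrics within a task, and weights all tasks equally; the function name `overall_score` is hypothetical.

```python
def overall_score(task_metrics):
    """Combine per-task metrics into one 0 to 100 score.

    task_metrics maps a task name to {metric name: value in [0, 1]}.
    Assumption: metrics are averaged within a task and tasks are weighted equally.
    """
    if not task_metrics:
        raise ValueError("no tasks given")
    task_scores = {}
    for task, metrics in task_metrics.items():
        if not metrics:
            raise ValueError(f"task {task!r} has no metrics")
        for name, value in metrics.items():
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{task}/{name}={value} is outside [0, 1]")
        # Scale the mean metric of this task onto a 0 to 100 range.
        task_scores[task] = 100.0 * sum(metrics.values()) / len(metrics)
    overall = sum(task_scores.values()) / len(task_scores)
    return overall, task_scores
```

Equal task weights keep a task with many metrics, such as recognition, from dominating the ranking; a different weighting would change the ordering of closely matched models.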

Table 4 presents a comprehensive overview of model performance. Top-ranking models such as GPT-4o and Qwen-omni-turbo show superior abilities across tasks, while the results of lower-ranking models point to the need for further fine-tuning and architectural improvements in handling ancient texts and images.

Visual Representation of Model Performance

To visually represent performance disparities, we generate bar charts for models that scored above 55. These charts, depicted in Figure 6, delineate each model’s relative strengths and weaknesses across multiple tasks, offering intuitive insights into their capabilities in understanding and processing ancient materials.

In summary, this evaluation reveals substantial variation in MLLM performance and underscores the interplay of language understanding, text recognition, and image understanding required to process ancient books.