Understanding Dataset Descriptions in Deep Learning for Radio Spectrum Sensing

In telecommunications, particularly with the spread of 5G and LTE technologies, precise and efficient radio spectrum sensing has become essential. The datasets used to train deep learning models are fundamental to improving spectrum sensing. This article describes the structure and elements of these datasets and explains their role in developing robust spectrum sensing algorithms.

Components of the Datasets

The datasets used in this research are built around three components:

- Synthetic Training Data: This artificially generated data simulates various scenarios in a controlled environment. Employing MATLAB’s 5G Toolbox™ and LTE Toolbox™, the synthetic dataset consists of 128 × 128 spectrogram images. By using simulated New Radio (NR) and LTE signals, it ensures the training process has a foundation built on diverse signal patterns. Signals are then further adjusted to reflect realistic deployment conditions, including user mobility and frequency offsets. Each training frame spans 40 milliseconds and encapsulates either NR, LTE, or a combination of both, categorized into three semantic classes: LTE, NR, and Noise.

- Real-World Captured Data: These datasets are sourced from real-time communications in existing 5G/LTE networks. This data, collected from RF receivers, reflects authentic signal conditions, noise, and interference. It plays a critical role in validating model robustness and addressing the potential domain shifts that could occur between the synthetic and real-world scenarios.

- Pre-Trained Model: Starting from a pre-trained model shortens training. Through transfer learning, the DeepLabv3+ semantic segmentation architecture with a ResNet-50 backbone is applied. Because it builds on features learned from large datasets, this approach allows faster model training and better generalization.
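To make the 128 × 128 spectrogram format concrete, here is a minimal sketch of turning complex baseband samples into such an image. It is an illustration only: the source generates its data with MATLAB toolboxes, while this version uses a plain NumPy short-time FFT. The function name `spectrogram_128`, the Hann window, and the toy tone input are all assumptions made for the example.

```python
import numpy as np

def spectrogram_128(iq, n_fft=128, n_frames=128):
    """Return an (n_fft, n_frames) spectrogram in dB from complex samples.

    Hypothetical helper: rows are frequency bins, columns are time frames.
    """
    iq = np.asarray(iq, dtype=np.complex128)
    if iq.size < n_fft:
        raise ValueError("need at least n_fft samples")
    # Spread n_frames window start positions evenly over the capture.
    starts = np.linspace(0, iq.size - n_fft, n_frames).astype(int)
    window = np.hanning(n_fft)
    frames = np.stack([iq[s:s + n_fft] * window for s in starts])
    # FFT per frame; fftshift puts 0 Hz in the middle of the frequency axis.
    spectrum = np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1)
    power_db = 10.0 * np.log10(np.abs(spectrum) ** 2 + 1e-12)
    return power_db.T  # shape (frequency, time)

# Toy input (assumption): a complex tone at +0.25 cycles/sample plus weak noise.
rng = np.random.default_rng(0)
n = 16384
t = np.arange(n)
tone = np.exp(2j * np.pi * 0.25 * t)
noise = 0.01 * (rng.standard_normal(n) + 1j * rng.standard_normal(n))
spec = spectrogram_128(tone + noise)
print(spec.shape)
```

A real pipeline would also map the dB values to pixel intensities or a colormap before saving the image; that step is omitted here.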

Synthetic Data Generation Techniques

The synthetic data represents network conditions realistically because the generated signals are passed through channel models defined by the International Telecommunication Union (ITU) and the 3rd Generation Partnership Project (3GPP). As a result, the training data includes the practical propagation effects that matter in radio spectrum sensing.

Moreover, random shifts in both the time and frequency domains emulate the variability of real transmissions. This variability exposes the model to diverse signal patterns, improving its adaptability and effectiveness in real-world applications.
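The random time and frequency shifts described above can be sketched as follows. This is a simplified illustration, not the generation procedure of the source: the function name `random_shift`, the circular delay, and the parameter ranges are assumptions chosen to keep the example short.

```python
import numpy as np

def random_shift(iq, rng, max_time_shift, max_freq_offset):
    """Apply a random circular delay and a random frequency offset.

    max_time_shift is in samples; max_freq_offset is in cycles per sample.
    Both ranges are hypothetical and would be tuned to the deployment.
    """
    iq = np.asarray(iq, dtype=np.complex128)
    delay = int(rng.integers(0, max_time_shift + 1))
    offset = float(rng.uniform(-max_freq_offset, max_freq_offset))
    # Time shift: circular roll (an assumption; a real generator might
    # instead place the burst inside a longer frame).
    shifted = np.roll(iq, delay)
    # Frequency shift: multiply by a complex exponential.
    n = np.arange(iq.size)
    shifted = shifted * np.exp(2j * np.pi * offset * n)
    return shifted, delay, offset

rng = np.random.default_rng(42)
frame = rng.standard_normal(1024) + 1j * rng.standard_normal(1024)
augmented, delay, offset = random_shift(
    frame, rng, max_time_shift=100, max_freq_offset=0.05
)
```

Because the frequency shift only rotates the phase of each sample, the per-sample magnitude is unchanged; only the position of the signal in the time-frequency plane moves.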

Semantic Segmentation for Signal Identification

For effective training, the data is annotated pixel by pixel for semantic segmentation. Each pixel in the spectrogram is labeled according to the presence and characteristics of signal energy in the time-frequency domain. Active signal regions are encoded by protocol type (LTE or NR), while non-signal areas are labeled as Noise. This labeling trains models to recognize and differentiate the three classes.
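As a minimal sketch of pixel-wise labeling, the example below builds a label image from known time-frequency regions, which is possible for synthetic data because the generator knows where each signal was placed. The integer class IDs, the function name `label_mask`, and the region coordinates are assumptions for illustration.

```python
import numpy as np

NOISE, LTE, NR = 0, 1, 2  # hypothetical integer class IDs

def label_mask(shape, regions):
    """Build a pixel-wise label image.

    regions: iterable of (class_id, f_lo, f_hi, t_lo, t_hi) with half-open
    pixel ranges along the frequency (rows) and time (columns) axes.
    """
    mask = np.full(shape, NOISE, dtype=np.uint8)
    for class_id, f_lo, f_hi, t_lo, t_hi in regions:
        mask[f_lo:f_hi, t_lo:t_hi] = class_id
    return mask

# Toy layout: an LTE band active for the whole frame and an NR burst
# occupying a different band for part of the frame.
mask = label_mask((128, 128), [(LTE, 10, 40, 0, 128), (NR, 60, 110, 32, 96)])
counts = np.bincount(mask.ravel(), minlength=3)
print(dict(zip(["Noise", "LTE", "NR"], counts.tolist())))
```

Every pixel not covered by a signal region keeps the Noise label, which is why Noise usually dominates the pixel counts.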

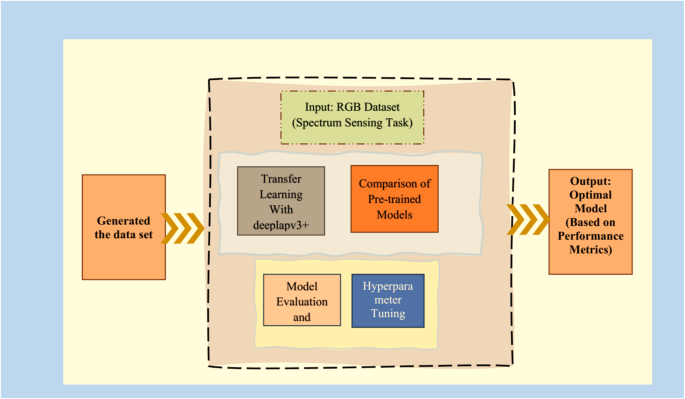

Training Approaches and Algorithms

The deep learning framework used in this research takes two approaches to semantic segmentation of the spectrograms. The first is a custom network designed from scratch for 128 × 128 RGB images, which can be tailored to the characteristics of the dataset. The second is transfer learning with DeepLabv3+, which reuses pre-learned features; this speeds up training and improves model performance, particularly when only limited labeled data is available.

Training these models is computationally demanding, so cloud computing infrastructure is employed. It enables systematic hyperparameter tuning and dynamic workflows, and it makes deploying the model in real-time scenarios feasible.

Dataset Splitting and Performance Evaluation

The dataset is divided into three parts: 80% for training, 10% for validation, and the remaining 10% for testing. This split allows the model to be tuned during training and evaluated on data it has not seen. Class imbalance is addressed with class weighting, which prevents the dominant class from skewing the results.
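The 80/10/10 split and class weighting can be sketched as below. The source does not say which weighting scheme it uses; median frequency balancing is swapped in here as one common choice, and the function names and pixel counts are assumptions for illustration.

```python
import numpy as np

def split_indices(n_items, rng, train=0.8, val=0.1):
    """Shuffle item indices and split them into train/validation/test."""
    order = rng.permutation(n_items)
    n_train = int(round(train * n_items))
    n_val = int(round(val * n_items))
    return (
        order[:n_train],
        order[n_train:n_train + n_val],
        order[n_train + n_val:],
    )

def median_frequency_weights(pixel_counts):
    """Weight each class by median(frequency) / frequency.

    Rare classes get weights above 1, dominant classes below 1.
    """
    counts = np.asarray(pixel_counts, dtype=float)
    freq = counts / counts.sum()
    return np.median(freq) / freq

train_idx, val_idx, test_idx = split_indices(1000, np.random.default_rng(0))

# Toy pixel counts (assumption), ordered as LTE, NR, Noise.
weights = median_frequency_weights([3840, 3200, 9344])
print(len(train_idx), len(val_idx), len(test_idx), weights)
```

These weights would typically be passed to the pixel classification loss so that errors on under-represented classes cost more.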

Performance Metrics Overview

The network’s performance is evaluated through several key metrics, including:

- Global Accuracy: The overall proportion of correctly classified pixels, which was 91.802% on synthetic datasets.

- Mean Intersection over Union (IoU): The IoU averaged over all classes, a central metric for semantic segmentation. It reached 63.619%, which leaves room for improvement.

- Weighted IoU: The IoU averaged with each class weighted by its number of pixels, which accounts for class imbalance. It was higher, at 84.845%.

- Boundary F1 Score (BF): A measure of how closely predicted region boundaries align with the true boundaries. It was 73.257%.

In evaluations on captured data, the metrics improved markedly: global accuracy reached 97.702% and mean IoU climbed to 90.162%, which suggests the model handles varied real-world conditions well.
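To show how global accuracy, mean IoU, and weighted IoU relate, the sketch below computes all three from a confusion matrix. The function name and the toy matrix are assumptions; the numbers are not the results reported above. The boundary F1 score is omitted because it needs the predicted and true boundary pixels rather than a confusion matrix.

```python
import numpy as np

def segmentation_metrics(conf):
    """Compute metrics from a confusion matrix.

    conf[i, j] = number of pixels with true class i predicted as class j.
    Assumes every class appears at least once, so no division by zero.
    """
    conf = np.asarray(conf, dtype=float)
    tp = np.diag(conf)
    true_per_class = conf.sum(axis=1)
    pred_per_class = conf.sum(axis=0)
    # IoU = intersection / union for each class.
    iou = tp / (true_per_class + pred_per_class - tp)
    return {
        "global_accuracy": tp.sum() / conf.sum(),
        "mean_iou": iou.mean(),
        "weighted_iou": (iou * true_per_class / conf.sum()).sum(),
    }

# Toy confusion matrix (assumption), classes ordered LTE, NR, Noise.
toy_conf = [
    [8, 2, 0],
    [2, 6, 2],
    [0, 0, 80],
]
metrics = segmentation_metrics(toy_conf)
print(metrics)
```

In this toy case the dominant Noise class is segmented well, so the weighted IoU is higher than the mean IoU, the same ordering seen in the synthetic-data results.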

Advanced Network Architectures

Various network architectures were assessed, including foundational models such as ResNet-18, deeper options like ResNet-50, and lightweight designs like MobileNetV2. Each of these models brought unique strengths:

- ResNet-18: Offers a straightforward yet effective approach with 18 layers connected by residual links to tackle the vanishing gradient problem.

- ResNet-50: A deeper residual network with 50 layers built from bottleneck blocks. Its strong image classification performance makes it a common backbone for segmentation models.

- MobileNetV2: Prioritizes computational efficiency, particularly beneficial for mobile and resource-constrained environments.
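The residual links mentioned for the ResNet models can be illustrated with a minimal sketch. It uses dense matrix products instead of the convolutions and batch normalization found in real ResNets, and the function names and shapes are assumptions for the example.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def residual_block(x, w1, w2):
    """Compute relu(x + F(x)), where F is a two-layer transform.

    The "+ x" term is the shortcut: the input bypasses F, so the block
    can pass its input through even when F contributes nothing.
    """
    out = relu(x @ w1)
    out = out @ w2
    return relu(out + x)

x = np.array([[1.0, 2.0, 3.0]])
zeros = np.zeros((3, 3))
# With all-zero weights F(x) = 0, and the block reduces to the identity
# for non-negative inputs.
y = residual_block(x, zeros, zeros)
print(y)
```

The same shortcut gives gradients a direct path backward through the network, which is why residual links help with vanishing gradients in deep models.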

Another noteworthy architecture is EfficientNet, which scales network depth, width, and input resolution together using a single compound coefficient and is built from mobile inverted bottleneck (MBConv) blocks.
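EfficientNet's uniform scaling can be sketched as follows. The base coefficients (1.2, 1.1, 1.15) come from the published EfficientNet paper rather than from this article, and the function names are assumptions. Released model variants may not match these raw multipliers exactly.

```python
# Base coefficients reported in the EfficientNet paper (not in this article).
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width, resolution

def compound_scale(phi):
    """Return depth, width, and resolution multipliers for coefficient phi."""
    return {
        "depth": ALPHA ** phi,
        "width": BETA ** phi,
        "resolution": GAMMA ** phi,
    }

def flops_multiplier(phi):
    """Approximate growth in compute: depth * width^2 * resolution^2."""
    s = compound_scale(phi)
    return s["depth"] * s["width"] ** 2 * s["resolution"] ** 2

for phi in range(4):
    print(phi, compound_scale(phi), round(flops_multiplier(phi), 3))
```

The coefficients are chosen so that each unit increase in phi roughly doubles the compute, since 1.2 × 1.1² × 1.15² ≈ 1.92.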

Conclusion

Together, the synthetic and real-world datasets, the training methods, and the evaluated network architectures form an effective framework for radio spectrum sensing in complex communication environments. Understanding the strengths of each component helps researchers improve spectrum sensing for future wireless communication technologies.