Datasets in ASL Recognition: An Insight into ASL Dataset and Sign-Language MNIST

Understanding the Importance of Datasets in ASL Recognition

In machine learning and computer vision, the quality and structure of datasets play a pivotal role in shaping what models can do. This is particularly true for American Sign Language (ASL) recognition, where intricate gestures need to be accurately interpreted to foster effective communication within the deaf and hard-of-hearing community. The introduction of specialized datasets such as the American Sign Language Recognition Dataset and the Sign Language MNIST dataset marks a significant advancement in this area.

ASL Dataset: A Cornerstone for Research and Innovation

The American Sign Language Recognition Dataset, curated by Rahul Makwana and available on Kaggle, serves as a fundamental resource in the exploration of visual-gestural languages. The dataset covers hand and arm movements that define ASL, providing a foundation for developing machine learning algorithms that can interpret these gestures.

Structure of the Dataset

The ASL dataset is structured to facilitate both training and evaluation. With 56,000 training images and 8,000 test images (an 87.5%/12.5% split), it is large enough to cover diverse linguistic expressions within ASL. Each gesture or letter is carefully labeled, establishing a strong base for supervised learning approaches.

This separation into labeled training and test sets not only supports accurate predictions but also makes it possible to check that models generalize to unseen examples of ASL gestures. Importantly, the dataset includes hand signs for letters, digits, and punctuation marks, covering a wide array of communicative possibilities.

Sign-Language MNIST Dataset: A Benchmark Challenge

While the ASL dataset provides extensive avenues for research, the Sign-Language MNIST dataset establishes a challenging benchmark for image-based machine learning methods focused on ASL hand gestures. As a variation of the original MNIST dataset, it is designed to be accessible for developing assistive technologies aimed at the deaf and hard-of-hearing community.

The goal of these datasets extends beyond mere data collection; they function as evaluative tools to champion innovative approaches in machine learning and enhance user communication experiences.
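For readers who want to inspect Sign-Language MNIST samples directly, here is a minimal sketch of parsing one row. It assumes the commonly distributed CSV layout (a `label` column followed by 784 pixel columns for a 28×28 grayscale image); that layout is not described in this article and should be checked against the downloaded files. The helper `parse_row` and the synthetic row are hypothetical.

```python
import csv
import io

IMAGE_SIDE = 28  # assumed image width and height in pixels


def parse_row(row):
    """Split one CSV row into an integer label and a 28x28 list of pixel rows."""
    label = int(row[0])
    pixels = [int(value) for value in row[1:]]
    expected = IMAGE_SIDE * IMAGE_SIDE
    if len(pixels) != expected:
        raise ValueError(f"expected {expected} pixels, got {len(pixels)}")
    # Reshape the flat pixel list into IMAGE_SIDE rows of IMAGE_SIDE values.
    image = [pixels[r * IMAGE_SIDE:(r + 1) * IMAGE_SIDE] for r in range(IMAGE_SIDE)]
    return label, image


# Synthetic in-memory stand-in for a real CSV file (assumed layout).
buffer = io.StringIO()
writer = csv.writer(buffer)
writer.writerow(["label"] + [f"pixel{i}" for i in range(1, 785)])
writer.writerow(["3"] + [str(i % 256) for i in range(784)])
buffer.seek(0)

reader = csv.reader(buffer)
next(reader)  # skip the header row
label, image = parse_row(next(reader))
```

With a real download, the `io.StringIO` buffer would be replaced by an opened file handle; everything else stays the same as long as the assumed layout holds.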

Evaluation Metrics for Model Performance

When assessing the effectiveness of ASL recognition models, several key evaluation metrics warrant consideration:

- Accuracy: Represents the rate of correct predictions made by the model.

  \[
  \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}
  \]

  where \(TP\) is true positives, \(TN\) is true negatives, \(FP\) is false positives, and \(FN\) is false negatives.

- Sensitivity (Recall): Measures a model’s ability to correctly identify positive cases in ASL gestures.

  \[
  \text{Sensitivity} = \frac{TP}{TP + FN}
  \]

- Specificity: Assesses correctly identified negative cases and accounts for false positives.

  \[
  \text{Specificity} = \frac{TN}{TN + FP}
  \]

- Precision: Focuses on the ratio of true positives to all positive predictions made by the model.

  \[
  \text{Precision} = \frac{TP}{TP + FP}
  \]

These metrics collectively provide a well-rounded view of how effectively a model can recognize gestures in ASL and help to identify areas for improvement.
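As a minimal sketch of how the four metrics above might be computed for a multi-class gesture classifier, the following Python treats one class as positive and all others as negative (one-vs-rest), then derives TP, TN, FP, and FN from paired label lists. The function name `one_vs_rest_metrics` and the toy labels are illustrative assumptions, not part of either dataset or of any published evaluation code.

```python
def one_vs_rest_metrics(y_true, y_pred, positive):
    """Compute accuracy, sensitivity, specificity, and precision for one class.

    `positive` is treated as the positive class; every other label
    counts as negative (one-vs-rest).
    """
    tp = tn = fp = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == positive and pred == positive:
            tp += 1
        elif truth != positive and pred != positive:
            tn += 1
        elif truth != positive and pred == positive:
            fp += 1
        else:
            fn += 1

    def safe_div(numerator, denominator):
        # Return 0.0 rather than raising when a class never occurs.
        return numerator / denominator if denominator else 0.0

    return {
        "accuracy": safe_div(tp + tn, tp + tn + fp + fn),
        "sensitivity": safe_div(tp, tp + fn),
        "specificity": safe_div(tn, tn + fp),
        "precision": safe_div(tp, tp + fp),
    }


# Toy labels for three hypothetical letter classes.
y_true = ["A", "A", "B", "B", "C", "C", "A", "B"]
y_pred = ["A", "B", "B", "B", "C", "A", "A", "C"]
metrics_a = one_vs_rest_metrics(y_true, y_pred, positive="A")
```

Repeating the call for each class and averaging the results gives macro-averaged scores, which is one common way to summarize performance across many gesture classes.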

Experimental Results & Insights

Statistical Analysis for ASL Dataset

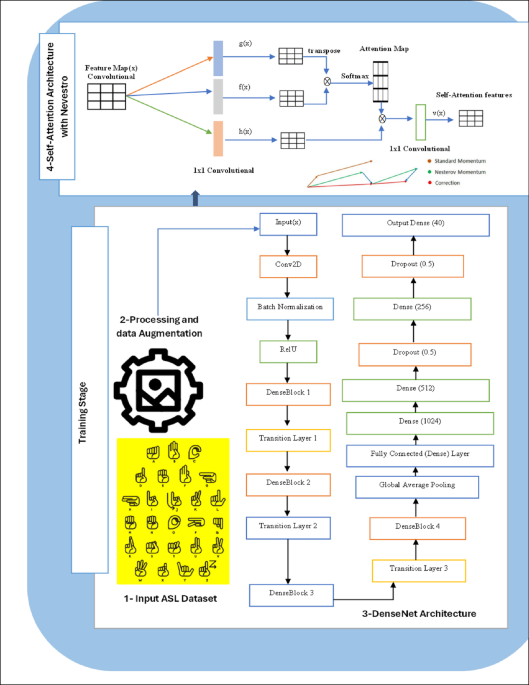

The performance of the proposed Sign Nevestro Densenet Attention (SNDA) model is rigorously examined through various statistical analyses. Visualization techniques, such as Kernel Density Estimation (KDE) plots and violin plots, enhance the understanding of metric distributions, showcasing the model’s accuracy and loss characteristics during the training process.

The learning curves provide further insight into how well the model learns over time, reflecting the training dynamics and highlighting potential signs of overfitting or underfitting.
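One simple way to read learning curves for overfitting is to find the epoch with the lowest validation loss and check whether later epochs keep lowering training loss without improving on it. The sketch below does this on made-up loss values; the function name, the `patience` parameter, and the numbers are assumptions for illustration and are not taken from the SNDA experiments.

```python
def find_overfitting_onset(train_loss, val_loss, patience=2):
    """Return the epoch index with the lowest validation loss once `patience`
    later epochs have failed to beat it while training loss was below its
    value at that epoch. Return None if that never happens."""
    best_epoch = 0
    stale = 0
    for epoch in range(1, len(val_loss)):
        if val_loss[epoch] < val_loss[best_epoch]:
            # Validation loss improved: reset the counter.
            best_epoch = epoch
            stale = 0
        elif train_loss[epoch] < train_loss[best_epoch]:
            # Training loss is lower but validation loss is not.
            stale += 1
            if stale >= patience:
                return best_epoch
    return None


# Hypothetical per-epoch losses.
train = [1.20, 0.80, 0.55, 0.40, 0.30, 0.22, 0.15]
val = [1.25, 0.90, 0.70, 0.65, 0.68, 0.72, 0.80]

onset = find_overfitting_onset(train, val)
gaps = [v - t for t, v in zip(train, val)]  # generalization gap per epoch
```

A widening gap after the returned epoch suggests overfitting; training and validation losses that both stay high suggest underfitting instead.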

Comparative Analysis with Established Models

The experimental results compare SNDA with established models such as InceptionV3 and ResNet on both the ASL and Sign-Language MNIST datasets. In these comparisons, statistical evaluation indicates that SNDA consistently outperforms the others in stability, accuracy, and generalization ability.

Insights into Sign-Language MNIST Dataset Performance

For Sign-Language MNIST, the experiments take a different approach: the model is trained with several optimizers, which shows not only overall model performance but also how efficient each optimizer choice is. The results point toward faster convergence for the Nadam optimizer than for the other optimizers tested, emphasizing the importance of effective hyperparameter tuning in achieving optimal model performance.

Figures detailing training accuracies and convergence behaviors show how each optimizer influences learning dynamics. In comparisons against baseline models, SNDA also demonstrates a strong capability to correctly classify gestures.
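To make the Nadam optimizer mentioned above more concrete, here is a minimal sketch of a simplified textbook form of its update rule (Adam with a Nesterov-style look-ahead on the momentum term), applied to a one-dimensional quadratic. It omits the momentum-decay schedule that some library implementations use, and the hyperparameter values and the function name `nadam_minimize` are assumptions for illustration; none of this is the training configuration used for SNDA.

```python
import math


def nadam_minimize(grad_fn, x0, lr=0.05, beta1=0.9, beta2=0.999,
                   eps=1e-8, steps=500):
    """Minimize a scalar function with a simplified Nadam update."""
    x = x0
    m = 0.0  # running estimate of the gradient mean (momentum)
    v = 0.0  # running estimate of the squared gradient
    for t in range(1, steps + 1):
        g = grad_fn(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        # Bias-correct both estimates, as in Adam.
        m_hat = m / (1 - beta1 ** t)
        v_hat = v / (1 - beta2 ** t)
        # Nesterov-style step: blend corrected momentum with the current gradient.
        look_ahead = beta1 * m_hat + (1 - beta1) * g / (1 - beta1 ** t)
        x -= lr * look_ahead / (math.sqrt(v_hat) + eps)
    return x


# Minimize f(x) = x**2, whose gradient is 2x, starting from x = 1.
x_final = nadam_minimize(lambda x: 2.0 * x, x0=1.0)
```

Dropping the look-ahead line and stepping with `m_hat` alone gives plain Adam, which makes it easy to compare convergence of the two on the same toy problem.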

Complexity, Inference, and Training Time

On computational complexity, the SNDA model, which uses a DenseNet121 backbone, comprises approximately 11 million parameters. This parameter count reflects the architecture’s efficient feature propagation while still supporting high accuracy in recognizing ASL gestures.
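To put the parameter count in deployment terms, weight storage can be estimated with simple arithmetic, as in the sketch below. The 11 million figure comes from the text above; the bytes-per-parameter values (4 for float32, 2 for float16, 1 for int8) are standard storage sizes, and treating every parameter as stored at one precision is a simplifying assumption.

```python
def weight_storage_mb(num_params, bytes_per_param):
    """Approximate weight storage in megabytes (1 MB = 10**6 bytes)."""
    return num_params * bytes_per_param / 1e6


SNDA_PARAMS = 11_000_000  # approximate count reported in the text

# Estimated storage at three common numeric precisions.
storage = {
    name: weight_storage_mb(SNDA_PARAMS, size)
    for name, size in {"float32": 4, "float16": 2, "int8": 1}.items()
}
```

This counts weights only; activations, optimizer state, and framework overhead add to the memory actually needed at training or inference time.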

Inference time, in turn, bears directly on practical deployment. Because real-time ASL recognition is essential for effective communication, the measured inference durations support the model’s practicality across varied computational environments, including GPUs and edge devices.
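A minimal sketch of how per-image inference latency might be measured is shown below. The `dummy_predict` function is a hypothetical stand-in for a real model's prediction call, and the warm-up and run counts are arbitrary assumptions; the sketch only illustrates the measurement pattern, not the benchmarking procedure used for SNDA.

```python
import statistics
import time


def time_inference(predict, sample, warmup=3, runs=20):
    """Return (median, worst) latency in milliseconds for predict(sample)."""
    # Warm-up calls are excluded so one-time setup cost does not skew results.
    for _ in range(warmup):
        predict(sample)
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        predict(sample)
        latencies.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(latencies), max(latencies)


def dummy_predict(image):
    # Hypothetical placeholder for a model forward pass: sums all pixel values.
    return sum(sum(row) for row in image)


sample_image = [[0] * 28 for _ in range(28)]
median_ms, worst_ms = time_inference(dummy_predict, sample_image)
```

Reporting the median alongside the worst case is useful for real-time use, since occasional slow frames matter as much as typical speed.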

Implications of the Findings

The overarching implications of the findings underscore the transformative potential of machine learning in enhancing communication between the Deaf and hard-of-hearing community and the broader public. The statistical backing for significant improvements in accuracy and efficiency positively contributes to bridging gaps in communication that have long affected this community.

Overall, the datasets discussed, combined with innovative model architectures like SNDA, create a robust framework to advance ASL recognition technologies, heralding a new era of inclusivity and effective communication assistance.

The examination of datasets in ASL recognition focuses not only on their structure and performance but also on the broader impacts they entail in promoting inclusivity and enhancing communication for the Deaf and hard-of-hearing communities.