Harnessing Multimodal Transformers for Concreteness Ratings

We present an approach for automatically generating concreteness ratings. A concreteness rating estimates how strongly a word or expression refers to something that can be directly perceived. Our approach combines a multimodal transformer with an emotion-aware language model. Such ratings support linguistic research and are useful in education, cognitive science, and natural language processing. The tool is freely available at concreteness.eu.

Methodology Overview

Our methodology is built upon four primary components:

- Dual-Embedding Model Architecture: This design fuses visual-linguistic and emotional information into a single representation of each input.

- Training Procedure: We train on large-scale, human-annotated concreteness ratings.

- Evaluation Metrics: We compare predictions with human ratings using correlation and error metrics.

- General Prediction System: This mechanism generates concreteness ratings for single words and multi-word expressions in various languages.

System Overview

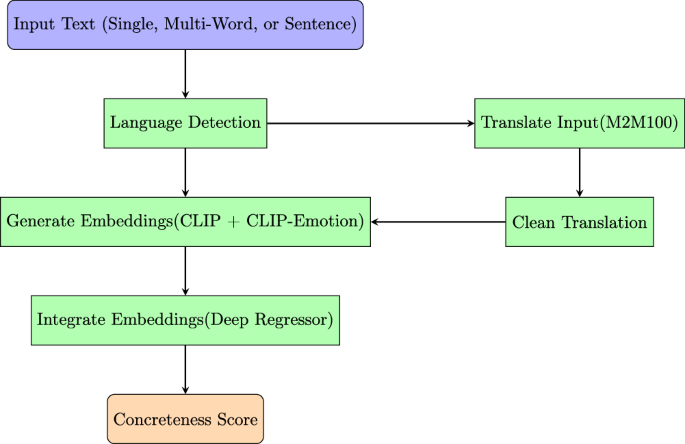

Figure 1: System Architecture for Generating Concreteness Ratings

Our approach starts with input text, which may be a single word, a multi-word expression, or a complete sentence. The input first passes through language detection. Non-English text is then translated. The models compute embeddings for the preprocessed input, and a regressor maps these embeddings to the final concreteness score. If the input is a sentence, the system can produce a concreteness rating for each individual word.
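The flow in Figure 1 can be sketched as a single function. This is a minimal illustration under stated assumptions, not the system's code. `detect_language`, `translate`, and `score` are hypothetical callables that stand in for the language detector, the translator, and the trained models. We also use our own simplified rule: inputs longer than two tokens are treated as sentences.

```python
from typing import Callable, List, Tuple

def rate_input(
    text: str,
    detect_language: Callable[[str], str],   # hypothetical: returns e.g. "en", "fr"
    translate: Callable[[str, str], str],    # hypothetical: (text, source_lang) -> English text
    score: Callable[[str], float],           # hypothetical: word or expression -> rating
    max_expression_len: int = 2,             # assumption: longer inputs are sentences
) -> List[Tuple[str, float]]:
    """Return (item, concreteness) pairs for a word, expression, or sentence."""
    lang = detect_language(text)
    if lang != "en":
        # Non-English input is translated before rating.
        text = translate(text, lang)
    tokens = text.split()
    if len(tokens) <= max_expression_len:
        # Single word or multi-word expression: one rating for the whole input.
        expression = " ".join(tokens)
        return [(expression, score(expression))]
    # Sentence: strip surrounding punctuation and rate every word separately.
    words = [t.strip(".,;:!?\"'()") for t in tokens]
    return [(w, score(w)) for w in words if w]
```

With a dummy scorer, `rate_input("The dog barked.", ...)` returns one pair per word. `rate_input("ice cream", ...)` returns a single pair for the whole expression.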

Model Architecture Breakdown

Dual-Embedding Approach

At the core of the architecture, a multimodal transformer and an emotion-aware model are combined through a dual-embedding approach. The architecture consists of three main components:

- Base Visual-Language Model: We use the Contrastive Language-Image Pre-training (CLIP) model, which aligns text and images in a shared embedding space. We use its ViT-B/32 variant. In this variant, the transformer-based text encoder maps words into the same 512-dimensional space as images, so textual input is represented in a visually grounded way.

- Emotion-Aware Language Model: We fine-tune CLIP on the Affection dataset, which contains over 85,000 images annotated with emotional reactions. The resulting model captures the emotional associations of both images and words.

- Deep Regressor: A feed-forward neural network takes the merged embeddings from the base and emotion-aware models and predicts a word's concreteness. Its multiple hidden layers let it model non-linear relationships between the embeddings and the ratings.
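A forward pass through such a regressor can be sketched in plain Python. We chose the layer sizes (1024, 256, 64, 1), the ReLU activations, the initialization, and the class and method names for illustration only. The text specifies only that the network has multiple hidden layers and takes the merged embeddings of both models. In the PyTorch implementation mentioned under Implementation Details, the same structure would typically be a stack of `torch.nn.Linear` layers.

```python
import math
import random
from typing import List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights[out][in], biases[out])

def init_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    # He-style uniform initialization (an assumption; suits ReLU layers).
    bound = math.sqrt(6.0 / n_in)
    weights = [[rng.uniform(-bound, bound) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def dense(x: Sequence[float], layer: Layer) -> List[float]:
    # Fully connected layer: weights @ x + biases.
    weights, biases = layer
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]

class DeepRegressor:
    """Hypothetical MLP: concatenated 1024-d input -> hidden layers -> one score."""

    def __init__(self, sizes: Sequence[int] = (1024, 256, 64, 1), seed: int = 0):
        rng = random.Random(seed)
        self.in_dim = sizes[0]
        self.layers = [init_layer(a, b, rng) for a, b in zip(sizes[:-1], sizes[1:])]

    def predict(self, base_emb: Sequence[float], emotion_emb: Sequence[float]) -> float:
        # Merge the two model embeddings by concatenation.
        x = list(base_emb) + list(emotion_emb)
        if len(x) != self.in_dim:
            raise ValueError(f"expected {self.in_dim} features, got {len(x)}")
        for i, layer in enumerate(self.layers):
            x = dense(x, layer)
            if i < len(self.layers) - 1:  # ReLU on hidden layers only
                x = [max(0.0, v) for v in x]
        return x[0]
```

The weights here are random. A trained model would learn them from the human ratings, so the untrained output is not yet on the rating scale.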

Detailed Training Procedure

For training, we relied on the concreteness ratings dataset of Brysbaert et al. It provides human ratings on a scale from 1 (abstract) to 5 (concrete) for over 37,000 words and nearly 3,000 two-word expressions. The training pipeline had three steps:

- Data cleaning.
- Stratified sampling, so that the data splits preserve the distribution of ratings.
- Embedding generation with both models.
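One way to stratify on a continuous rating is to bin the ratings and sample each bin in proportion. The sketch below assumes equal-width bins on the 1 to 5 scale. The number of bins, the test fraction, and the function name are hypothetical choices, because the text does not give them.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Item = Tuple[str, float]  # (word, human rating on the 1-5 scale)

def stratified_split(items: Sequence[Item], test_fraction: float = 0.2, n_bins: int = 4,
                     lo: float = 1.0, hi: float = 5.0,
                     seed: int = 42) -> Tuple[List[Item], List[Item]]:
    """Split items so each rating bin is represented proportionally in both sets."""
    width = (hi - lo) / n_bins
    strata: Dict[int, List[Item]] = defaultdict(list)
    for word, rating in items:
        # Clamp so the maximum rating falls into the last bin.
        idx = min(int((rating - lo) / width), n_bins - 1)
        strata[idx].append((word, rating))
    rng = random.Random(seed)
    train: List[Item] = []
    test: List[Item] = []
    for idx in sorted(strata):
        group = list(strata[idx])
        rng.shuffle(group)
        n_test = round(len(group) * test_fraction)
        test.extend(group[:n_test])
        train.extend(group[n_test:])
    return train, test
```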

Training Setup

Each model encodes a word as a 512-dimensional embedding. The two embeddings are concatenated into a 1024-dimensional vector, which is the input to the regressor. Regularization is applied during training to limit overfitting and make performance more robust.
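The 1024-dimensional input is the two 512-dimensional vectors joined end to end. The sketch below also L2-normalizes each embedding first. This is common practice for CLIP features, but it is our assumption and not a documented step of this system. `dual_embedding` is a hypothetical name.

```python
import math
from typing import List, Sequence

EMB_DIM = 512  # per-model embedding size stated in the text

def l2_normalize(vec: Sequence[float]) -> List[float]:
    # Scale to unit length; leave an all-zero vector unchanged.
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else list(vec)

def dual_embedding(base_emb: Sequence[float], emotion_emb: Sequence[float],
                   normalize: bool = True) -> List[float]:
    """Concatenate base-CLIP and emotion-CLIP embeddings into one 1024-d vector."""
    if len(base_emb) != EMB_DIM or len(emotion_emb) != EMB_DIM:
        raise ValueError(f"each embedding must have {EMB_DIM} dimensions")
    if normalize:  # assumption: unit-length features before concatenation
        base_emb, emotion_emb = l2_normalize(base_emb), l2_normalize(emotion_emb)
    return list(base_emb) + list(emotion_emb)
```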

We chose hyperparameters carefully. Training uses the Adam optimizer with a tuned learning rate, together with early stopping, which halts training once validation performance stops improving.
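Early stopping with a patience window can be sketched as a small helper that watches the validation loss. The patience value, the `min_delta` threshold, and the class name are hypothetical. The Adam update itself would come from the training framework, for example `torch.optim.Adam` in PyTorch.

```python
import math

class EarlyStopping:
    """Signal a stop after `patience` epochs without validation improvement."""

    def __init__(self, patience: int = 5, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta  # minimum decrease that counts as improvement
        self.best = math.inf
        self.bad_epochs = 0

    def step(self, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience
```

In a training loop, call `stopper.step(val_loss)` after each epoch and stop when it returns `True`. Typically you would also keep the weights from the epoch with the best loss.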

Rigorous Evaluation Metrics

To validate our model’s performance, we employed several metrics:

- Pearson Correlation Coefficient: Measures the strength of the linear relationship between predicted scores and human ratings.

- Coefficient of Determination (R²): Indicates the proportion of variance in the human concreteness ratings that the model's predictions explain.

- Mean Absolute Error (MAE) and Root Mean Square Error (RMSE): Both quantify prediction error. MAE reports the average absolute error, while RMSE weights larger errors more heavily.

Together, these metrics gave a systematic picture of the model's performance and guided refinements that improved prediction accuracy.
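All four metrics can be computed directly from paired human ratings and predictions, as in the plain-Python sketch below. It computes R² as 1 - SS_res / SS_tot on the raw predictions. This value can differ from the squared Pearson correlation, and the text does not say which definition was used.

```python
import math
from typing import Dict, Sequence

def regression_metrics(y_true: Sequence[float], y_pred: Sequence[float]) -> Dict[str, float]:
    """Pearson r, R², MAE and RMSE for predicted vs. human concreteness ratings."""
    n = len(y_true)
    if n != len(y_pred) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    mean_t = sum(y_true) / n
    mean_p = sum(y_pred) / n
    cov = sum((t - mean_t) * (p - mean_p) for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)             # total sum of squares
    ss_pred = sum((p - mean_p) ** 2 for p in y_pred)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # residual sum of squares
    return {
        "pearson": cov / math.sqrt(ss_tot * ss_pred),
        "r2": 1.0 - ss_res / ss_tot,
        "mae": sum(abs(t - p) for t, p in zip(y_true, y_pred)) / n,
        "rmse": math.sqrt(ss_res / n),  # squaring weights large errors more
    }
```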

Building a General Prediction System

The general prediction system handles both single words and multi-word expressions. It combines two models trained on separate datasets, one for single words and one for multi-word expressions. This lets it process a diverse range of linguistic inputs.

Routing Mechanism

A routing mechanism detects whether an input is a single word or a multi-word expression and sends it to the corresponding model. It also accommodates compound words and makes efficient use of GPU resources.
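A router of this kind can be sketched as follows. The function names are our own, and so is the rule for compounds. We assume whitespace separates words. Under that rule, hyphenated compounds such as "ice-cream" go to the single-word model, and open compounds such as "ice cream" go to the multi-word model. Grouping inputs so that each model receives one batched call is one common way to use a GPU efficiently. The text does not specify how the system does this.

```python
from typing import Callable, List, Sequence

BatchModel = Callable[[List[str]], List[float]]  # hypothetical: batch of texts -> scores

def is_multiword(expression: str) -> bool:
    # Assumption: whitespace separates words; "ice-cream" counts as one word.
    return len(expression.split()) > 1

def route_batch(expressions: Sequence[str], word_model: BatchModel,
                mwe_model: BatchModel) -> List[float]:
    """Send each input to the single-word or multi-word model, preserving order."""
    cleaned = [" ".join(e.split()) for e in expressions]  # collapse extra spaces
    results: List[float] = [0.0] * len(cleaned)
    for model, wants_mwe in ((word_model, False), (mwe_model, True)):
        idx = [i for i, e in enumerate(cleaned) if is_multiword(e) == wants_mwe]
        if idx:
            # One batched call per model instead of one call per input.
            for i, score in zip(idx, model([cleaned[i] for i in idx])):
                results[i] = score
    return results
```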

Extension to Non-English Inputs

To handle languages other than English, we added a cross-lingual component built on a multi-stage translation process. A language-detection module first identifies the input language. The text is then translated with the M2M100 transformer model, which translates directly between language pairs instead of pivoting through English. This makes the approach adaptable to a wide range of languages.

The translation pipeline includes preprocessing and normalization steps, so translated text has a consistent form before it is rated. This keeps the system scalable across languages while maintaining accuracy.
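A post-translation normalization step might look like the sketch below. The specific steps are our assumptions about what "consistent" output means, not the documented pipeline: Unicode NFKC normalization, lowercasing, trimming surrounding punctuation, and collapsing whitespace. `normalize_translation` is a hypothetical name.

```python
import string
import unicodedata

# ASCII punctuation plus common non-English quote and inverted marks.
EDGE_PUNCTUATION = string.punctuation + "«»“”‘’¿¡"

def normalize_translation(text: str) -> str:
    """Bring translated text into a consistent form before rating (hypothetical steps)."""
    text = unicodedata.normalize("NFKC", text)  # e.g. the ligature "ﬁ" becomes "fi"
    text = text.lower()                          # assumption: ratings are keyed in lowercase
    text = text.strip().strip(EDGE_PUNCTUATION)  # drop surrounding spaces and punctuation
    return " ".join(text.split())                # collapse internal whitespace
```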

Efficient Batch Processing and Error Management

To process large datasets within memory limits, we use memory-management techniques such as gradient accumulation and dynamic batch-size adjustment.
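Dynamic batch-size adjustment can be sketched as halving the batch whenever it does not fit in memory, then retrying. Here `embed_batch` is a hypothetical callable, and Python's built-in `MemoryError` stands in for a GPU out-of-memory error. With PyTorch on CUDA, you would instead pass `torch.cuda.OutOfMemoryError` (available in recent PyTorch versions) through the `oom_errors` parameter. Gradient accumulation, the other technique mentioned, is not shown.

```python
from typing import Callable, List, Sequence, Tuple, Type, TypeVar

T = TypeVar("T")
R = TypeVar("R")

def embed_with_backoff(
    items: Sequence[T],
    embed_batch: Callable[[Sequence[T]], List[R]],  # hypothetical batch embedder
    batch_size: int = 256,
    min_batch_size: int = 1,
    oom_errors: Tuple[Type[BaseException], ...] = (MemoryError,),
) -> List[R]:
    """Embed items in batches, halving the batch size after an out-of-memory error."""
    results: List[R] = []
    start = 0
    while start < len(items):
        batch = items[start:start + batch_size]
        try:
            results.extend(embed_batch(batch))
        except oom_errors:
            if batch_size <= min_batch_size:
                raise  # cannot shrink further
            batch_size = max(min_batch_size, batch_size // 2)
            continue  # retry the same items with a smaller batch
        start += len(batch)
    return results
```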

The system also handles unexpected inputs and processing errors gracefully. Fallback mechanisms keep processing running when an item fails, and errors are logged to guide future improvements. Together, these make the system reliable and robust in operation.
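A per-item fallback with logging could look like the sketch below. The function name, the choice of NaN as the fallback value, and the logger name are hypothetical. The sketch deliberately catches broad exceptions so that one bad input does not stop a batch. The trade-off is that failures must be diagnosed from the logs.

```python
import logging
import math
from typing import Callable, Iterable, List

logger = logging.getLogger("concreteness")  # hypothetical logger name

def predict_with_fallback(inputs: Iterable[object], predict: Callable[[str], float],
                          fallback: float = math.nan) -> List[float]:
    """Rate each input, substituting `fallback` and logging when rating fails."""
    results: List[float] = []
    for text in inputs:
        try:
            if not isinstance(text, str) or not text.strip():
                raise ValueError("input must be a non-empty string")
            results.append(float(predict(text)))
        except Exception:
            # Log the full traceback for later analysis, then keep going.
            logger.exception("Rating failed for %r; using fallback %s", text, fallback)
            results.append(fallback)
    return results
```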

Implementation Details

All models are implemented in PyTorch and trained with NVIDIA GPU acceleration. Training follows standard practices for reproducibility and efficiency.

The codebase is available on GitHub for researchers who want to explore or extend this work. It includes data preprocessing scripts, model definitions, and training routines.

By combining visual-linguistic and emotional information, this approach to generating concreteness ratings advances how language and its emotional context can be modeled. It also opens new avenues for research and practical applications.