Understanding the Development of a Deep Learning Model for Diagnosing Dysphagia: A Retrospective Study

Institutional Approval and Ethical Considerations

In a recent study approved by the Institutional Review Board (IRB) of Yeungnam University Hospital, researchers set out to improve the diagnosis and management of patients with swallowing difficulties. This retrospective study was conducted in accordance with the ethical guidelines of the Declaration of Helsinki. Because of the study's retrospective design, the IRB waived the requirement for informed consent, which streamlined data collection and analysis.

Patient Selection and Criteria

The study included all videofluoroscopic swallowing study (VFSS) recordings from patients who were diagnosed with or monitored for dysphagia between March 2019 and July 2022. Patients were excluded if they were younger than 20 years, had a tracheostomy, had facial or cranial anomalies, or had metal plates within the VFSS field of view. These exclusions were intended to produce a focused dataset representative of the adult dysphagia population.
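The exclusion criteria above amount to a simple eligibility filter. The sketch below is illustrative only: it assumes a hypothetical `VFSSRecord` layout (the field names are not from the study) and reads "between March 2019 and July 2022" as inclusive of both months.

```python
from dataclasses import dataclass
from datetime import date


# Hypothetical record layout; field names are illustrative, not taken from the study.
@dataclass
class VFSSRecord:
    patient_id: str
    study_date: date
    age: int
    has_tracheostomy: bool
    has_craniofacial_anomaly: bool
    metal_plate_in_field: bool


# Assumed inclusive study window: 1 March 2019 to 31 July 2022.
STUDY_START = date(2019, 3, 1)
STUDY_END = date(2022, 7, 31)


def is_eligible(record: VFSSRecord) -> bool:
    """Apply the study window and the four exclusion criteria to one VFSS record."""
    return (
        STUDY_START <= record.study_date <= STUDY_END
        and record.age >= 20                     # exclude patients under 20
        and not record.has_tracheostomy          # exclude tracheostomy
        and not record.has_craniofacial_anomaly  # exclude facial/cranial anomalies
        and not record.metal_plate_in_field      # exclude metal plates in the field
    )


records = [
    VFSSRecord("p01", date(2020, 5, 4), 67, False, False, False),
    VFSSRecord("p02", date(2021, 1, 9), 18, False, False, False),  # too young
    VFSSRecord("p03", date(2022, 8, 2), 72, False, False, False),  # outside the window
]
cohort = [r for r in records if is_eligible(r)]
```

Only `p01` survives the filter in this toy example.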

The Videofluoroscopic Swallowing Study (VFSS)

VFSS, a key procedure for evaluating swallowing difficulties, was performed with patients seated upright to ensure optimal imaging conditions. The contrast medium was Bonorex 300 injection, and images were recorded at 30 frames per second. Although a VFSS typically involves several food-contrast mixtures, the analysis used only the first 3 mL liquid swallow, to avoid interference from contrast left over from earlier swallows.

Development of the Deep Learning Model

The centerpiece of this study was a deep learning model for automated dysphagia diagnosis, developed in Python 3.10.15 with PyTorch 2.14.1. A custom Python script converted the original MPG video files into individual frames, sampled at 10 frames per second.
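Converting a 30 fps recording to 10 fps amounts to keeping roughly every third frame. A minimal sketch, assuming OpenCV (`cv2`) is used for decoding; the study's own script is not described, and the function names and PNG file naming here are hypothetical. The sampling logic is kept separate from decoding so it can be checked without a video file.

```python
import os
from typing import Iterable, Iterator, Tuple, TypeVar

T = TypeVar("T")


def select_frames(frames: Iterable[T], src_fps: float, target_fps: float) -> Iterator[Tuple[int, T]]:
    """Yield (index, frame) pairs that downsample a src_fps stream to about target_fps."""
    if src_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    step = max(src_fps / target_fps, 1.0)  # keep every frame if target >= source
    k = 0  # number of frames kept so far
    for i, frame in enumerate(frames):
        # Keep the source frame nearest to the k-th target timestamp (k / target_fps seconds).
        if i == round(k * step):
            yield i, frame
            k += 1


def extract_frames(video_path: str, out_dir: str, target_fps: float = 10.0) -> int:
    """Decode a video (e.g. an MPG file) with OpenCV and save the sampled frames as PNGs."""
    import cv2  # imported lazily so select_frames works without OpenCV installed

    cap = cv2.VideoCapture(video_path)
    if not cap.isOpened():
        raise IOError(f"cannot open {video_path}")
    # Fall back to the 30 fps acquisition rate if the container lacks FPS metadata.
    src_fps = cap.get(cv2.CAP_PROP_FPS) or 30.0

    def decoded_frames():
        while True:
            ok, frame = cap.read()
            if not ok:
                return
            yield frame

    os.makedirs(out_dir, exist_ok=True)
    saved = 0
    try:
        for i, frame in select_frames(decoded_frames(), src_fps, target_fps):
            cv2.imwrite(os.path.join(out_dir, f"frame_{i:05d}.png"), frame)
            saved += 1
    finally:
        cap.release()
    return saved
```

For example, `extract_frames("swallow_001.mpg", "frames/swallow_001")` (hypothetical paths) would write about one PNG per 100 ms of video. Decoding frames sequentially, rather than seeking, avoids relying on frame-count metadata, which MPG containers do not always report accurately.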

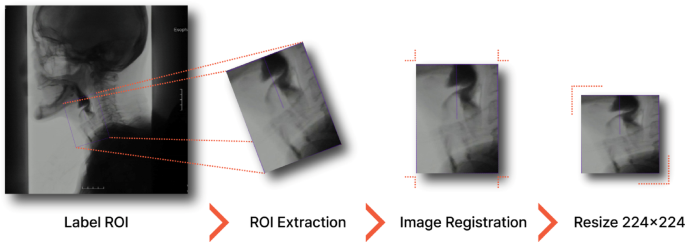

The ReDet object detection model was used to extract regions of interest (ROIs) from these frames. ReDet is rotation-equivariant, so it can localize anatomical structures consistently even when patient positioning varies. Extracting consistent ROIs in this way preserves the key spatiotemporal information in each VFSS sequence, which the authors credit with improving the reliability and precision of the subsequent assessments.
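ReDet predicts oriented bounding boxes, so cropping an ROI has to account for the box angle. A minimal NumPy sketch, assuming each detection is given as `(cx, cy, w, h, theta)` in pixels with `theta` in radians; angle conventions differ between ReDet implementations, so this format is an assumption, and the function names are hypothetical. It crops the axis-aligned rectangle that encloses the rotated box.

```python
import numpy as np


def rotated_box_corners(cx, cy, w, h, theta):
    """Return the 4 corners (x, y) of a w x h box centred at (cx, cy), rotated by theta radians."""
    c, s = np.cos(theta), np.sin(theta)
    dx = np.array([-w, w, w, -w]) / 2.0
    dy = np.array([-h, -h, h, h]) / 2.0
    xs = cx + dx * c - dy * s
    ys = cy + dx * s + dy * c
    return np.stack([xs, ys], axis=1)


def crop_rotated_roi(image, box, margin=0):
    """Crop the axis-aligned rectangle enclosing a rotated box, clipped to the image bounds."""
    # Round away float noise such as cos(pi/2) != 0 before taking floor/ceil.
    corners = np.round(rotated_box_corners(*box), 6)
    height, width = image.shape[:2]
    x0 = max(int(np.floor(corners[:, 0].min())) - margin, 0)
    x1 = min(int(np.ceil(corners[:, 0].max())) + margin, width)
    y0 = max(int(np.floor(corners[:, 1].min())) - margin, 0)
    y1 = min(int(np.ceil(corners[:, 1].max())) + margin, height)
    return image[y0:y1, x0:x1]
```

An alternative that yields an upright crop is to rotate the frame about the box centre with OpenCV's `cv2.getRotationMatrix2D` and `cv2.warpAffine` before slicing; the enclosing-rectangle approach keeps a little surrounding context and needs only NumPy.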

Frame Selection and Peak Detection

To streamline training and keep only relevant frames, specific extraction criteria were applied: frames capturing the hyoid bone at its highest and lowest positions during the swallow were selected. This approach follows previous studies, but this research moved toward automating the selection of these peak frames. The researchers trained a classification model based on the EfficientNet-B0 CNN architecture to identify high-peak and low-peak frames, so that the frames passed to the diagnostic model capture the key phases of the swallow.

Annotation and Dataset Composition

Each extracted image was labeled by a physiatrist with more than 15 years of clinical experience in diagnosing dysphagia. Images were assigned to one of three classes: “normal” (no penetration or aspiration), “penetration” (contrast entering the airway but remaining above the vocal cords), and “aspiration” (contrast passing below the vocal cords). The classes were imbalanced: 63.9% of images were normal, while penetration and aspiration accounted for 21.4% and 14.7%, respectively. To address this imbalance, the researchers applied the Synthetic Minority Oversampling Technique (SMOTE), which generates synthetic samples for the minority classes to improve the model's performance on the less frequent conditions.
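SMOTE creates each synthetic sample by interpolating between a minority-class sample and one of its k nearest neighbours from the same class. A minimal NumPy sketch of that step; it assumes images have already been turned into fixed-length feature vectors (flattened pixels or CNN embeddings), which the study does not specify, and balancing to the majority-class count is also an assumption. In practice a library implementation such as imbalanced-learn's `SMOTE` would typically be used.

```python
import numpy as np


def smote(minority: np.ndarray, n_new: int, k: int = 5, seed=None) -> np.ndarray:
    """Generate n_new synthetic samples from one minority class by SMOTE interpolation.

    minority: (n, d) array of feature vectors belonging to a single class.
    """
    x = np.asarray(minority, dtype=float)
    n = len(x)
    if n < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    k = min(k, n - 1)
    rng = np.random.default_rng(seed)

    # Pairwise squared Euclidean distances within the minority class.
    diff = x[:, None, :] - x[None, :, :]
    d2 = np.einsum("ijk,ijk->ij", diff, diff)
    np.fill_diagonal(d2, np.inf)                # a sample is not its own neighbour
    neighbours = np.argsort(d2, axis=1)[:, :k]  # (n, k) nearest-neighbour indices

    base = rng.integers(0, n, size=n_new)                       # random starting samples
    partner = neighbours[base, rng.integers(0, k, size=n_new)]  # one random neighbour each
    gap = rng.random((n_new, 1))                                # interpolation factor in [0, 1)
    return x[base] + gap * (x[partner] - x[base])


def samples_needed(class_counts: dict) -> dict:
    """Synthetic samples per class needed to match the majority class (one possible target)."""
    target = max(class_counts.values())
    return {c: target - n for c, n in class_counts.items()}
```

With the reported distribution of 63.9%, 21.4%, and 14.7%, balancing every 1,000 images to the majority class would require 425 synthetic penetration and 492 synthetic aspiration samples, which is what `samples_needed` returns for counts of 639, 214, and 147. Whether the study balanced fully or only partially is not stated.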

Advanced CNN Architecture for Classification

For the core task of classifying penetration and aspiration events, the research team chose the ConvNeXt-Tiny CNN architecture. The model was trained with the AdamW optimizer, and dropout and batch normalization layers were used to improve generalization. The DeepSpeed library was integrated to accelerate training and allow larger batch sizes.

Rigorous Model Training and Evaluation

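To evaluate the model fairly, the researchers split the dataset at the patient level into training (80%) and validation (20%) sets, so that all images from a given patient fell into only one set. This prevents data leakage between the sets, so validation results are not inflated by images of patients the model has already seen, and overfitting shows up as a genuine drop in validation performance. Both micro-averaged and macro-averaged metrics were used: macro-averaging weights each class equally, so performance on the rarer penetration and aspiration classes is not masked by the majority normal class, while micro-averaging pools all predictions and reflects overall performance. Together they address the class imbalance that is common in medical image analysis.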
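A patient-level split assigns whole patients, not individual images, to each subset. A minimal pure-Python sketch with hypothetical names; note that it places 80% of patients (not necessarily 80% of images) in the training set. scikit-learn's `GroupShuffleSplit` provides the same grouping guarantee.

```python
import random


def patient_level_split(patient_ids, train_frac: float = 0.8, seed: int = 0):
    """Split image indices so that all images of a patient land in the same subset.

    patient_ids: sequence where patient_ids[i] is the patient of image i.
    Returns (train_indices, val_indices).
    """
    patients = sorted(set(patient_ids))  # sort first so the shuffle is reproducible
    random.Random(seed).shuffle(patients)
    n_train = round(train_frac * len(patients))
    train_patients = set(patients[:n_train])
    train = [i for i, pid in enumerate(patient_ids) if pid in train_patients]
    val = [i for i, pid in enumerate(patient_ids) if pid not in train_patients]
    return train, val
```

Because the split is drawn over patients, the image-level proportions can drift from 80/20 when patients contribute different numbers of images.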

Diagnostic Criteria and Statistical Analysis

The diagnostic criteria aggregated the image-level predictions for each swallow: aspiration was diagnosed when at least two images were classified as aspiration; penetration when one image was classified as aspiration and two as penetration; and normal when the counts of both were low. Model performance was assessed with several indicators, including accuracy, precision, and recall, to give a comprehensive picture of its diagnostic capability.
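The sketch below aggregates image-level predictions into a swallow-level label and computes accuracy plus micro- and macro-averaged precision and recall. It reads the penetration criterion literally, as at least one aspiration image and at least two penetration images; the "at least" reading and the thresholds are assumptions exposed as parameters, and if the intended rule is "one aspiration image or two penetration images" the `and` should become `or`. All names are hypothetical.

```python
from collections import Counter

CLASSES = ("normal", "penetration", "aspiration")


def study_label(image_preds, min_asp: int = 2, pen_asp: int = 1, pen_pen: int = 2) -> str:
    """Aggregate per-image predictions for one swallow into a swallow-level label."""
    counts = Counter(image_preds)
    n_asp, n_pen = counts["aspiration"], counts["penetration"]
    if n_asp >= min_asp:
        return "aspiration"
    # Literal reading of the criterion; swap `and` for `or` if the rule is disjunctive.
    if n_asp >= pen_asp and n_pen >= pen_pen:
        return "penetration"
    return "normal"


def evaluate(y_true, y_pred, classes=CLASSES) -> dict:
    """Accuracy plus micro- and macro-averaged precision and recall for single-label data."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class p was wrong
            fn[t] += 1  # true class t was missed

    def ratio(a, b):
        return a / b if b else 0.0  # treat undefined precision/recall as 0

    macro_p = sum(ratio(tp[c], tp[c] + fp[c]) for c in classes) / len(classes)
    macro_r = sum(ratio(tp[c], tp[c] + fn[c]) for c in classes) / len(classes)
    total_tp = sum(tp[c] for c in classes)
    return {
        "accuracy": ratio(total_tp, len(y_true)),
        "micro_precision": ratio(total_tp, total_tp + sum(fp[c] for c in classes)),
        "micro_recall": ratio(total_tp, total_tp + sum(fn[c] for c in classes)),
        "macro_precision": macro_p,
        "macro_recall": macro_r,
    }
```

For single-label multiclass data, micro-averaged precision and recall both equal accuracy, so macro-averaging is the view that exposes weak performance on the minority classes.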

By combining several deep learning methods, this study shows how automated analysis can support the clinical evaluation of dysphagia, and it highlights the interplay of data integrity, model design, and clinical expertise in building such tools. Continued work on automated diagnostic frameworks could help improve outcomes for patients with dysphagia.