Advancements in Fire Detection: A Five-Stage Network Architecture

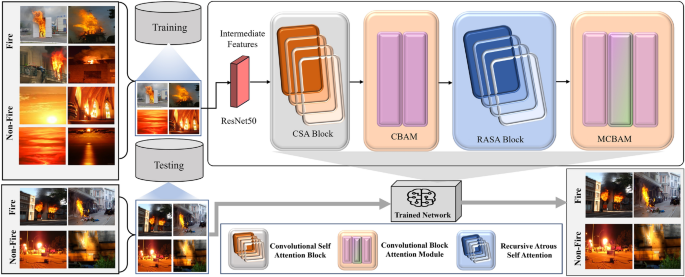

Fire detection is an active application area for deep learning and computer vision. This article describes a proposed network architecture for image-based fire detection. As illustrated in Fig. 1, the architecture chains five feature-processing stages and a classification head: ResNet50 → CSA → CBAM → RASA → MCBAM → Classification. Each stage refines the feature representation produced by the previous one before the final classification.

Feature Extraction with ResNet50

The backbone of the proposed network is ResNet50, a widely used residual network. It takes input images of size \(256 \times 256 \times 3\) and, at its conv5_x output, produces feature maps of size \(8 \times 8 \times 2048\), an overall downsampling factor of 32. This choice balances efficiency and expressiveness: the \(8 \times 8\) spatial resolution keeps the cost of the later stages manageable, while the 2048-dimensional feature vector at each position carries the high-level semantic information that the subsequent stages refine.

Formally, the feature extraction step is:

\[
\textbf{F} = \text{ResNet50}(\textbf{I})
\]

where \(\textbf{I} \in \mathbb{R}^{B \times 3 \times 256 \times 256}\) denotes a batch of \(B\) input images and \(\textbf{F} \in \mathbb{R}^{B \times 2048 \times 8 \times 8}\) the extracted features, both written in channels-first layout.
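The \(8 \times 8\) output size follows from ResNet50's five stride-2 downsampling steps (\(256 / 2^5 = 8\)). The sketch below traces the spatial size stage by stage in plain Python. The kernel, stride, and padding values are those of the standard torchvision ResNet50 layout, which we assume the backbone follows; the helper names are illustrative.

```python
def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output length of a convolution or pooling layer along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


def resnet50_shapes(size: int = 256) -> list:
    """Return (stage, channels, spatial size) after each ResNet50 stage.

    Assumes the standard torchvision layout: a 7x7/2 stem convolution, a 3x3/2
    max pool, and a stride-2 3x3 convolution opening conv3_x, conv4_x, conv5_x.
    """
    shapes = []
    size = conv_out(size, kernel=7, stride=2, padding=3)  # conv1 stem
    shapes.append(("conv1", 64, size))
    size = conv_out(size, kernel=3, stride=2, padding=1)  # max pool; conv2_x keeps stride 1
    shapes.append(("conv2_x", 256, size))
    for stage, channels in [("conv3_x", 512), ("conv4_x", 1024), ("conv5_x", 2048)]:
        size = conv_out(size, kernel=3, stride=2, padding=1)  # first block downsamples
        shapes.append((stage, channels, size))
    return shapes


for stage, channels, size in resnet50_shapes(256):
    print(f"{stage}: {size} x {size} x {channels}")
```

The last line printed is `conv5_x: 8 x 8 x 2048`, matching the feature-map shape stated above.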

Convolutional Self-Attention (CSA) Module

The Convolutional Self-Attention (CSA) module operates on the ResNet50 features and combines local feature enhancement with global context modeling. It first applies depthwise separable convolutions to capture spatial patterns efficiently, then generates the query, key, and value tensors that drive the attention mechanism.
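To show why depthwise (separable) convolutions are the efficient choice on these features, the sketch below counts the weights of a \(3 \times 3\) convolution on the 2048-channel conv5_x output, ignoring biases. It assumes the convolution keeps 2048 output channels; the function names are illustrative.

```python
def standard_conv_weights(c_in: int, c_out: int, k: int) -> int:
    # Every output channel mixes all input channels over a k x k window.
    return c_in * c_out * k * k


def depthwise_conv_weights(channels: int, k: int) -> int:
    # One k x k filter per channel, with no cross-channel mixing.
    return channels * k * k


def depthwise_separable_weights(c_in: int, c_out: int, k: int) -> int:
    # Depthwise k x k filters followed by a 1 x 1 pointwise convolution.
    return depthwise_conv_weights(c_in, k) + c_in * c_out


C = 2048
print(standard_conv_weights(C, C, 3))        # 37748736
print(depthwise_conv_weights(C, 3))          # 18432
print(depthwise_separable_weights(C, C, 3))  # 4212736
```

The depthwise filters alone are 2048 times smaller than a full \(3 \times 3\) convolution, and the depthwise-plus-pointwise pair is roughly 9 times smaller.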

The CSA module has three main components:

- Local Context Extraction: The feature map from ResNet50 undergoes a depthwise \(3 \times 3\) convolution, which preserves spatial patterns while enhancing local features.

\[
\textbf{F}_{\text{local}} = \text{DWConv}_{3 \times 3}(\textbf{F})
\]

- Query, Key, Value Generation: Three parallel \(1 \times 1\) convolutions create the query, key, and value representations used by the self-attention mechanism.

- Multi-Head Attention Computation: The Q, K, and V tensors are reshaped so that their channels are split across several attention heads. Each head computes attention weights between all \(8 \times 8 = 64\) spatial positions, so every position can draw on relevant features anywhere in the map, and different heads can focus on different subsets of the features.

By pairing the depthwise local branch with global multi-head attention, CSA enriches each position's features with both neighborhood detail and image-wide context.
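The three CSA steps can be sketched in NumPy for a single feature map of shape \((C, H, W)\), with the batch dimension omitted. This is an illustrative reading of the description, not the authors' implementation: it assumes Q, K, and V are all computed from \(\textbf{F}_{\text{local}}\), uses plain matrices as \(1 \times 1\) convolution weights, and returns the attention output without a residual connection or output projection, since the text does not specify either.

```python
import numpy as np


def depthwise_conv3x3(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Depthwise 3x3 convolution, stride 1, zero padding 1.

    x: (C, H, W) feature map; w: (C, 3, 3), one filter per channel.
    """
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros_like(x, dtype=float)
    for i in range(3):
        for j in range(3):
            out += w[:, i, j][:, None, None] * xp[:, i:i + h, j:j + wd]
    return out


def conv1x1(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Pointwise convolution: x (C_in, H, W), w (C_out, C_in) -> (C_out, H, W)."""
    return np.einsum("oc,chw->ohw", w, x)


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def csa(f: np.ndarray, params: dict, num_heads: int) -> np.ndarray:
    """Sketch of CSA: depthwise local context, 1x1 Q/K/V, multi-head attention."""
    c, h, w = f.shape
    d = c // num_heads  # channels per head
    f_local = depthwise_conv3x3(f, params["dw"])  # F_local = DWConv3x3(F)
    q, k, v = (conv1x1(f_local, params[name]) for name in ("wq", "wk", "wv"))

    def split_heads(t: np.ndarray) -> np.ndarray:
        # (C, H, W) -> (heads, H*W, d): each head sees all positions, d channels
        return t.reshape(num_heads, d, h * w).transpose(0, 2, 1)

    qh, kh, vh = split_heads(q), split_heads(k), split_heads(v)
    attn = softmax(qh @ kh.transpose(0, 2, 1) / np.sqrt(d))  # (heads, HW, HW)
    out = attn @ vh  # (heads, HW, d)
    return out.transpose(0, 2, 1).reshape(c, h, w)  # merge heads back to (C, H, W)


rng = np.random.default_rng(0)
C, H, W, heads = 16, 8, 8, 4  # toy channel count; the article's features have C = 2048
params = {"dw": rng.standard_normal((C, 3, 3)) * 0.1}
params.update({name: rng.standard_normal((C, C)) / np.sqrt(C) for name in ("wq", "wk", "wv")})
out = csa(rng.standard_normal((C, H, W)), params, heads)
print(out.shape)  # (16, 8, 8)
```

Because each attention matrix is \(64 \times 64\) at this resolution, the cost of the global step stays small even with 2048 channels.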

Attention Mechanisms: CBAM and MCBAM

The next component is the Convolutional Block Attention Module (CBAM), which refines the CSA output with channel-wise and spatial attention. Channel attention reweights feature channels according to their importance, while spatial attention reweights positions in the \(8 \times 8\) map, so the network can emphasize both which features and which locations matter.
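For reference, the channel-attention branch of the original CBAM design pools each channel by average and by max, passes both vectors through a shared two-layer MLP, and applies a sigmoid. The NumPy sketch below follows that standard formulation; the reduction ratio `r` and weight shapes are illustrative, and the article does not state whether its CBAM changes this branch.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(f: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Channel weights in (0, 1) for f of shape (C, H, W).

    w1: (C // r, C) and w2: (C, C // r) form the shared MLP with reduction ratio r.
    """
    avg = f.mean(axis=(1, 2))  # (C,) average-pooled descriptor
    mx = f.max(axis=(1, 2))    # (C,) max-pooled descriptor

    def mlp(v: np.ndarray) -> np.ndarray:
        return w2 @ np.maximum(w1 @ v, 0.0)  # FC -> ReLU -> FC, shared by both inputs

    return sigmoid(mlp(avg) + mlp(mx))


def apply_channel_attention(f: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    # Broadcast the (C,) weights over the spatial dimensions.
    return f * channel_attention(f, w1, w2)[:, None, None]


rng = np.random.default_rng(0)
C, r = 32, 8
f = rng.standard_normal((C, 8, 8))
w1 = rng.standard_normal((C // r, C)) / np.sqrt(C)
w2 = rng.standard_normal((C, C // r)) / np.sqrt(C // r)
refined = apply_channel_attention(f, w1, w2)
print(refined.shape)  # (32, 8, 8)
```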

To improve computational efficiency, the \(7 \times 7\) convolution in CBAM's spatial attention branch is replaced with a \(3 \times 3\) convolution followed by a \(1 \times 1\) convolution:

\[
M_{\text{Sa}} = f^{1 \times 1}\left(f^{3 \times 3}\left(\phi_{\text{Sa-avg}} \oplus \phi_{\text{Sa-max}}\right)\right)
\]

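Here \(\phi_{\text{Sa-avg}}\) and \(\phi_{\text{Sa-max}}\) are the average- and max-pooled maps taken across channels, and \(\oplus\) combines them (channel-wise concatenation in standard CBAM). The smaller kernels reduce the parameters and computation of the spatial branch while still aggregating information from each position's spatial neighborhood.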
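The modified spatial-attention branch can be sketched in NumPy as below, under several assumptions: \(\oplus\) is taken as channel-wise concatenation (as in standard CBAM), the \(3 \times 3\) convolution outputs a hypothetical `c_mid` channels, no activation sits between the two convolutions, and a sigmoid turns \(M_{\text{Sa}}\) into weights before it rescales the features, as in the original CBAM.

```python
import numpy as np


def conv2d(x: np.ndarray, w: np.ndarray, padding: int) -> np.ndarray:
    """Stride-1 2D convolution (cross-correlation, no bias).

    x: (C_in, H, W); w: (C_out, C_in, k, k) -> (C_out, H', W').
    """
    k = w.shape[2]
    xp = np.pad(x, ((0, 0), (padding, padding), (padding, padding)))
    h_out, w_out = xp.shape[1] - k + 1, xp.shape[2] - k + 1
    out = np.zeros((w.shape[0], h_out, w_out))
    for i in range(k):
        for j in range(k):
            patch = xp[:, i:i + h_out, j:j + w_out]  # (C_in, H', W')
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], patch)
    return out


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def modified_spatial_attention(f: np.ndarray, w3: np.ndarray, w1: np.ndarray) -> np.ndarray:
    """f: (C, H, W); w3: (c_mid, 2, 3, 3); w1: (1, c_mid, 1, 1)."""
    pooled = np.stack([f.mean(axis=0), f.max(axis=0)])  # concat(phi_avg, phi_max): (2, H, W)
    m_sa = conv2d(conv2d(pooled, w3, padding=1), w1, padding=0)  # f1x1(f3x3(.)): (1, H, W)
    return f * sigmoid(m_sa)  # spatial weights broadcast across channels


c_mid = 4  # hypothetical width of the 3x3 convolution
rng = np.random.default_rng(0)
f = rng.standard_normal((16, 8, 8))
w3 = rng.standard_normal((c_mid, 2, 3, 3)) * 0.1
w1 = rng.standard_normal((1, c_mid, 1, 1))
print(modified_spatial_attention(f, w3, w1).shape)  # (16, 8, 8)

# Weight counts for the spatial branch (2 input maps, 1 output map, no biases).
print("7x7 conv:", 2 * 1 * 7 * 7)                            # 98
print("3x3 + 1x1 conv:", 2 * c_mid * 3 * 3 + c_mid * 1 * 1)  # 76
```

The saving depends on the intermediate width: the pair has \(19 \cdot c_{\text{mid}}\) weights, which is below the 98 of a single \(7 \times 7\) kernel only for \(c_{\text{mid}} \le 5\).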

A Modified CBAM (MCBAM) is applied later in the pipeline, to the outputs of the Recursive Atrous Self-Attention (RASA) module described below. MCBAM follows the same principles as CBAM but is adapted to the multi-scale features produced by RASA.

Recursive Atrous Self-Attention (RASA)

The RASA module combines recursive processing with multi-scale context modeling. Atrous (dilated) convolutions with dilation rates of 1, 3, and 5 let it aggregate context over several receptive-field sizes.
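Assuming \(3 \times 3\) kernels (the kernel size is not stated above), the dilation rates translate into the following per-axis spans and the zero padding needed to keep the \(8 \times 8\) resolution. A quick calculation in Python:

```python
def effective_span(k: int, d: int) -> int:
    # A k x k kernel with dilation d samples k taps spaced d apart,
    # covering k + (k - 1) * (d - 1) pixels along each axis.
    return k + (k - 1) * (d - 1)


def same_padding(k: int, d: int) -> int:
    # Zero padding per side that keeps output size equal to input size (odd k).
    return d * (k - 1) // 2


for d in (1, 3, 5):
    print(f"dilation {d}: span {effective_span(3, d)}, padding {same_padding(3, d)}")
# dilation 1: span 3, padding 1
# dilation 3: span 7, padding 3
# dilation 5: span 11, padding 5
```

At dilation 5 the span (11 pixels) exceeds the 8-pixel map, so at every position at least one outer tap along each axis falls on zero padding; the three rates together cover neighborhoods ranging from immediate to nearly map-wide.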

A core component of RASA is Atrous Self-Attention (ASA), which integrates these multi-scale features into the attention computation. Its queries are derived from the enhanced features of the previous layer and refined with a SiLU activation.
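A minimal NumPy sketch of an ASA-style layer follows. The description fixes only the ingredients (atrous branches with dilations 1, 3, and 5 and a SiLU on the queries), so the wiring here is an assumption: the query is a \(1 \times 1\) projection refined by three dilated depthwise \(3 \times 3\) convolutions that share one kernel, each passed through SiLU and summed; keys and values are plain \(1 \times 1\) projections, and attention is single-head.

```python
import numpy as np


def dilated_depthwise3x3(x: np.ndarray, w: np.ndarray, d: int) -> np.ndarray:
    """Depthwise 3x3 convolution with dilation d and 'same' zero padding.

    x: (C, H, W); w: (C, 3, 3).
    """
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (d, d), (d, d)))
    out = np.zeros_like(x, dtype=float)
    for i in range(3):
        for j in range(3):
            # tap (i, j) reads the input offset by ((i - 1) * d, (j - 1) * d)
            out += w[:, i, j][:, None, None] * xp[:, i * d:i * d + h, j * d:j * d + wd]
    return out


def silu(z: np.ndarray) -> np.ndarray:
    return z / (1.0 + np.exp(-z))  # z * sigmoid(z)


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def asa(x: np.ndarray, params: dict, dilations=(1, 3, 5)) -> np.ndarray:
    """ASA-style attention on x of shape (C, H, W); returns the same shape."""
    c, h, w = x.shape
    proj = lambda name: np.einsum("oc,chw->ohw", params[name], x)  # 1x1 conv
    q0 = proj("wq")
    # Multi-scale query: SiLU-activated atrous branches with a shared kernel, summed.
    q = sum(silu(dilated_depthwise3x3(q0, params["dw"], d)) for d in dilations)
    q, k, v = (t.reshape(c, h * w) for t in (q, proj("wk"), proj("wv")))
    attn = softmax(q.T @ k / np.sqrt(c))  # (HW, HW) position-to-position weights
    return (v @ attn.T).reshape(c, h, w)  # weighted sum of values per position


rng = np.random.default_rng(0)
C = 16
params = {name: rng.standard_normal((C, C)) / np.sqrt(C) for name in ("wq", "wk", "wv")}
params["dw"] = rng.standard_normal((C, 3, 3)) * 0.1
print(asa(rng.standard_normal((C, 8, 8)), params).shape)  # (16, 8, 8)
```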

RASA applies ASA recursively: the output of each iteration is combined with the CBAM features and fed back as the input to the next iteration:

\[
H_{m} = \text{ASA}\left(f\left(\textbf{F}_{\text{CBAM}}, H_{m-1}\right)\right)
\]

Here, the recursion is kept to two iterations, striking a balance between computational feasibility and performance enhancement.
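The two-iteration recursion can be sketched as a loop in which each iteration's output is fed back into the next. Everything except the loop structure is an assumption: the combining function \(f\) is a hypothetical \(1 \times 1\) projection of the channel-wise concatenation of \(\textbf{F}_{\text{CBAM}}\) and the previous output, the initial state is assumed to be \(\textbf{F}_{\text{CBAM}}\) itself, and plain single-head self-attention stands in for ASA to keep the example short.

```python
import numpy as np


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(x: np.ndarray) -> np.ndarray:
    """Stand-in for ASA: single-head self-attention without projections. x: (C, H, W)."""
    c, h, w = x.shape
    t = x.reshape(c, h * w)
    attn = softmax(t.T @ t / np.sqrt(c))  # (HW, HW)
    return (t @ attn.T).reshape(c, h, w)


def combine(f_cbam: np.ndarray, h_prev: np.ndarray, w_f: np.ndarray) -> np.ndarray:
    """Hypothetical f(F_CBAM, H_prev): 1x1 projection of the concatenation. w_f: (C, 2C)."""
    stacked = np.concatenate([f_cbam, h_prev], axis=0)  # (2C, H, W)
    return np.einsum("oc,chw->ohw", w_f, stacked)


def rasa(f_cbam: np.ndarray, w_f: np.ndarray, iterations: int = 2, asa=self_attention):
    h = f_cbam  # assumed initial state
    for _ in range(iterations):
        h = asa(combine(f_cbam, h, w_f))  # new state = ASA(f(F_CBAM, previous state))
    return h


rng = np.random.default_rng(0)
C = 16
f_cbam = rng.standard_normal((C, 8, 8))
w_f = rng.standard_normal((C, 2 * C)) / np.sqrt(2 * C)
print(rasa(f_cbam, w_f).shape)  # (16, 8, 8)
```

The `asa` argument lets a full ASA implementation replace the stand-in without changing the loop, and `iterations` exposes the recursion depth that the text fixes at two.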

Conclusion

The proposed five-stage architecture provides a structured framework for fire detection. ResNet50 extracts semantic features, CSA adds local and global context, CBAM and MCBAM reweight channels and spatial positions, and RASA models multi-scale context recursively. Because each module progressively refines the features from the previous one, attending to both local and global context, the design aims to improve accuracy in challenging fire detection scenarios.

As computer vision continues to advance, architectures of this kind may find practical use in fire safety and emergency response.