Overview of the Data and Methods for Generating Segmentation Masks of Atmospheric Rivers, Tropical Cyclones, and Blocking Events

Introduction

Understanding extreme weather events such as Atmospheric Rivers (ARs), Tropical Cyclones (TCs), and blocking patterns is essential for effective climate modeling and prediction. These phenomena are complex and have no single agreed-upon definition, so analyzing them requires carefully labeled datasets. In this article, we present a detailed overview of the data and methods used to develop our dataset of segmentation masks for ARs, TCs, and blocking events. We describe the sources of our reanalysis data, the preprocessing that precedes labeling, the labeling process itself, and how we package the final dataset.

Reanalysis Data

Our dataset is built on the European Centre for Medium-Range Weather Forecasts (ECMWF) Reanalysis v5 (ERA5), which is publicly available through the Copernicus Climate Data Store. ERA5 provides hourly fields for many variables relevant to extreme weather events. We used hourly timesteps for ARs and TCs and daily timesteps for blocking events.

For ARs and TCs, we selected fields of total column water vapor (TCWV), vertically integrated water vapor transport (IVT), and mean sea level pressure (MSLP) between 1980 and 2022, for a total of 9,850 uniformly sampled timesteps. For blocking events, we used consecutive daily timesteps from 2000 to 2013, because blocks persist over several days and can only be identified from an unbroken temporal record.
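The two sampling schemes described above can be sketched as follows. This is a minimal Python illustration, not the pipeline used to build the dataset: it assumes that "uniformly sampled" means drawing distinct hourly timesteps uniformly at random, that each period runs from 1 January of its first year to 31 December of its last, and the function names and random seed are hypothetical.

```python
import random
from datetime import date, datetime, timedelta


def sample_hourly_timesteps(start, end, n, seed=0):
    """Draw n distinct hourly timestamps uniformly at random from [start, end]."""
    total_hours = int((end - start) / timedelta(hours=1)) + 1
    rng = random.Random(seed)  # fixed seed so the selection is reproducible
    offsets = sorted(rng.sample(range(total_hours), n))
    return [start + timedelta(hours=h) for h in offsets]


def consecutive_days(start, end):
    """Every calendar day from start to end, inclusive, with no gaps."""
    n_days = (end - start).days + 1
    return [start + timedelta(days=d) for d in range(n_days)]


# AR/TC timesteps: 9,850 random hours from 1980 to 2022 (assumed endpoints).
ar_tc_times = sample_hourly_timesteps(datetime(1980, 1, 1), datetime(2022, 12, 31, 23), 9850)

# Blocking timesteps: an unbroken daily record from 2000 to 2013.
blocking_days = consecutive_days(date(2000, 1, 1), date(2013, 12, 31))
```

Random sampling spreads the AR/TC timesteps across seasons and years without favoring any period, whereas the blocking record must stay contiguous so that multi-day persistence remains visible.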

To avoid biases from changes in the historical observing system, we limited our dataset to the period after 1980, when satellite observations began to provide reliable data. This helps keep the underlying reanalysis consistent across the period covered.

Labeling Tool and Crowd-Sourcing Annotators

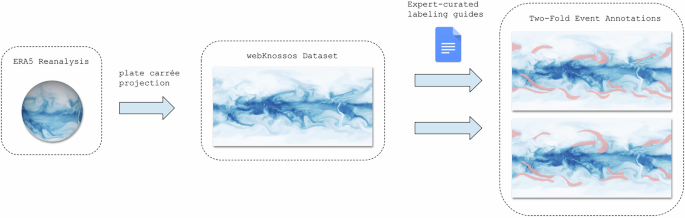

Labeling data for extreme weather events is a complex and specialized task that poses numerous challenges, particularly at scale. Standard crowd-labeling approaches often fall short because the task requires domain expertise and because atmospheric data are multi-channel and unlike everyday images. To tackle these issues, we collaborated with webKnossos, a platform originally designed for biomedical image segmentation.

The webKnossos framework can present complex, multi-channel data effectively. It includes tools to monitor progress, update instructions based on user feedback, and flag potential quality concerns. Features such as adjustable display scales for variables like IVT give annotators what they need to identify ARs and TCs efficiently.

Each timestep presents annotators with multiple channels to examine side by side, which streamlines annotation. The time needed to label a single timestep varied widely, from under a minute for some annotators to over ten minutes for others, reflecting differing levels of familiarity with these events.

Labeling Guides

To ensure consistency and quality in the labeling process, we developed comprehensive guides informed by climate experts specializing in ARs, TCs, and blocking events. The guides include background information on each phenomenon, step-by-step instructions for the webKnossos platform, and illustrative examples.

Continuous interaction with the crowd-labelers was central to our approach. We iteratively updated the guides in response to incoming label quality and annotator questions, which helped annotators learn and kept the instructions clear. This iteration aimed to balance consistency across annotators against the risk of imposing an overly narrow, biased definition of each extreme event.

The guides also gave event-specific instructions. AR annotators were encouraged to look for regions of high TCWV and IVT, focusing on the long, narrow corridors characteristic of ARs. TC annotators used MSLP to locate low-pressure centers and were instructed to look for the spiraling features characteristic of TCs.
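The AR instruction ("high IVT, long and narrow") can be illustrated with a crude automated analogue. This sketch is not part of the labeling guides, which relied on annotators' visual judgment: the IVT threshold of 250 kg m⁻¹ s⁻¹ (a value often used in the AR literature), the minimum elongation of 2, and all function names are assumptions. Elongation is measured here as the ratio of the principal-axis spreads of each region's cell coordinates, a swapped-in shape measure chosen because, unlike a bounding box, it also recognizes diagonal filaments. It ignores longitude wraparound and the narrowing of grid cells toward the poles.

```python
import math
from collections import deque


def connected_components(mask):
    """Group 4-connected True cells of a 2D boolean grid into lists of (row, col)."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    components = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                queue, cells = deque([(r, c)]), []
                seen[r][c] = True
                while queue:  # breadth-first flood fill
                    y, x = queue.popleft()
                    cells.append((y, x))
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                components.append(cells)
    return components


def elongation(cells):
    """sqrt(largest / smallest eigenvalue) of the cells' 2x2 coordinate covariance."""
    n = len(cells)
    my = sum(y for y, _ in cells) / n
    mx = sum(x for _, x in cells) / n
    syy = sum((y - my) ** 2 for y, _ in cells) / n
    sxx = sum((x - mx) ** 2 for _, x in cells) / n
    sxy = sum((y - my) * (x - mx) for y, x in cells) / n
    half_trace = (sxx + syy) / 2
    spread = math.sqrt(((sxx - syy) / 2) ** 2 + sxy ** 2)
    lam_max, lam_min = half_trace + spread, half_trace - spread
    if lam_max <= 0.0:
        return 1.0  # a single cell has no shape
    if lam_min <= 1e-12:
        return math.inf  # cells lie on a line: maximally elongated
    return math.sqrt(lam_max / lam_min)


def ar_candidates(ivt, threshold=250.0, min_elongation=2.0):
    """High-IVT regions that are at least `min_elongation` times longer than wide."""
    mask = [[value >= threshold for value in row] for row in ivt]
    return [cells for cells in connected_components(mask) if elongation(cells) >= min_elongation]


# Usage: a hypothetical 6 x 10 IVT field with one long filament and one compact blob.
ivt = [[0.0] * 10 for _ in range(6)]
for col in range(1, 9):
    ivt[1][col] = 400.0  # elongated band along row 1
for row in (4, 5):
    for col in (1, 2):
        ivt[row][col] = 500.0  # compact 2 x 2 blob
candidates = ar_candidates(ivt)  # keeps only the band
```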

Data Packaging

ERA5 data are provided on a regular latitude-longitude grid at 0.25° × 0.25° resolution. For annotation, we converted the NetCDF ERA5 files into Python arrays and displayed them in a plate carrée (equirectangular) projection, which matches the grid structure of the dataset so that grid cells map onto a regular pixel grid without resampling. This preparation allows seamless integration into the webKnossos interface.
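Because plate carrée is linear in both latitude and longitude, converting between geographic coordinates and pixel indices on a 0.25° global grid is simple arithmetic. The sketch below assumes latitude runs from 90°N (row 0) to 90°S and longitude from 0°E to 359.75°E, the ordering commonly found in ERA5 files from the Climate Data Store; check the coordinate order of your own files before relying on it. The constant and function names are hypothetical.

```python
RESOLUTION = 0.25                   # degrees per grid cell
N_LAT = int(180 / RESOLUTION) + 1   # 721 rows: 90°N to 90°S, both poles included
N_LON = int(360 / RESOLUTION)       # 1440 columns: 0°E to 359.75°E


def latlon_to_pixel(lat, lon):
    """Nearest (row, col) on the assumed north-to-south, 0-360° plate carrée grid."""
    if not -90.0 <= lat <= 90.0:
        raise ValueError("latitude must lie in [-90, 90]")
    row = round((90.0 - lat) / RESOLUTION)
    col = round((lon % 360.0) / RESOLUTION) % N_LON  # wrap -180..180 input and 360°E
    return row, col


def pixel_to_latlon(row, col):
    """Inverse mapping: grid-cell center in degrees (lat, lon)."""
    return 90.0 - row * RESOLUTION, col * RESOLUTION
```

For example, `latlon_to_pixel(0.0, -180.0)` returns `(360, 720)`: the equator sits in the middle row and the antimeridian in the middle column.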

After annotation, the labels are packaged into user-friendly NetCDF files. ARs and TCs are stored as individual timesteps, while blocking events are stored as sequences of 10 consecutive days. This structure makes the data easy to access and reuse in later analyses.
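Grouping the daily blocking record into 10-day sequences can be sketched as below. The source specifies only the sequence length; that windows do not overlap, that an incomplete trailing window is dropped, and that windows spanning a gap in the record are discarded are assumptions of this sketch, and the function names are hypothetical.

```python
from datetime import date, timedelta


def is_consecutive(window):
    """True if each date is exactly one day after the previous one."""
    return all(b - a == timedelta(days=1) for a, b in zip(window, window[1:]))


def blocking_sequences(days, length=10):
    """Split a list of daily dates into non-overlapping windows of `length` days.

    Incomplete trailing windows and windows that span a gap are dropped.
    """
    windows = [days[i:i + length] for i in range(0, len(days) - length + 1, length)]
    return [w for w in windows if is_consecutive(w)]


# Usage: 25 consecutive days yield two complete 10-day sequences.
days = [date(2000, 1, 1) + timedelta(days=d) for d in range(25)]
sequences = blocking_sequences(days)
```

Checking consecutiveness explicitly guards against silent gaps, which would otherwise break the multi-day persistence that defines a block.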

Data Overview

This section compares our hand-labeled extreme weather events with existing observational datasets and detection methods, a necessary step in assessing the reliability and accuracy of the labels.

For ARs, we compared our labels with the tARget detection algorithm, which is continuously updated. The two agree reasonably well on the locations of high-activity zones, such as the subtropical Pacific and Atlantic, but our dataset consistently shows AR frequencies above 30% in several oceanic regions, compared with 10-12% for tARget. Discrepancies of this size are typical of manually annotated datasets and point to inherent uncertainty in how ARs are defined.
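The frequency percentages above can be read as the share of timesteps in which a grid cell falls inside a labeled event. The sketch below computes that quantity from binary masks; the article does not spell out its exact definition, so this formulation, the plain nested-list representation, and the function name are assumptions.

```python
def label_frequency(masks):
    """Percentage of timesteps in which each grid cell is labeled.

    `masks` is a list of same-shape 2D grids of 0/1 (or bool) values, one per timestep.
    """
    n = len(masks)
    rows, cols = len(masks[0]), len(masks[0][0])
    counts = [[0] * cols for _ in range(rows)]
    for mask in masks:
        for r in range(rows):
            for c in range(cols):
                counts[r][c] += 1 if mask[r][c] else 0
    return [[100.0 * counts[r][c] / n for c in range(cols)] for r in range(rows)]
```

Under this definition, a cell labeled in 3 of every 10 sampled timesteps has a frequency of 30%.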

For TCs, we compared our results with key studies, including those by Sobel et al. and Knapp et al. The spatial pattern of TC frequency in our dataset closely matches the known regions of high activity, supporting the validity of our annotations. Our frequencies are higher, however, because our labels cover the full segmented area of each TC rather than only its track.
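A toy example shows why area-based labels raise frequencies relative to track-based counts. Here a hypothetical storm moves east along one row of an 11 × 11 grid for six timesteps; a track marks only the center cell at each step, while a segmentation mask, idealized as a disk of radius 2 cells, marks every cell it covers. The grid size, radius, and function names are illustrative assumptions, not properties of the dataset.

```python
ROWS, COLS, RADIUS = 11, 11, 2
track = [(5, x) for x in range(2, 8)]  # hypothetical storm centers, one per timestep


def point_mask(rows, cols, center):
    """Track-style label: only the storm-center cell."""
    return [[(r, c) == center for c in range(cols)] for r in range(rows)]


def disk_mask(rows, cols, center, radius):
    """Segmentation-style label: every cell within `radius` cells of the center."""
    cy, cx = center
    return [[(r - cy) ** 2 + (c - cx) ** 2 <= radius ** 2 for c in range(cols)]
            for r in range(rows)]


def total_labeled(masks):
    """Number of labeled (cell, timestep) pairs."""
    return sum(sum(map(sum, m)) for m in masks)


def hits_at(masks, cell):
    """Number of timesteps in which `cell` is labeled."""
    r, c = cell
    return sum(m[r][c] for m in masks)


point_masks = [point_mask(ROWS, COLS, p) for p in track]
disk_masks = [disk_mask(ROWS, COLS, p, RADIUS) for p in track]
```

The track labels 6 cell-timesteps in total, while the disks label 78 (13 cells per step). A cell just north of the track, such as `(4, 4)`, is never a storm center but lies inside the disk mask at 3 of the 6 steps, so its frequency rises from zero.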

Lastly, we assessed atmospheric blocking by comparing our results with a study by Pinheiro et al. Our findings corroborated their observations, showing good agreement in both magnitude and spatial distribution, especially during peak seasons.
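Seasonal agreement of this kind is typically assessed by grouping days into seasons and computing how often a location is blocked in each. The sketch below uses meteorological seasons (DJF, MAM, JJA, SON) for a single grid cell; this grouping and the function name are assumptions for illustration, not necessarily the procedure of Pinheiro et al. or of our comparison.

```python
from datetime import date

# Meteorological seasons keyed by calendar month (assumed grouping).
SEASONS = {12: "DJF", 1: "DJF", 2: "DJF", 3: "MAM", 4: "MAM", 5: "MAM",
           6: "JJA", 7: "JJA", 8: "JJA", 9: "SON", 10: "SON", 11: "SON"}


def seasonal_blocking_frequency(days, blocked):
    """Percentage of days per season on which one grid cell is blocked.

    `days` is a list of dates; `blocked` is a parallel list of booleans.
    Seasons with no days in the input are omitted from the result.
    """
    totals, hits = {}, {}
    for day, is_blocked in zip(days, blocked):
        season = SEASONS[day.month]
        totals[season] = totals.get(season, 0) + 1
        hits[season] = hits.get(season, 0) + (1 if is_blocked else 0)
    return {s: 100.0 * hits[s] / totals[s] for s in totals}
```

Applying the same function to two blocking datasets at every grid cell yields seasonal maps that can be compared in both magnitude and spatial pattern.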

Challenges and Limitations

While our dataset is a robust resource for machine learning applications and climate studies, it has inherent limitations. The labels delineate only the areas covered by extreme events; they do not include details such as centroid locations, direction of motion, or context-specific climate characteristics.

Furthermore, weather parameters that are essential for characterizing an event, such as precipitation, wind speed, and temperature, are not included in the labels. They can, however, be extracted from the underlying reanalysis data at the corresponding timesteps.
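Users who need such properties can derive them from a mask and a co-located reanalysis field. The sketch below computes an approximate area-weighted centroid of the labeled cells and a latitude-weighted mean of any field (for example, precipitation at the same timestep) over those cells. It assumes a global plate carrée grid with row 0 at 90°N, column 0 at 0°E, and a field already on the same grid as the mask; the weighted-mean latitude is an approximation suited to compact regions, and all function names are hypothetical.

```python
import math


def row_latitudes(n_rows, res):
    """Latitude of each row for a global grid ordered north to south (row 0 = 90°N)."""
    return [90.0 - r * res for r in range(n_rows)]


def mask_centroid(mask, res):
    """Approximate area-weighted centroid (lat, lon) of the labeled cells.

    Longitude is averaged on the unit circle, so events straddling the
    0°/360° meridian get a sensible centroid. Returns None for an empty mask.
    """
    w_sum = lat_sum = sin_sum = cos_sum = 0.0
    for lat, row in zip(row_latitudes(len(mask), res), mask):
        w = math.cos(math.radians(lat))  # grid-cell area shrinks toward the poles
        for c, labeled in enumerate(row):
            if labeled:
                lon = math.radians(c * res)
                w_sum += w
                lat_sum += w * lat
                sin_sum += w * math.sin(lon)
                cos_sum += w * math.cos(lon)
    if w_sum == 0.0:
        return None  # no labeled cells
    return lat_sum / w_sum, math.degrees(math.atan2(sin_sum, cos_sum)) % 360.0


def masked_area_mean(field, mask, res):
    """cos(latitude)-weighted mean of `field` over the labeled cells (NaN if none)."""
    num = den = 0.0
    for lat, values, labels in zip(row_latitudes(len(mask), res), field, mask):
        w = math.cos(math.radians(lat))
        for value, labeled in zip(values, labels):
            if labeled:
                num += w * value
                den += w
    return num / den if den > 0.0 else float("nan")
```

On the 0.25° ERA5 grid, `res` would be `0.25`; the circular longitude average means a mask covering cells at 0°E and 330°E yields a centroid near 345°E rather than 165°E.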

In summary, this project maps ARs, TCs, and blocking events by combining crowd-sourced annotation with expert guidance, yielding a dataset suited to further analysis and model training.