Harnessing AI for Mental Health Classification: Innovations in NLP and LLM Models

Recent research points to notable advances at the intersection of artificial intelligence and mental health classification. Natural Language Processing (NLP) models and fine-tuned Large Language Models (LLMs) have both shown promising results in classifying mental health conditions from text. In the study discussed here, the NLP model outperformed every other approach tested, reaching an accuracy of 95%, a result with significant implications for mental health diagnostics.

Performance of the Models

In the competitive landscape of mental health classification, the NLP model didn’t just excel; it set the benchmark. The fine-tuned GPT-4o-mini model followed closely with a solid 91% accuracy. The prompt-engineered GPT-4o-mini model, by contrast, lagged well behind at only 65%. This underperformance can be attributed to its lack of domain-specific adaptation, which left it unable to fully grasp the nuanced language people use to express mental health concerns.

Mental health expressions often contain subtle linguistic patterns that may seem ambiguous without the right context. Without the benefit of fine-tuning on domain-specific data, the prompt-engineered model struggled to distinguish between closely related conditions, like anxiety and depression, which share overlapping yet distinctive language characteristics.

Fine-tuning proved beneficial for the GPT-4o-mini model, particularly for complex categories such as Personality Disorder, which tend to trip up more generalized models. Accuracy peaked at 91% after three epochs and then declined with further training, likely because of overfitting: the model becomes overly tailored to its training data, capturing noise that hurts its performance on unseen data.
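The study does not say how training length was controlled. One common guard against the overfitting described above is to score a held-out validation set after every epoch, keep the best-scoring checkpoint, and stop once the score stops improving. The sketch below assumes that setup. `select_best_epoch` and its `patience` parameter are hypothetical names, and the scores in the usage note are made up for illustration.

```python
from typing import Sequence


def select_best_epoch(val_scores: Sequence[float], patience: int = 1) -> int:
    """Return the 1-based epoch with the highest validation score.

    Hypothetical helper: the search stops once the score has failed to
    improve for `patience` consecutive epochs, mimicking early stopping.
    """
    if not val_scores:
        raise ValueError("val_scores must not be empty")
    best_epoch, best_score = 1, val_scores[0]
    epochs_without_improvement = 0
    for epoch, score in enumerate(val_scores[1:], start=2):
        if score > best_score:
            best_epoch, best_score = epoch, score
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # validation score stopped improving: a sign of overfitting
    return best_epoch
```

For example, `select_best_epoch([0.70, 0.80, 0.85, 0.83, 0.82])` returns 3. The score drops at epoch 4, so with `patience=1` the search stops there and epoch 3 is kept. A larger `patience` tolerates brief dips before stopping.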

The NLP model’s accuracy, the highest in the study, was especially strong for conditions like Anxiety and Depression. Although it faced some challenges with rarer conditions, its overall efficiency and its ability to run locally make it an appealing choice for sensitive applications.

Comparing NLP and Fine-Tuned LLM Models

The study used a stratified train-test split. This means each mental health condition appeared in the training and test sets in the same proportion as in the full dataset. The split keeps rare conditions represented in the test set and makes the resulting performance metrics meaningful for both models.
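A stratified split groups examples by label and samples the same fraction from each group. The minimal sketch below shows the idea in standard-library Python and assumes the labels are available as a plain list. `stratified_split` is a hypothetical helper; in practice, scikit-learn's `train_test_split(..., stratify=labels)` does the same job.

```python
import random
from collections import defaultdict
from typing import Hashable, List, Sequence, Tuple


def stratified_split(
    labels: Sequence[Hashable], test_fraction: float = 0.2, seed: int = 0
) -> Tuple[List[int], List[int]]:
    """Split example indices so each label keeps roughly the same share
    in the training and test sets (hypothetical helper)."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    rng = random.Random(seed)

    # Group example indices by their label.
    by_label = defaultdict(list)
    for idx, label in enumerate(labels):
        by_label[label].append(idx)

    # Take the same fraction of each label for the test set.
    train_idx, test_idx = [], []
    for indices in by_label.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)
```

With 80 Anxiety and 20 Bipolar Disorder examples and `test_fraction=0.2`, the test set receives 16 and 4 of each, so the rarer class keeps its 20% share.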

Notably, the NLP model consistently showed high precision, with remarkable scores for conditions that are typically difficult to classify, such as Personality Disorder (99%) and Bipolar Disorder (98%). High precision means few false positives: when the model assigns one of these labels, it is rarely wrong, which makes the classification more robust overall.

Conversely, the fine-tuned model achieved higher precision in more frequent categories but lagged in conditions like Suicidal tendencies and Personality Disorder. Because the split was stratified, these differences reflect the models’ capabilities rather than an unrepresentative test set.

When evaluating recall, the fine-tuned model shone at capturing true positives for underrepresented conditions. It achieved recall scores of 94% for both Personality Disorder and Bipolar Disorder. The NLP model, meanwhile, excelled in recall for conditions like Depression and Suicidal tendencies. This split suggests that the better model depends on which conditions matter most in a given context.
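The precision and recall figures discussed above come from counting true positives, false positives and false negatives for each condition. The minimal sketch below computes them in plain Python. `per_class_precision_recall` is a hypothetical helper; scikit-learn's `precision_recall_fscore_support` computes the same figures. The labels in the usage note are toy examples, not the study's data.

```python
from typing import Dict, Hashable, Sequence, Tuple


def per_class_precision_recall(
    y_true: Sequence[Hashable], y_pred: Sequence[Hashable]
) -> Dict[Hashable, Tuple[float, float]]:
    """Return {label: (precision, recall)} for every label seen in either list."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    results = {}
    for label in set(y_true) | set(y_pred):
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == label and p == label for t, p in pairs)  # correctly flagged
        fp = sum(t != label and p == label for t, p in pairs)  # wrongly flagged
        fn = sum(t == label and p != label for t, p in pairs)  # missed cases
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        results[label] = (precision, recall)
    return results
```

Consider true labels `["Depression", "Depression", "Bipolar", "Bipolar"]` and predictions `["Depression", "Bipolar", "Bipolar", "Bipolar"]`. Depression gets precision 1.0 but recall 0.5, because one case was missed. Bipolar gets recall 1.0 but precision of about 0.67, because one prediction was a false positive.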

Advantages of the NLP Model

The NLP model walks away with several compelling advantages beyond mere accuracy:

- Local Data Processing: Unlike LLMs, which often rely on cloud-based processing, the NLP model runs entirely locally. This is a major advantage for maintaining patient confidentiality in a sensitive field like mental health.

- Interpretable Confidence Scores: The NLP model provides clear confidence scores, allowing healthcare professionals to see how certain the model is about each classification. This transparency is critical for trust in automated systems.

- Efficiency: Its small size lets the NLP model run on standard hardware. This matters for real-time applications, where speed and low computational cost are critical.

- Holistic Assessment: Instead of assigning a single diagnosis, the NLP model presents a distribution of confidence scores across multiple indicators. This aligns with a growing trend toward person-centered mental health care, which recognizes how interconnected various mental health traits are.
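The source does not say how the NLP model computes its confidence scores. A common approach, assumed here, is to apply a softmax to the model's raw per-label scores (logits). This turns them into a probability distribution across all indicators instead of a single label. `confidence_distribution` and the example scores are hypothetical.

```python
import math
from typing import Dict, List, Tuple


def confidence_distribution(logits: Dict[str, float]) -> List[Tuple[str, float]]:
    """Softmax raw per-indicator scores into probabilities that sum to 1,
    sorted from most to least likely (assumed scoring scheme)."""
    if not logits:
        raise ValueError("logits must not be empty")
    max_logit = max(logits.values())  # subtract the max for numerical stability
    exps = {label: math.exp(score - max_logit) for label, score in logits.items()}
    total = sum(exps.values())
    probs = [(label, value / total) for label, value in exps.items()]
    return sorted(probs, key=lambda item: item[1], reverse=True)
```

For instance, hypothetical scores `{"Anxiety": 2.0, "Depression": 1.5, "Bipolar Disorder": -1.0}` yield a ranked list with most weight on Anxiety, substantial weight on Depression, and little on Bipolar Disorder. Subtracting the largest score before exponentiating does not change the result, but it prevents overflow for large logits.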

Metrics in Mental Health Classification

In mental health classification, which performance metric matters most depends on the condition being evaluated. Recall, for instance, carries particular weight for high-risk conditions like suicidal ideation, where failing to identify a true case could have dire consequences.

Precision is crucial when resources must be allocated carefully, especially when rare conditions require focused intervention. Accuracy gives a broad view of overall performance. The F1-score, the harmonic mean of precision and recall, balances the two so that both false positives and false negatives are accounted for.
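For reference, the standard definitions are given below. TP, FP and FN count the true positives, false positives and false negatives for one condition. The last line is a worked example with illustrative values. It shows that F1, as a harmonic mean, is pulled toward the weaker of the two scores; the arithmetic mean here would be 0.75.

```latex
\begin{aligned}
\text{Precision} &= \frac{TP}{TP + FP} \\
\text{Recall} &= \frac{TP}{TP + FN} \\
F_1 &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \\
\text{e.g. } F_1(0.9,\ 0.6) &= \frac{2 \cdot 0.9 \cdot 0.6}{0.9 + 0.6} = \frac{1.08}{1.5} = 0.72
\end{aligned}
```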

Given the dataset’s class imbalance, the F1-score is especially informative. Accuracy can remain high even when a model performs poorly on underrepresented conditions, whereas F1, particularly when averaged across conditions, exposes those weaknesses. Choosing metrics that reflect clinical priorities is therefore essential.
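The minimal sketch below illustrates why accuracy alone can mislead on imbalanced data. It assumes macro averaging, the unweighted mean of per-class F1, which is one common choice; the source does not state which averaging the study used. The function names and toy labels are hypothetical.

```python
from typing import Hashable, Sequence


def accuracy(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Unweighted mean of per-class F1, so rare classes count as much as common ones."""
    f1_scores = []
    for label in set(y_true) | set(y_pred):
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        denom = 2 * tp + fp + fn  # F1 = 2TP / (2TP + FP + FN)
        f1_scores.append(2 * tp / denom if denom else 0.0)
    return sum(f1_scores) / len(f1_scores)
```

Take 95 Depression posts and 5 Personality Disorder posts, with a model that always predicts Depression. Its accuracy is 0.95, but its macro F1 is only about 0.49, because its F1 for Personality Disorder is 0.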

Comparing Model Performance to Human Expertise

Recent studies have prompted discussion of whether NLP methods can rival human expertise in mental health assessment. One such study analyzed cognitive distortions in text conversations between clients and clinicians and achieved F1-scores between 41% and 63%. By comparison, the NLP model discussed here achieved F1-scores between 89% and 97% on a related text classification task. However, the two differ in task and dataset, so the figures are not directly comparable.

However, while AI achieves impressive accuracy, it lacks the nuanced understanding and empathy of human professionals. A hybrid approach that pairs AI with human oversight therefore offers a better balance. Assessments become more efficient, while clinicians still weigh the broader context that empathetic care requires.
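One way to put this hybrid approach into practice is confidence-based triage. This is a hypothetical sketch, not something the study implemented. Predictions are routed to a clinician whenever the model is unsure or the predicted condition is high risk. The 0.8 threshold and the `HIGH_RISK_LABELS` set are placeholder assumptions that would need clinical calibration.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet

# Hypothetical label name; a real system would use its own taxonomy.
HIGH_RISK_LABELS: FrozenSet[str] = frozenset({"Suicidal"})


@dataclass(frozen=True)
class TriageDecision:
    top_label: str
    confidence: float
    needs_human_review: bool


def triage(confidences: Dict[str, float], threshold: float = 0.8) -> TriageDecision:
    """Flag a prediction for clinician review when the model is unsure
    or when the predicted condition is high risk (placeholder policy)."""
    if not confidences:
        raise ValueError("confidences must not be empty")
    top_label = max(confidences, key=confidences.get)
    confidence = confidences[top_label]
    needs_review = confidence < threshold or top_label in HIGH_RISK_LABELS
    return TriageDecision(top_label, confidence, needs_review)
```

Under this policy, a confident Anxiety prediction passes straight through. A near-tie between two conditions, or any top prediction in the high-risk set, is sent to a human reviewer regardless of confidence.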

Neurodiversity Considerations

One critical aspect often overlooked in mental health classification is the distinction between neurotypical and neurodiverse individuals. Differences in communication and emotional expression among people with autism or ADHD can significantly change how mental health difficulties show up in text. Models that do not account for these differences risk misclassifying or underrepresenting the experiences of neurodiverse populations.

Developing inclusive datasets that capture these nuances will be essential in enhancing the accuracy and reliability of mental health classification systems for all individuals.

Strengths and Limitations

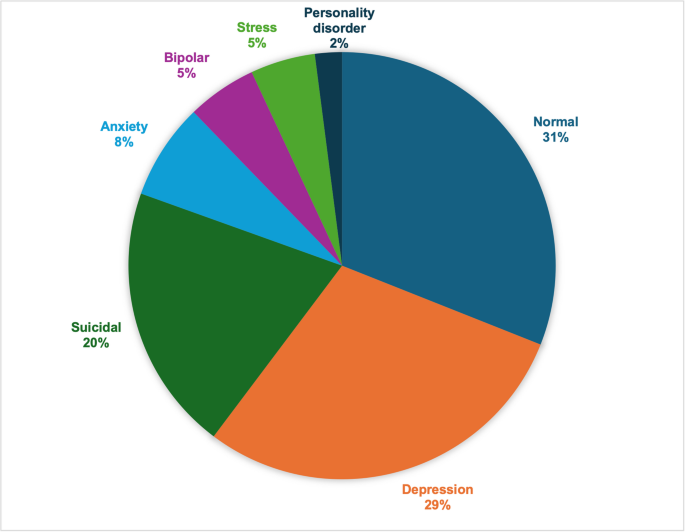

This study is commendable for its diverse dataset of more than 52,000 social media posts, which allowed thorough training and evaluation across numerous mental health conditions. It also emphasizes methodological transparency, making the work easier to reproduce and build on.

However, the limitations are noteworthy. Relying on social media posts introduces bias, because the labels stem from self-reported indicators rather than clinical validation. The study also does not consider neurodiversity, which may mask certain expressions of mental health conditions.

A Roadmap for Future Research

The insights garnered from this study pave the way for future advancements in mental health classification:

- Comprehensive Benchmarking of LLMs: Future studies should evaluate a broader range of language models, including newer models and those specialized for mental health.

- Clinically Validated Datasets: Datasets annotated by clinical professionals would make models more reliable.

- Neurodiverse-Inclusive Modeling: Datasets should capture the distinctive linguistic patterns of neurodiverse individuals.

- Expanded Indicator Sets: Extending models to cover additional mental health conditions would produce a finer-grained classification system.

- Ensemble Methodologies: Combining traditional NLP models with fine-tuned LLMs in an ensemble may yield better classification results.

- Clinical Implementation Studies: Validating AI models in clinical settings would establish their practical utility and performance thresholds.

- Longitudinal Monitoring Systems: Frameworks that track changes in mental health over time would allow for timely interventions.

- Human-AI Collaborative Systems: Ultimately, combining algorithmic capabilities with human expertise can balance technical innovation with empathetic patient care and improve mental health care overall.
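As a hypothetical illustration of the ensemble idea above, the sketch below performs soft voting by averaging the per-label probabilities from the two models. It assumes both models can output a probability for each condition. For a fine-tuned LLM, that would require an extra step, such as reading token log-probabilities, which is not shown here. `soft_vote` and `nlp_weight` are illustrative names.

```python
from typing import Dict


def soft_vote(
    nlp_probs: Dict[str, float], llm_probs: Dict[str, float], nlp_weight: float = 0.5
) -> Dict[str, float]:
    """Weighted average of two per-label probability distributions.

    Labels missing from one model's output are treated as probability 0,
    and the result is renormalised so it sums to 1.
    """
    if not 0.0 <= nlp_weight <= 1.0:
        raise ValueError("nlp_weight must be in [0, 1]")
    labels = set(nlp_probs) | set(llm_probs)
    combined = {
        label: nlp_weight * nlp_probs.get(label, 0.0)
        + (1.0 - nlp_weight) * llm_probs.get(label, 0.0)
        for label in labels
    }
    total = sum(combined.values())
    if total == 0:
        raise ValueError("both distributions are empty or all zero")
    return {label: score / total for label, score in combined.items()}
```

In practice, `nlp_weight` would be tuned on validation data. Weighting could also be done per condition, which would let an ensemble draw on the complementary precision and recall profiles of the two models.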

These future avenues highlight the importance of interdisciplinary collaboration among technologists, mental health professionals, and patient advocates in creating systems that are not only technically robust but also ethically sound and clinically beneficial.